Result of the Month

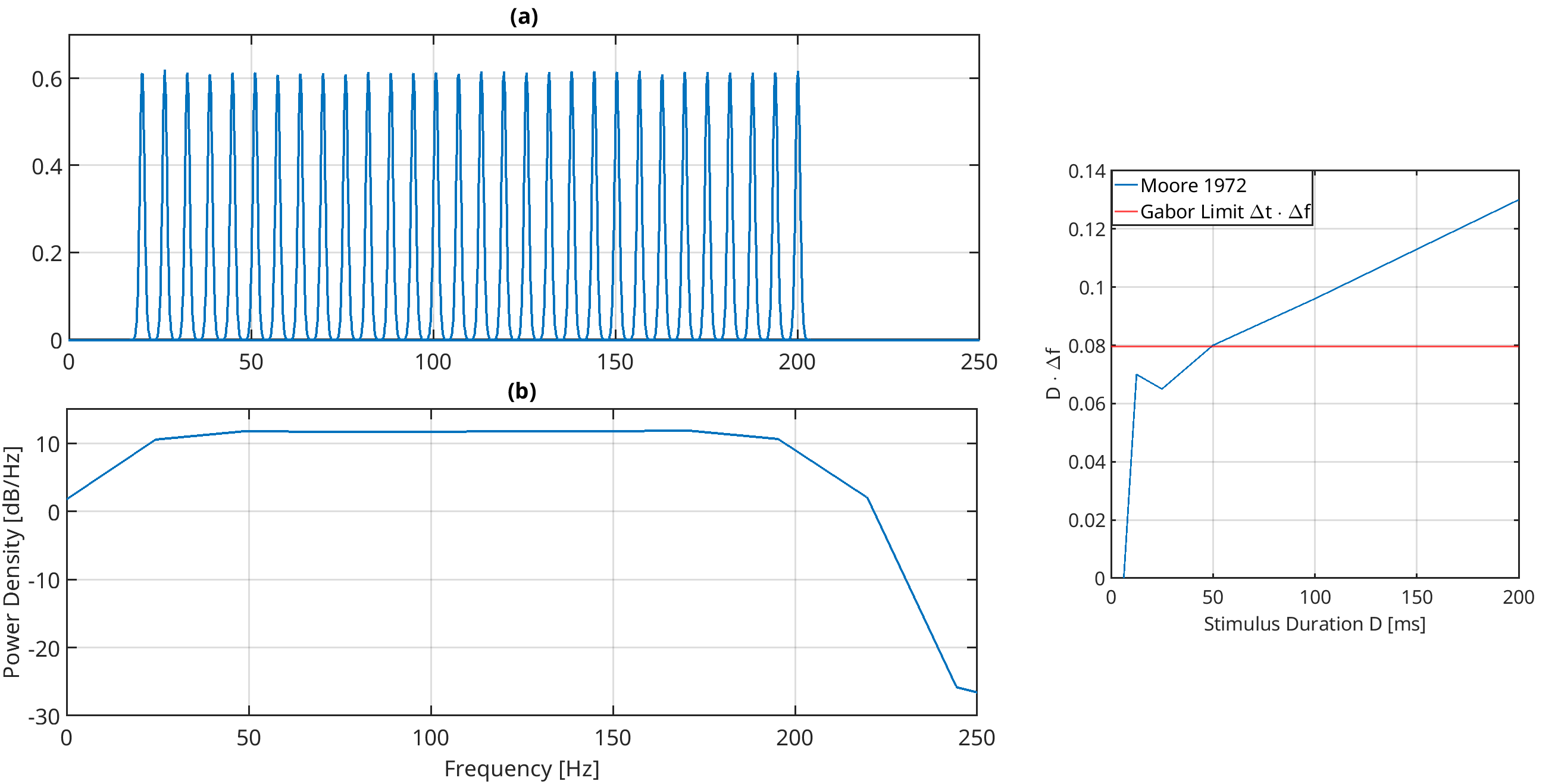

It is known that the human auditory system contains Outer Hair Cells (OHCs), which can be modeled as nonlinear oscillators. Further, it has a remarkable time-frequency resolution (Moore, 1973), with lower time-frequency resolution products below the Gabor limit (right figure). We show that this time-frequency resolution can be achieved with a Stuart-Landau Oscillator (SLO) that exhibits a subcritical Hopf bifurcation. This raises the question of whether continuously oscillating outer hair cells can exist without producing a noticeable tone (i.e., constant tinnitus)? Our results suggest yes, if these cells oscillate chaotically. When modeled as SLOs with white system noise, every cell exhibits narrowband chaos (top left figure), with the mean equal to the cell’s characteristic frequency. By looking at the summed output of an ensemble of OHCs, the power spectrum density becomes constant, indicating a Gaussian process rather than multiple pure tones (bottom left figure). This suggests that narrowband chaos is...

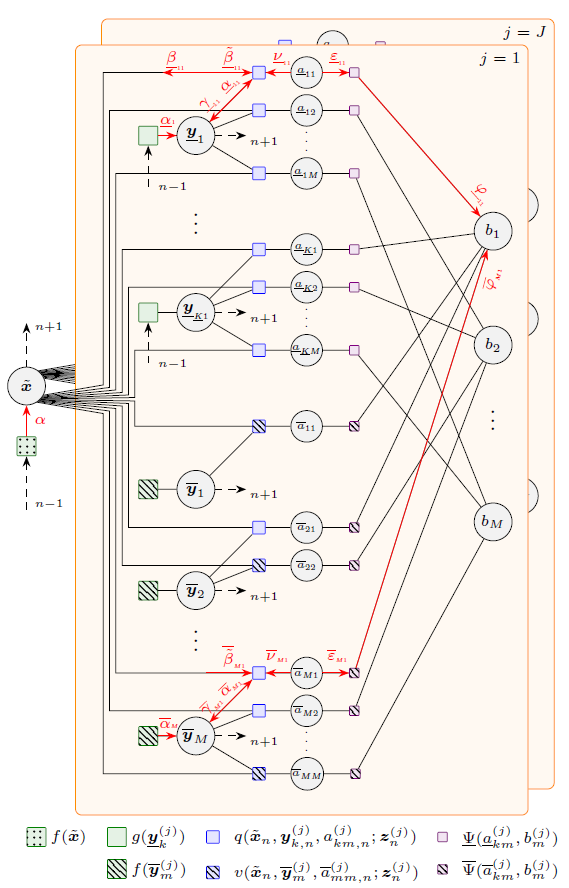

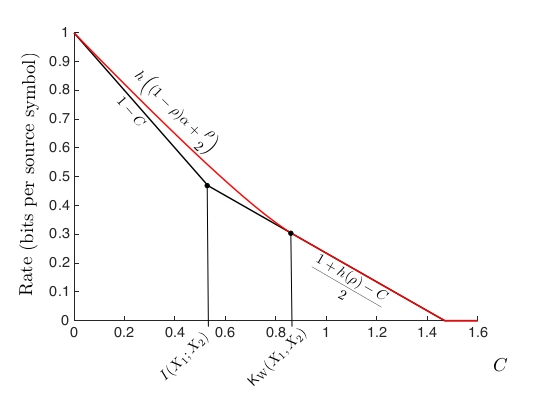

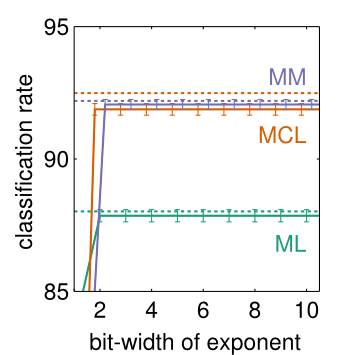

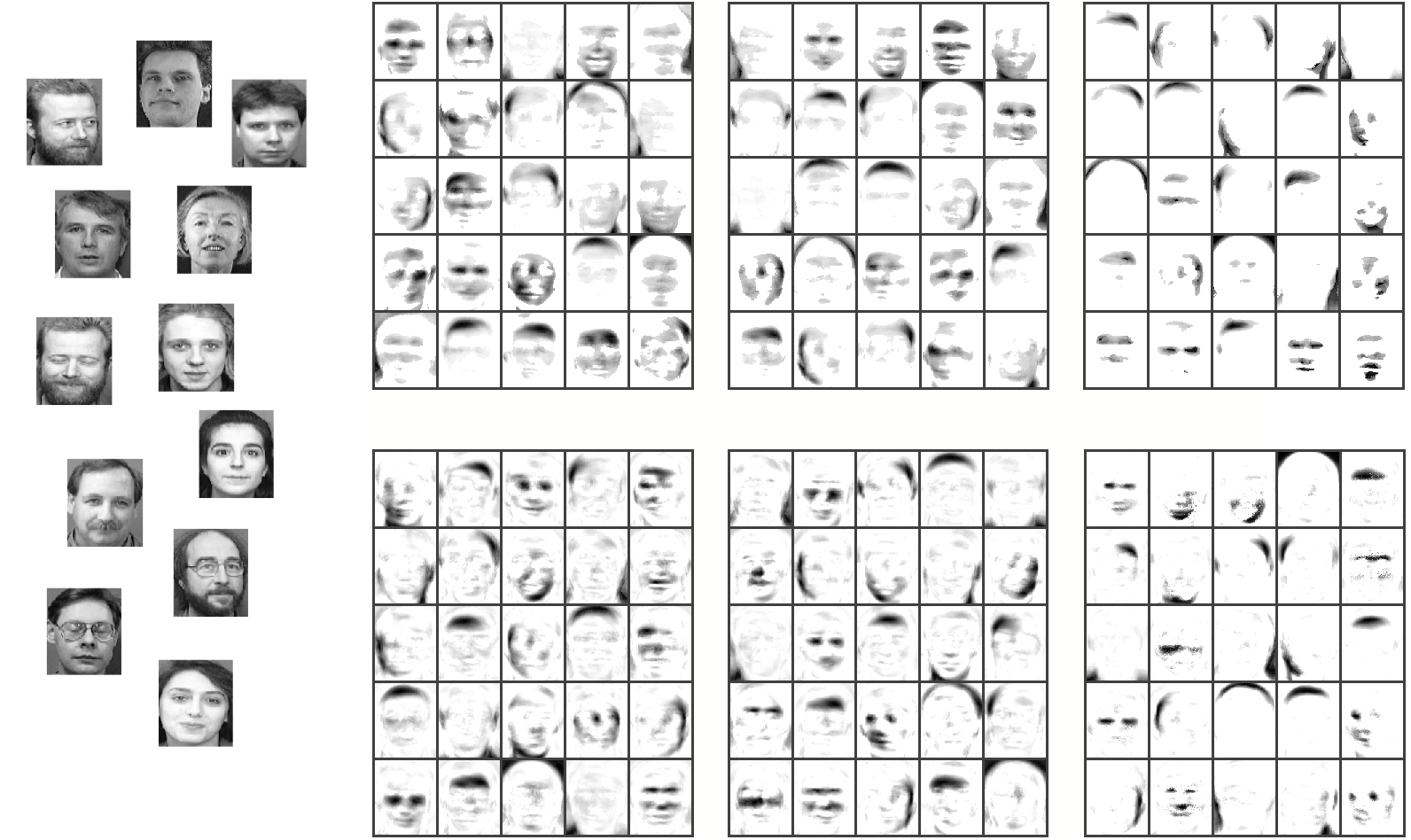

Ensuring trustworthiness in machine learning—by balancing utility, fairness, and privacy—remains a critical challenge, particularly in representation learning. In this work, we investigate a family of closely related information-theoretic objectives, including information funnels and bottlenecks, designed to extract invariant representations from data. We introduce the Conditional Privacy Funnel with Side-information (CPFSI), a novel formulation within this family, applicable in both fully and semi-supervised settings. Given the intractability of these objectives, we derive neural-network-based approximations via amortized variational inference. We systematically analyze the trade-offs between utility, invariance, and representation fidelity, offering new insights into the Pareto frontiers of these methods. Our results demonstrate that CPFSI effectively balances these competing objectives and frequently outperforms existing approaches. Furthermore, we show that by intervening on sensitive attributes in CPFSI’s predictive posterior enhances fairness while maintaining predictive performance. Our paper is published open-access in Machine Learning (Springer).

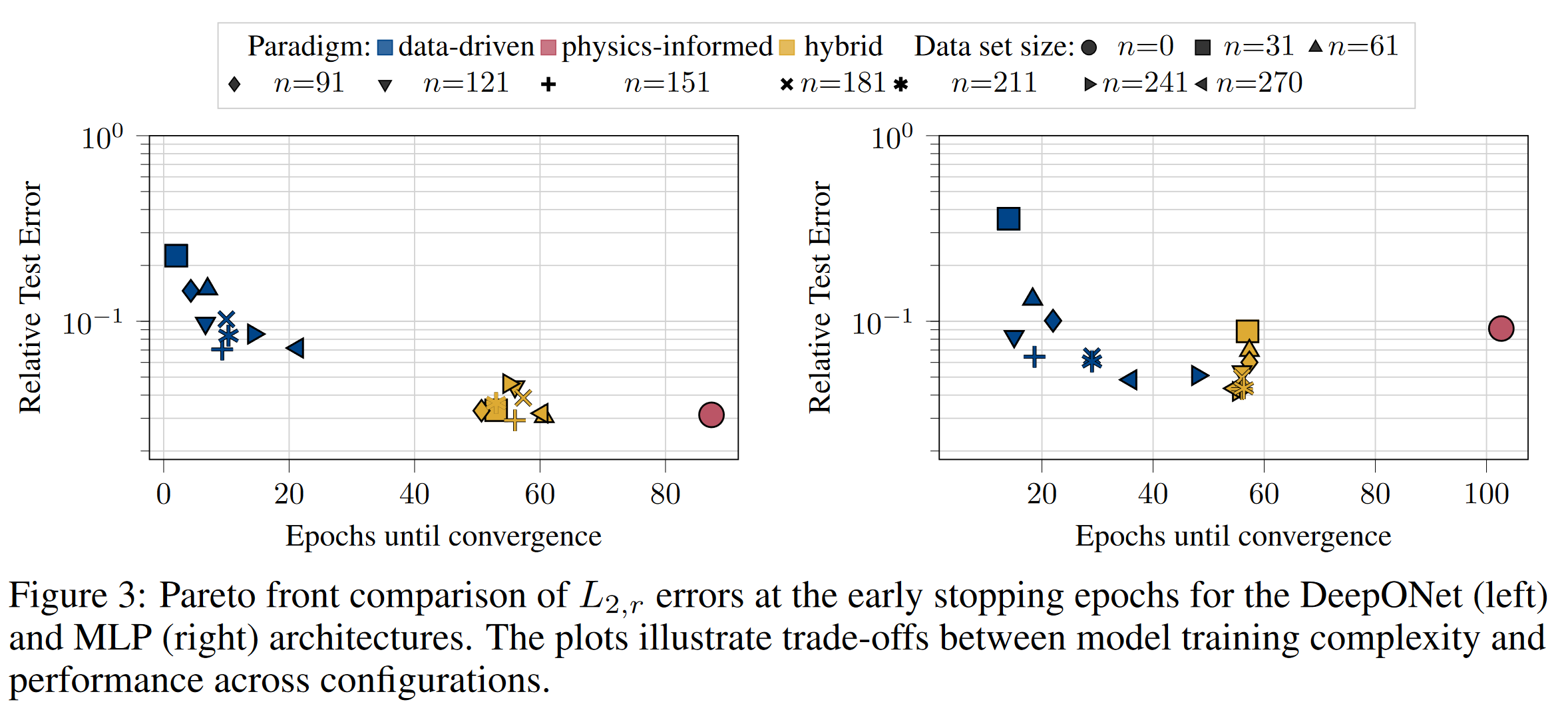

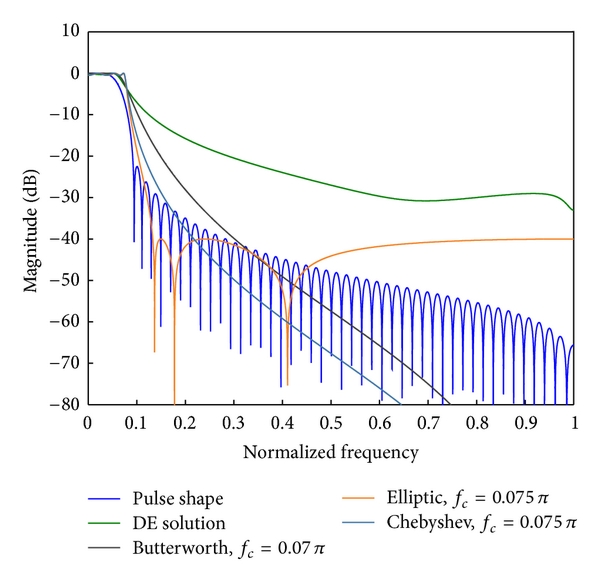

Operator networks have emerged as promising surrogate models, replacing computationally expensive numerical solvers for differential equations. Beyond achieving competitive accuracy with traditional solvers, the practical viability of this approach greatly depends on its training cost, which is comprised of ground truth data acquisition and network optimization. Physics-informed machine learning seeks to reduce reliance on labeled data by embedding the governing differential equations into the loss function; however, such models are often very challenging to train using physics constraints alone. In this work, we study how varying amounts of labeled data and architectural choices affect convergence and final performance in operator learning. Our results show that incorporating training data into a physics-informed training regime can significantly reduce training time. However, this effect does not scale with the amount of data used. Conversely, adding physics-based residuals can substantially enhance performance, if the network architecture is well-suited to exploit the underlying physical behavior....

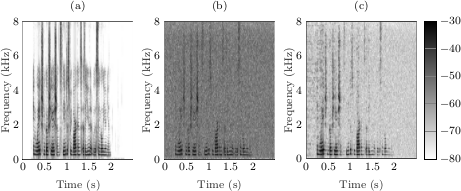

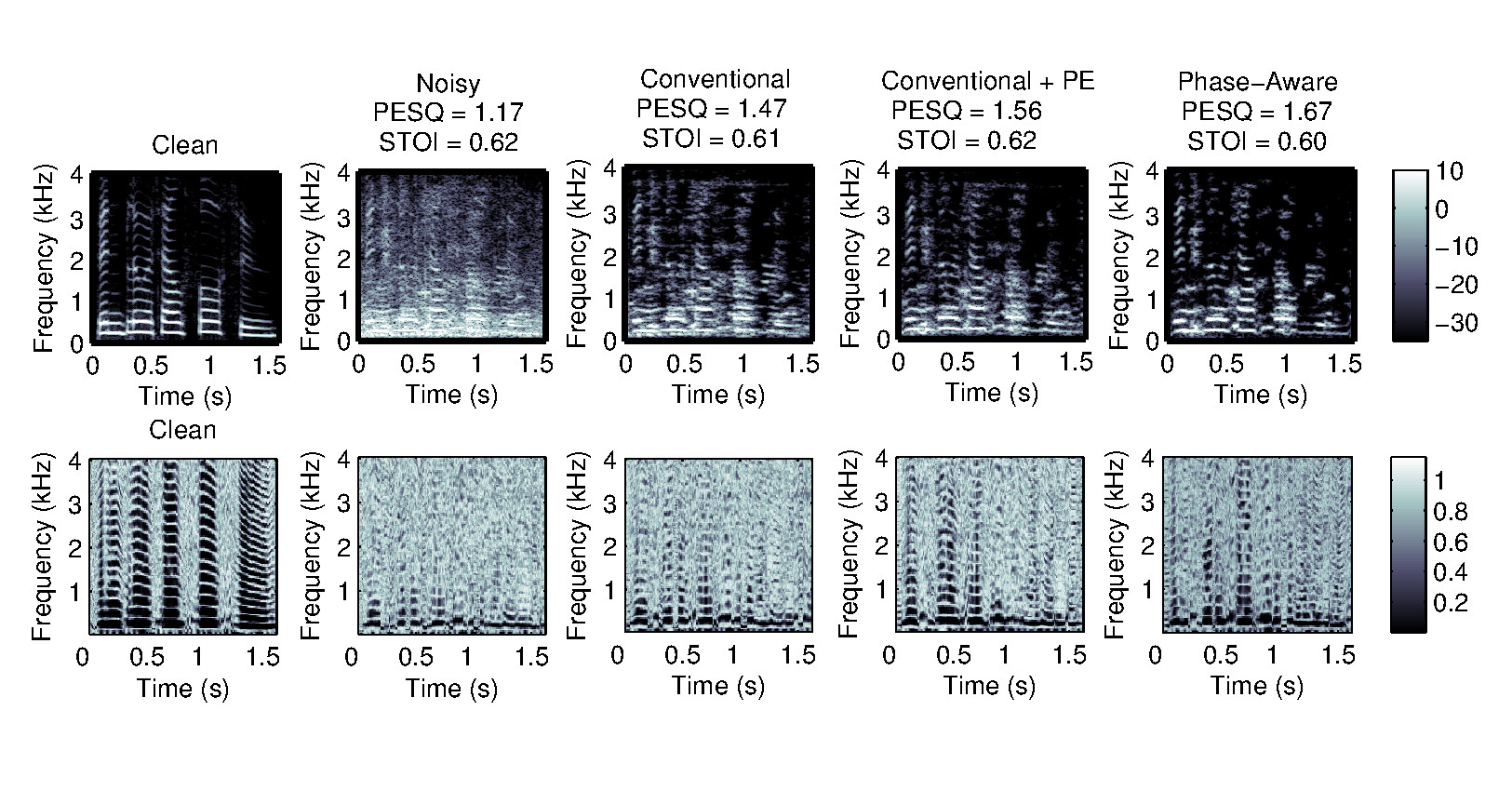

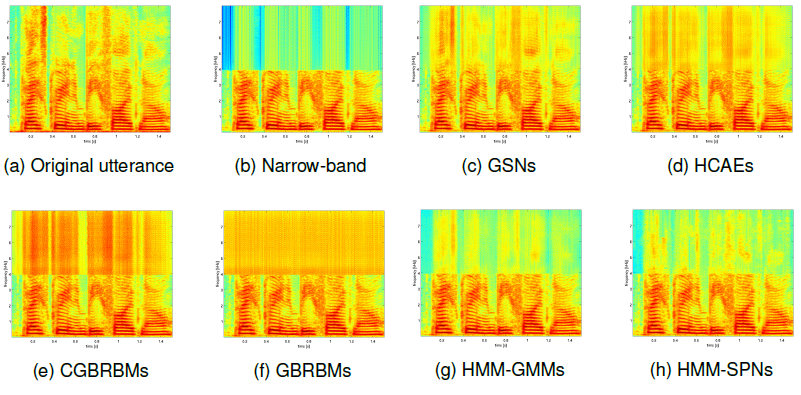

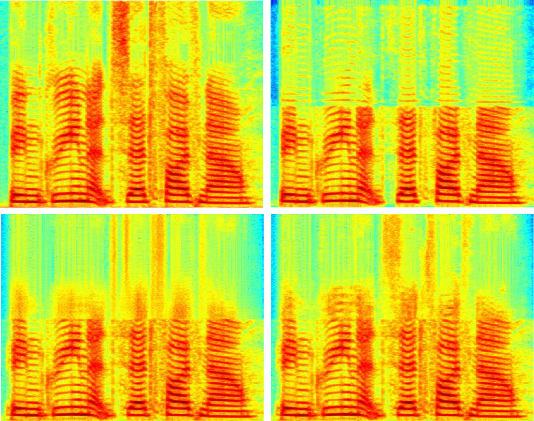

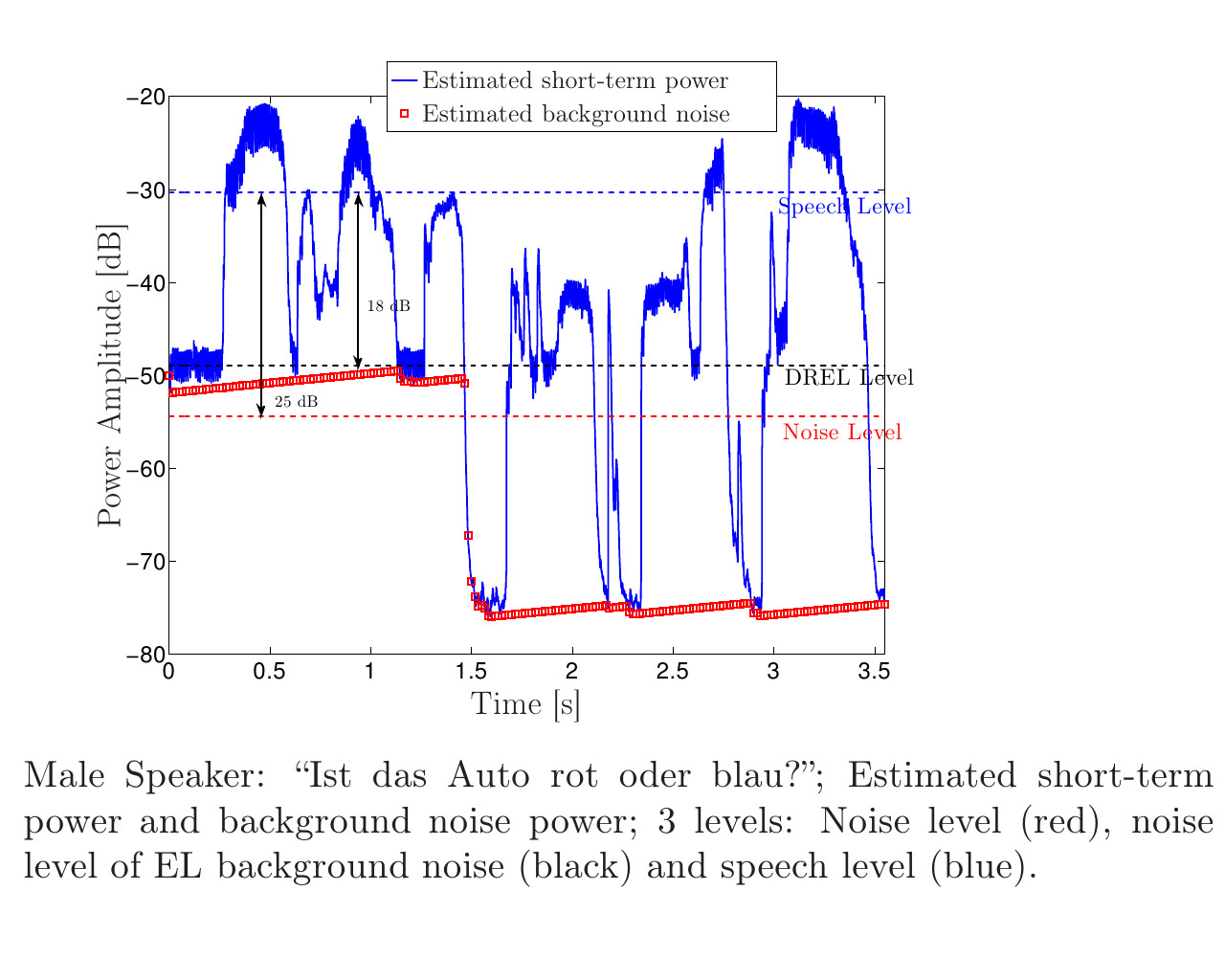

Voice disorders, such as dysphonia or speech produced using an electro-larynx, often result in reduced intelligibility and unnatural prosody and speech quality. This paper investigates the potential of modern voice conversion (VC) technologies to restore healthy-sounding speech from pathological inputs. Four state-of-the-art VC models (FreeVC, QuickVC, LLVC, and XVC) were fine-tuned on Austrian-German datasets and evaluated using both objective and subjective measures. Results show substantial improvements in perceived naturalness, intelligibility, and vocal health, with listener preference scores exceeding those of the original pathological speech by up to 200 %. The study employs large-scale listening tests and quantitative analyses to assess intelligibility, rhythm, perceived vocal quality and preference ratings across 93 participants. The best-performing models (QuickVC, FreeVC, and XVC) consistently improved the naturalness and healthiness of the converted voices, while LLVC, although capable of real-time processing on CPUs, showed limited synthesis quality. Spectral analyses confirm that VC restores formant structure and...

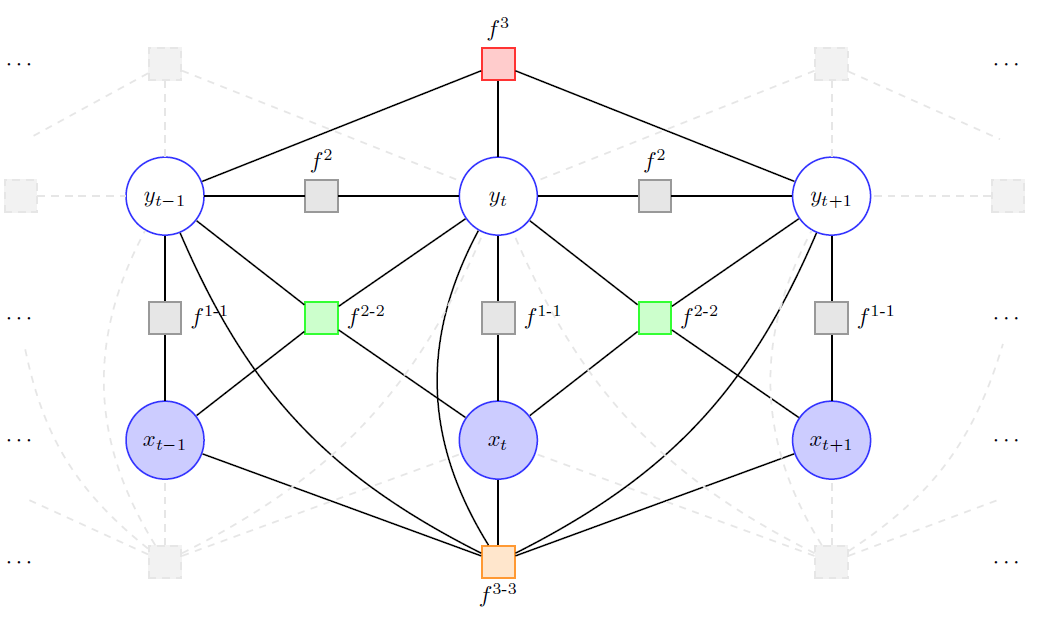

Human communication is a remarkably coordinated activity. Successful interaction not only relies on the words themselves, but also on how these words are said, on subtle cues and fine-grained timing between conversational partners. Speakers continuously adjust to each other in real time, relying not only on linguistic content but also on prosody, gestures and context. This dynamic behavior is especially evident in spontaneous conversation, where speakers rarely plan their turns in advance but instead co-construct utterances on-the-fly and in conjunction with their interlocutors. Understanding how this coordination unfolds, particularly in the area of turn-taking, remains a central challenge for speech scientists and technologists aiming to understand and model human speech processing in spontaneous human conversation. This paper explores backchannels, short listener responses such as “mhm”, which play an important role in managing turn-taking and grounding in spontaneous conversation. The study investigates if and when backchannels occur by taking into account...

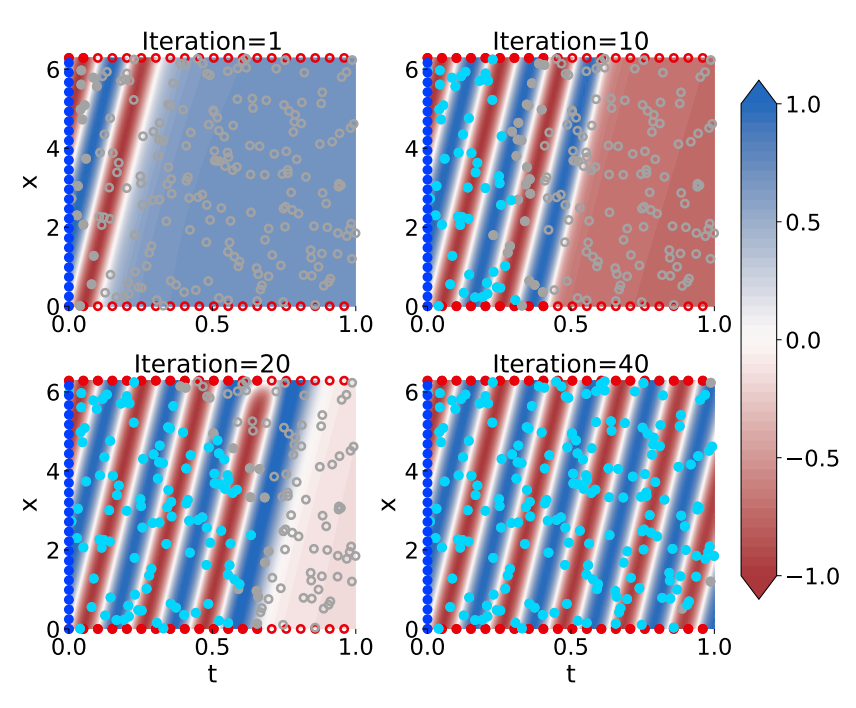

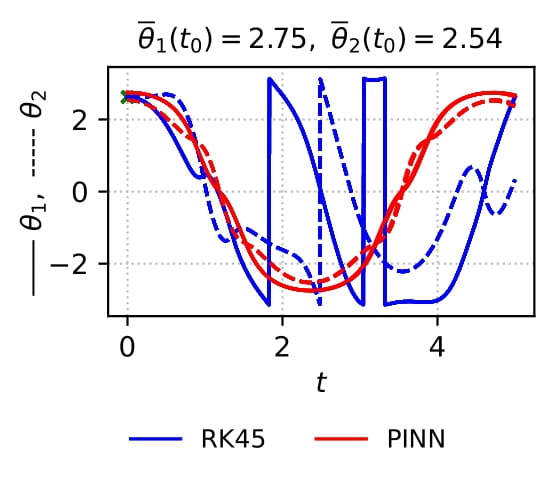

Physics-informed neural networks (PINNs) are known to have poor training convergence if they are used to solve boundary value problems, i.e., if they should learn the solution to a partial differential equation given only initial and boundary conditions. Previous work has shown that training is more stable if the computational domain – the extent of the space and time coordinates for which the PDE should be solved – is small. As a consequence, a series of domain decomposition and collocation point sampling methods were proposed to improve training convergence. Specifically, Haitsiukevich and Ilin (2023) proposed an ensemble approach that extends the active training domain of each PINN based on i) ensemble consensus and ii) vicinity to (pseudo-)labeled points, thus ensuring that the information from the initial condition successfully propagates to the interior of the computational domain. In this work with Kevin Innerebner and Franz Rohrhofer, we replaced the ensemble by...

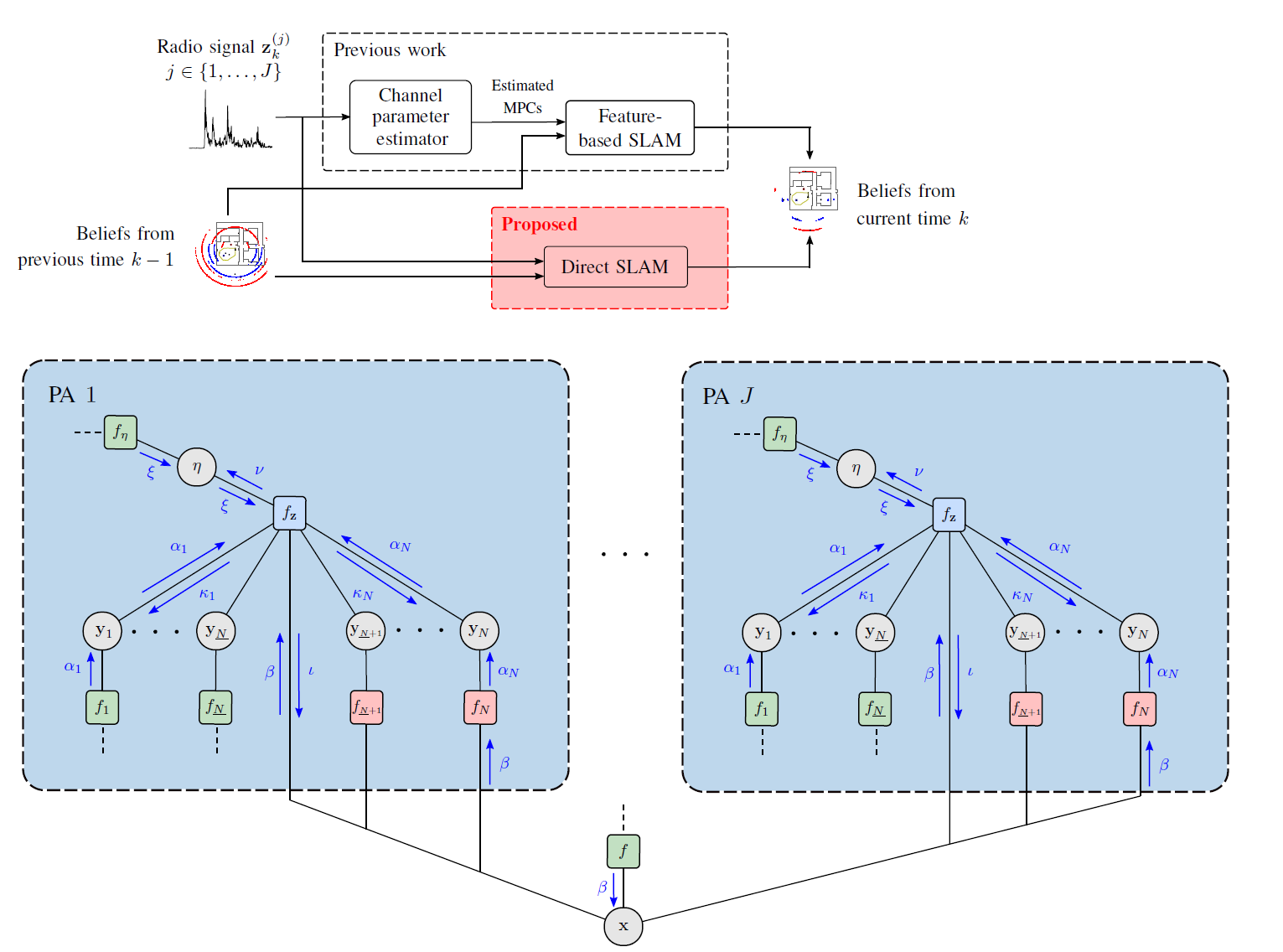

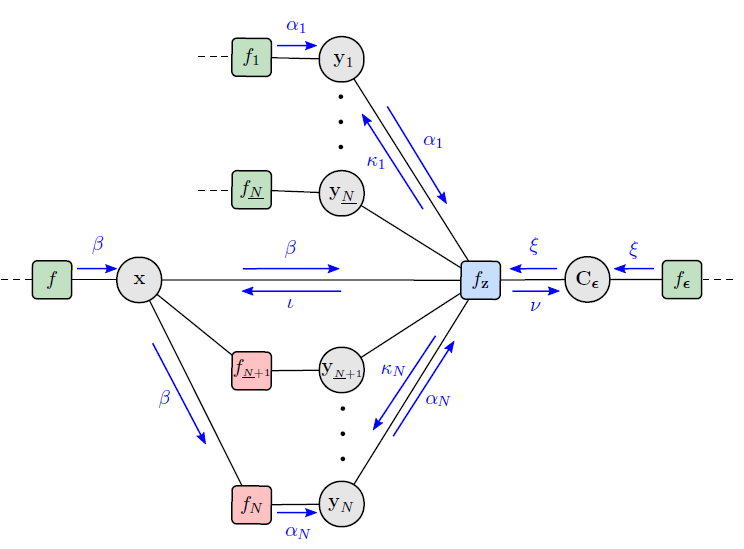

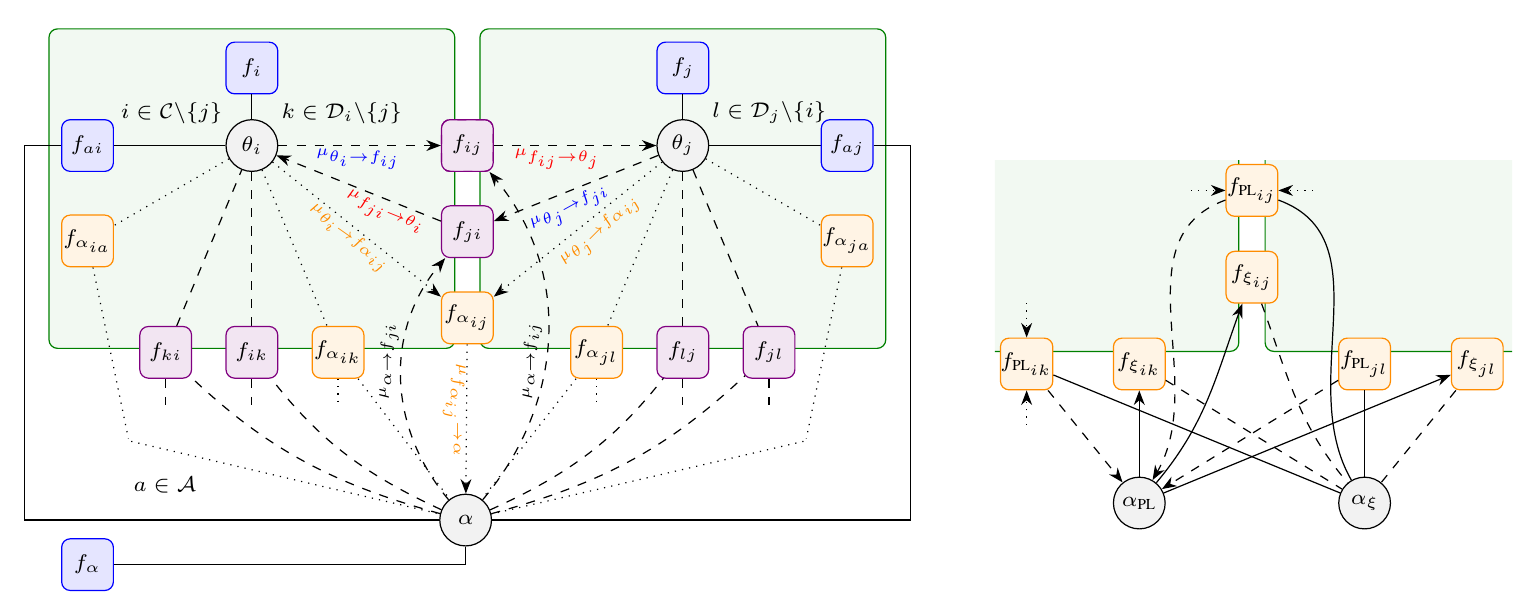

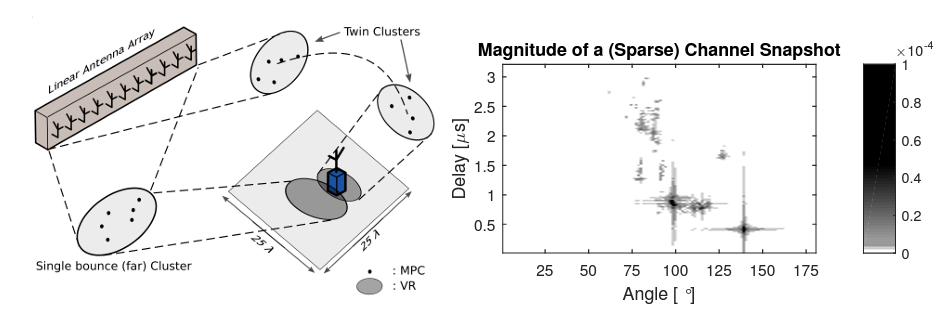

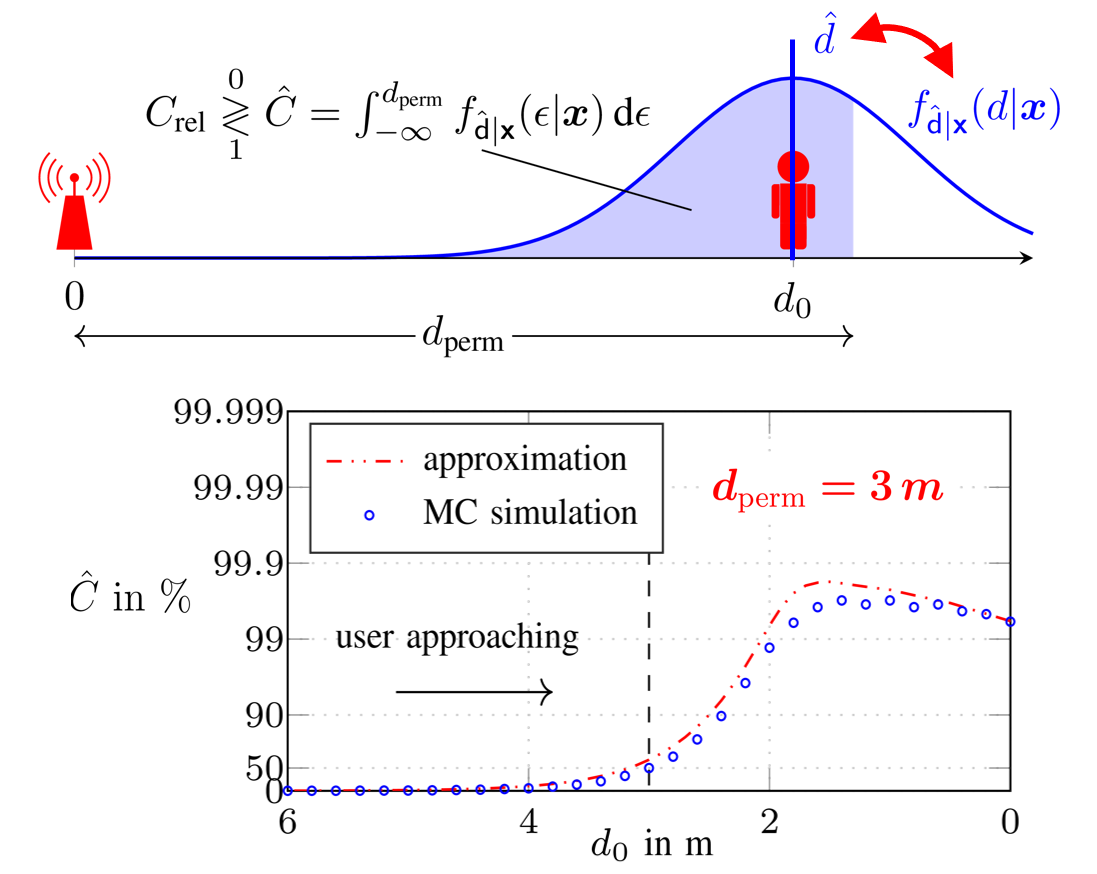

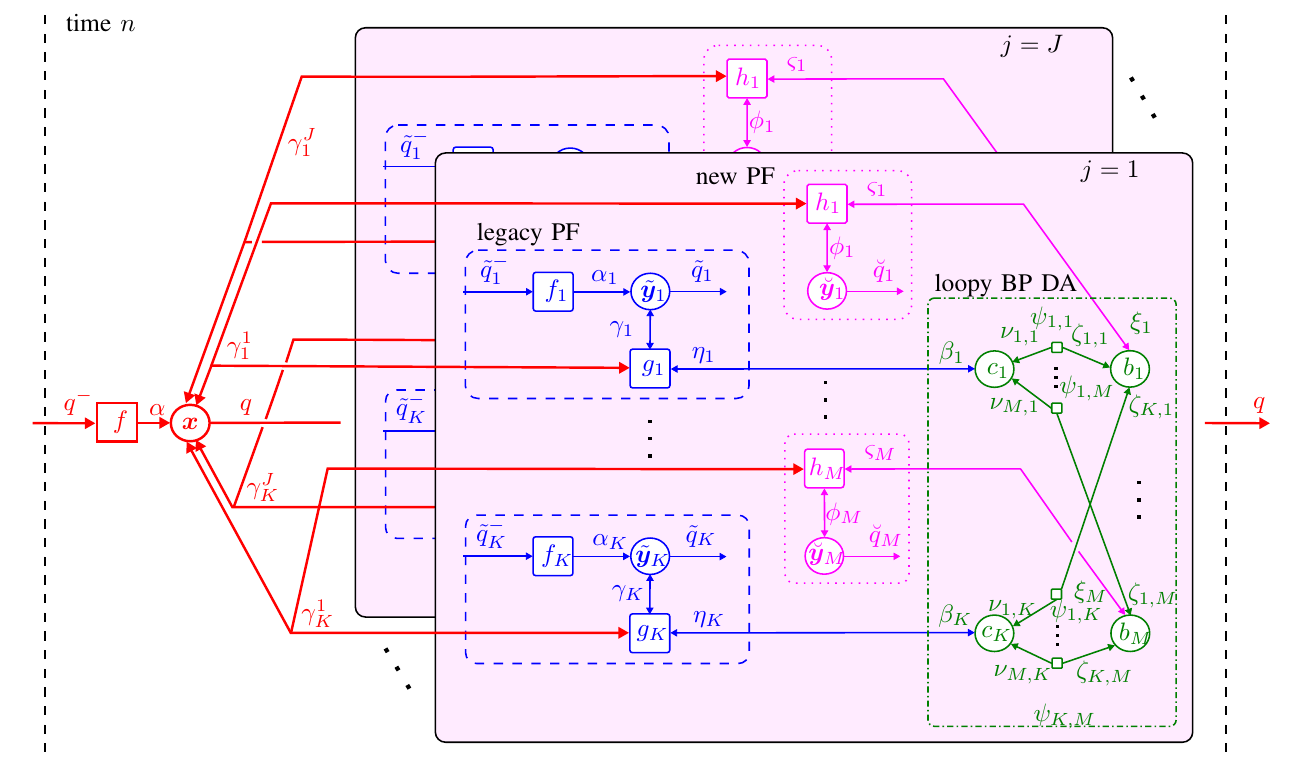

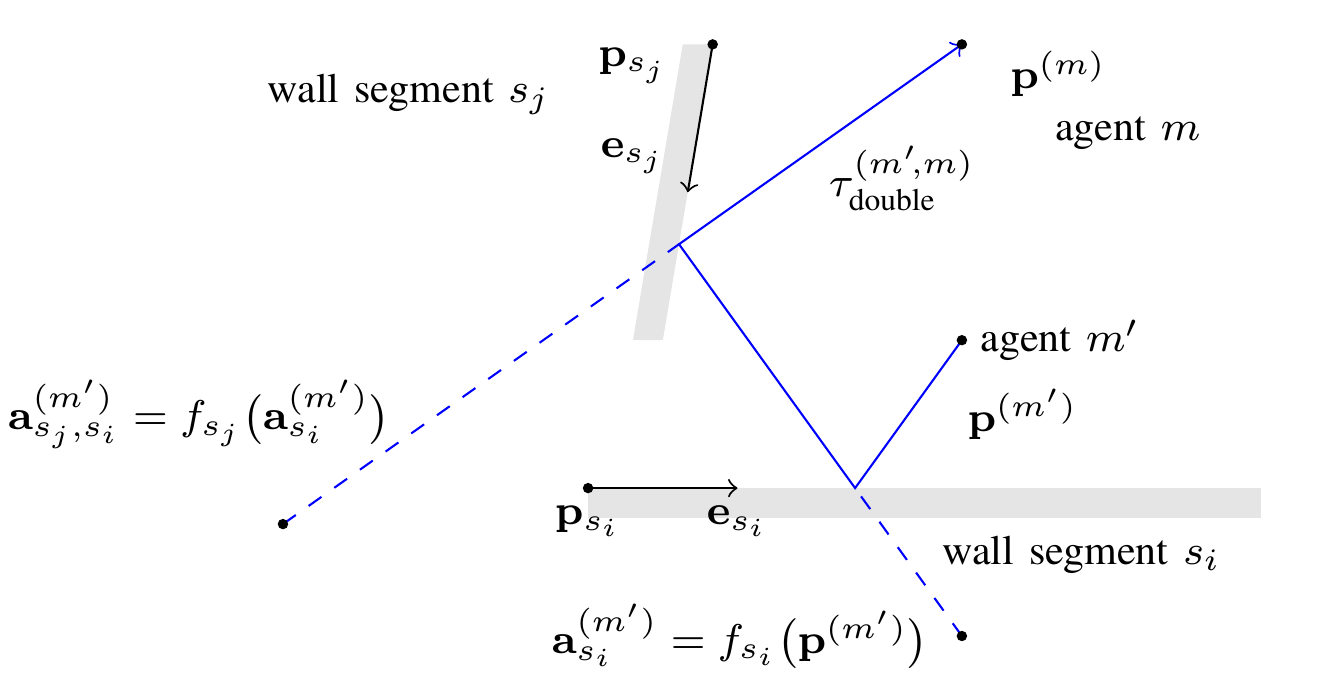

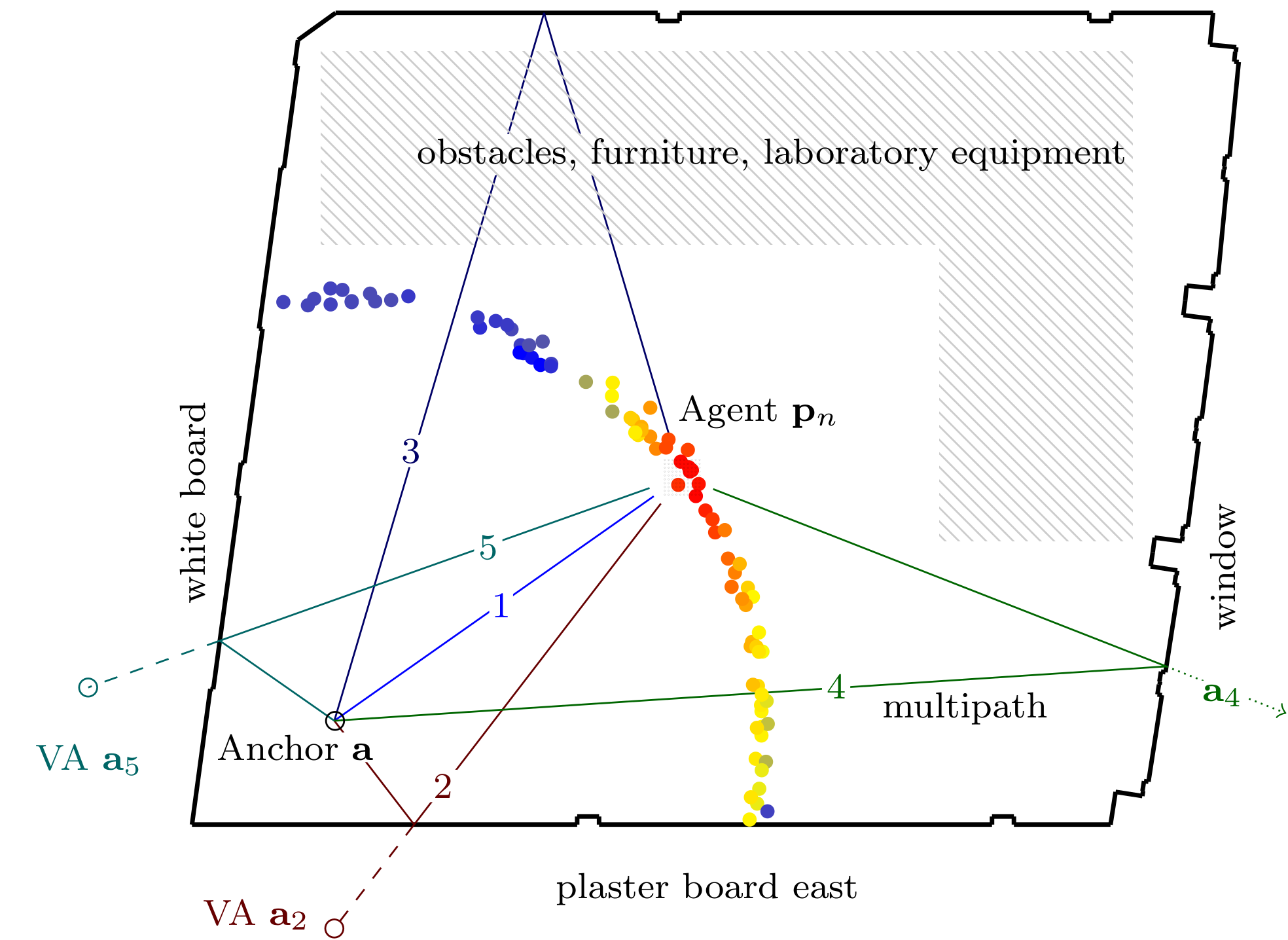

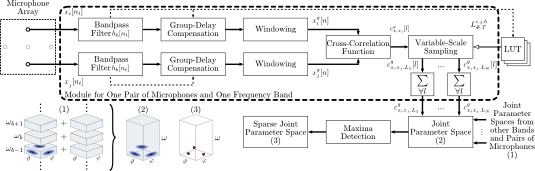

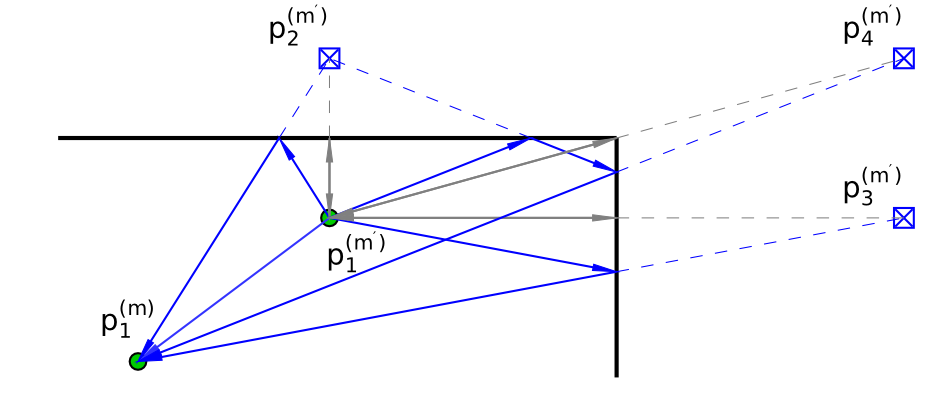

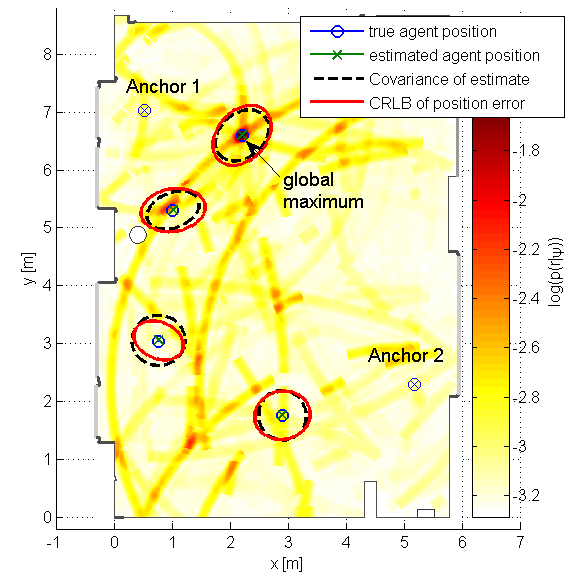

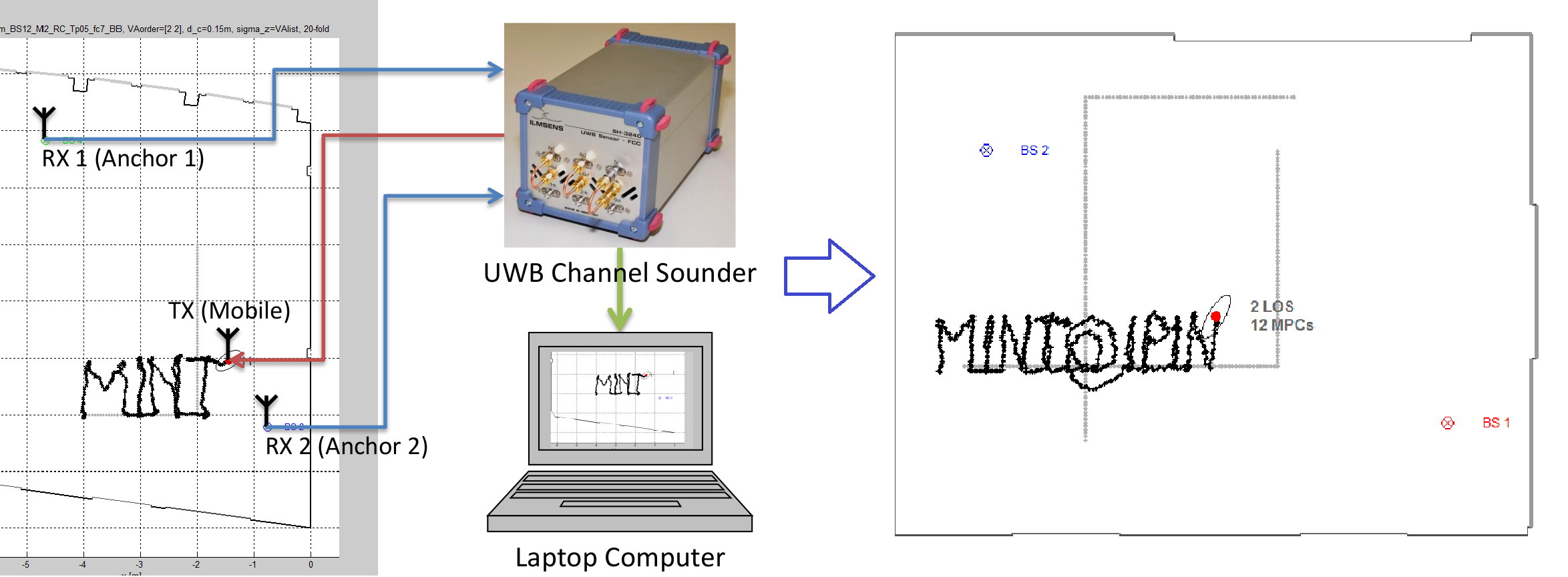

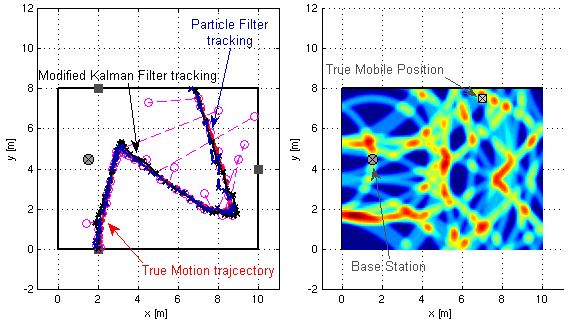

Localizing users and mapping the environment using radio signals is a key task in emerging applications such as reliable, low-latency communications, location-aware security, and safety-critical navigation. Recently introduced multipath-based simultaneous localization and mapping (MP-SLAM) can jointly localize a mobile agent (i.e., the user) and the reflective surfaces (such as walls) in radio frequency (RF) environments with convex geometries. Most existing MP-SLAM methods assume that map features and their corresponding RF propagation paths are statistically independent. These existing methods neglect inherent dependencies that arise when a single reflective surface contributes to different propagation paths or when an agent communicates with more than one base station (BS). In our paper [1], we propose a Bayesian MP-SLAM method for distributed MIMO systems that addresses this limitation. In particular, we make use of amplitude statistics to establish adaptive time-varying detection probabilities. Based on the resulting “soft” ray-tracing strategy, our method can fuse information across...

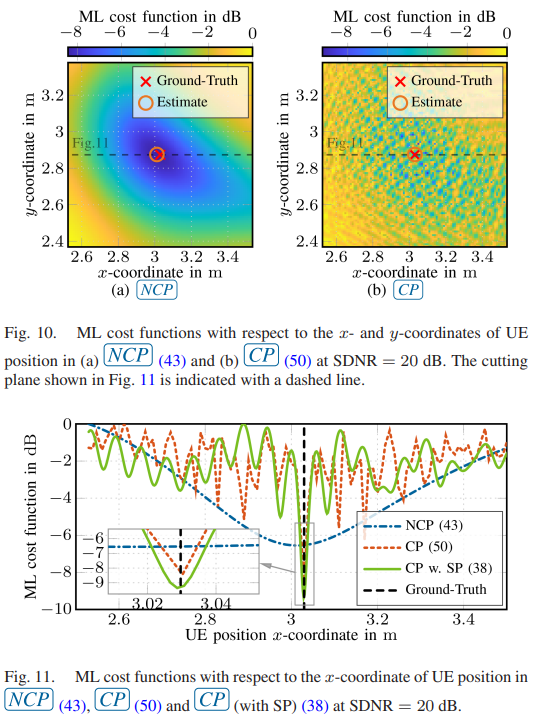

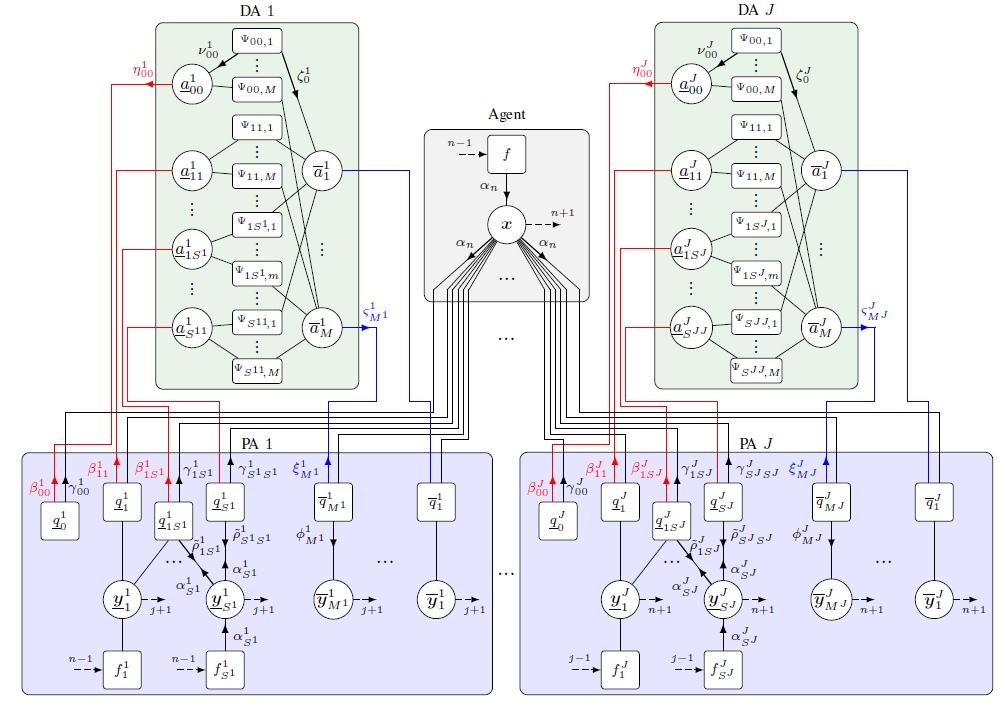

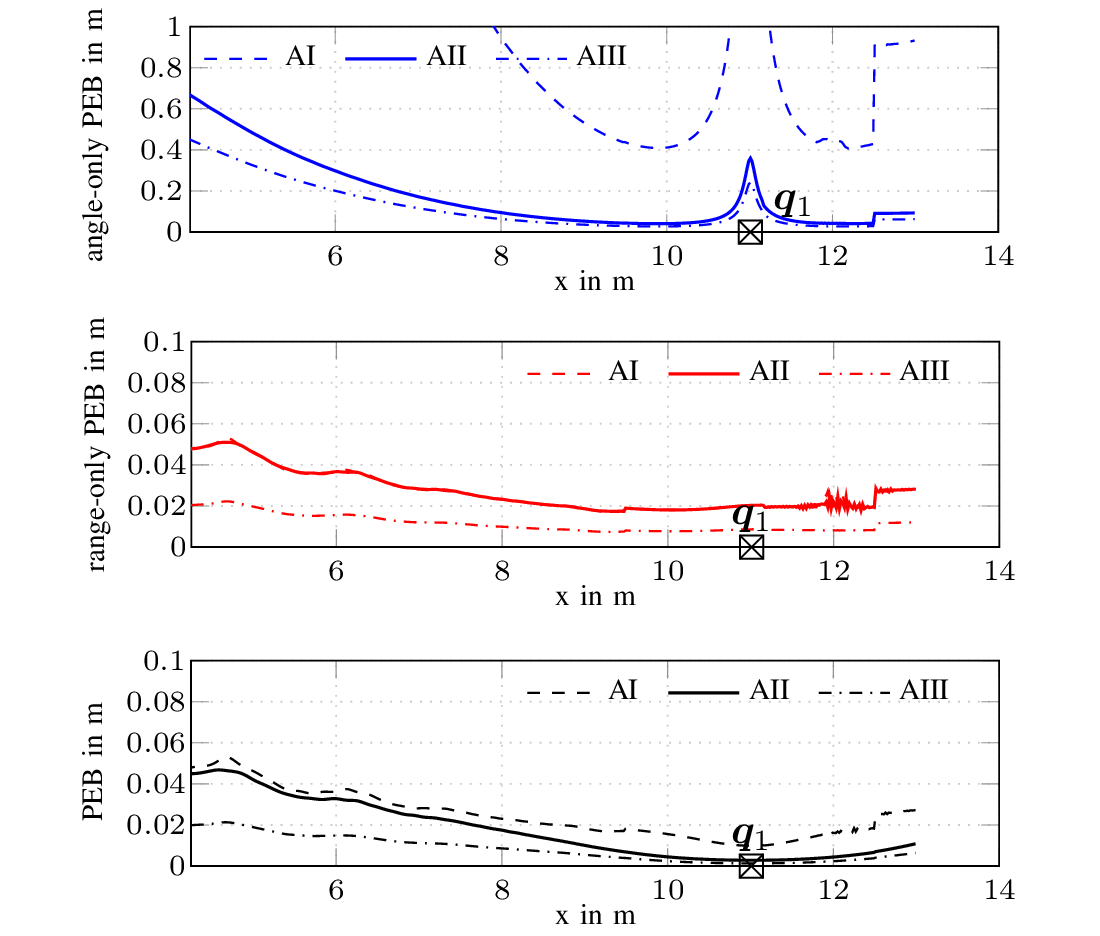

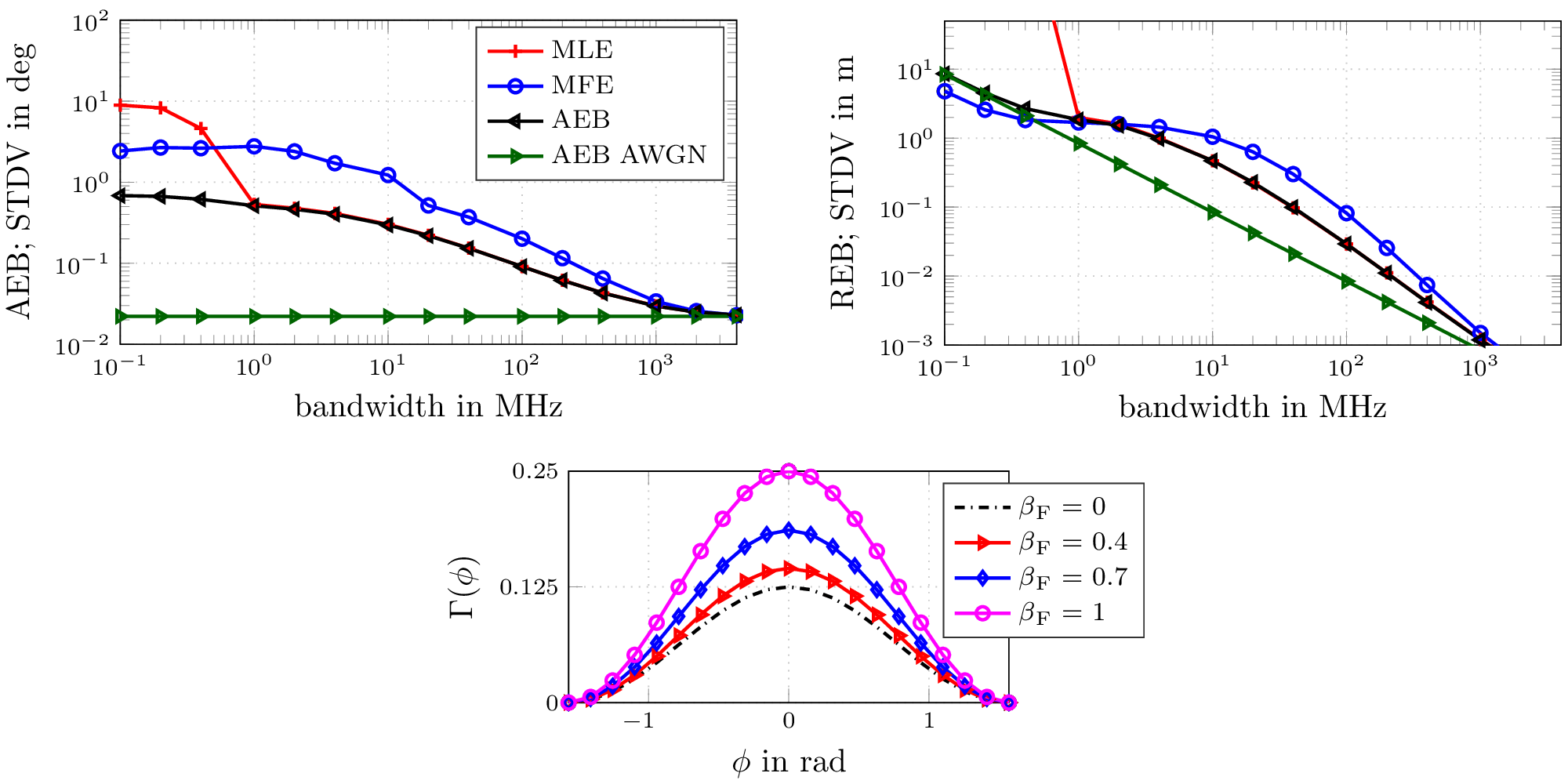

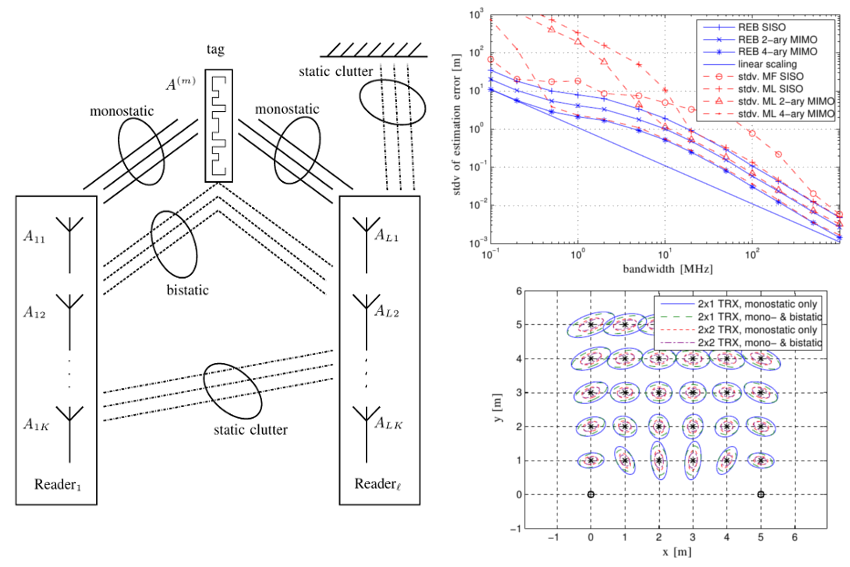

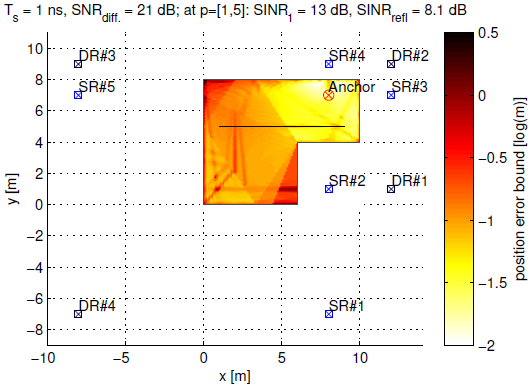

Extremely large-scale antenna array (ELAA) systems emerge as a promising technology in beyond 5G and 6G wireless networks to support the deployment of distributed architectures. This paper explores the use of ELAAs to enable joint localization, synchronization and mapping in sub-6 GHz uplink channels, capitalizing on the near-field effects of phase-coherent distributedm arrays. We focus on a scenario where a single-antenna user equipment (UE) communicates with a network of access points (APs) distributed in an indoor environment, considering both specular reflections from walls and scattering from objects. The UE is assumed to be unsynchronized to the network, while the APs can be timeand phase-synchronized to each other. We formulate the problem of joint estimation of location, clock offset and phase offset of the UE, and the locations of scattering points (SPs) (i.e., mapping). Through comprehensive Fisher information analysis, we assess the impact of bandwidth, AP array size, wall reflections, SPs...

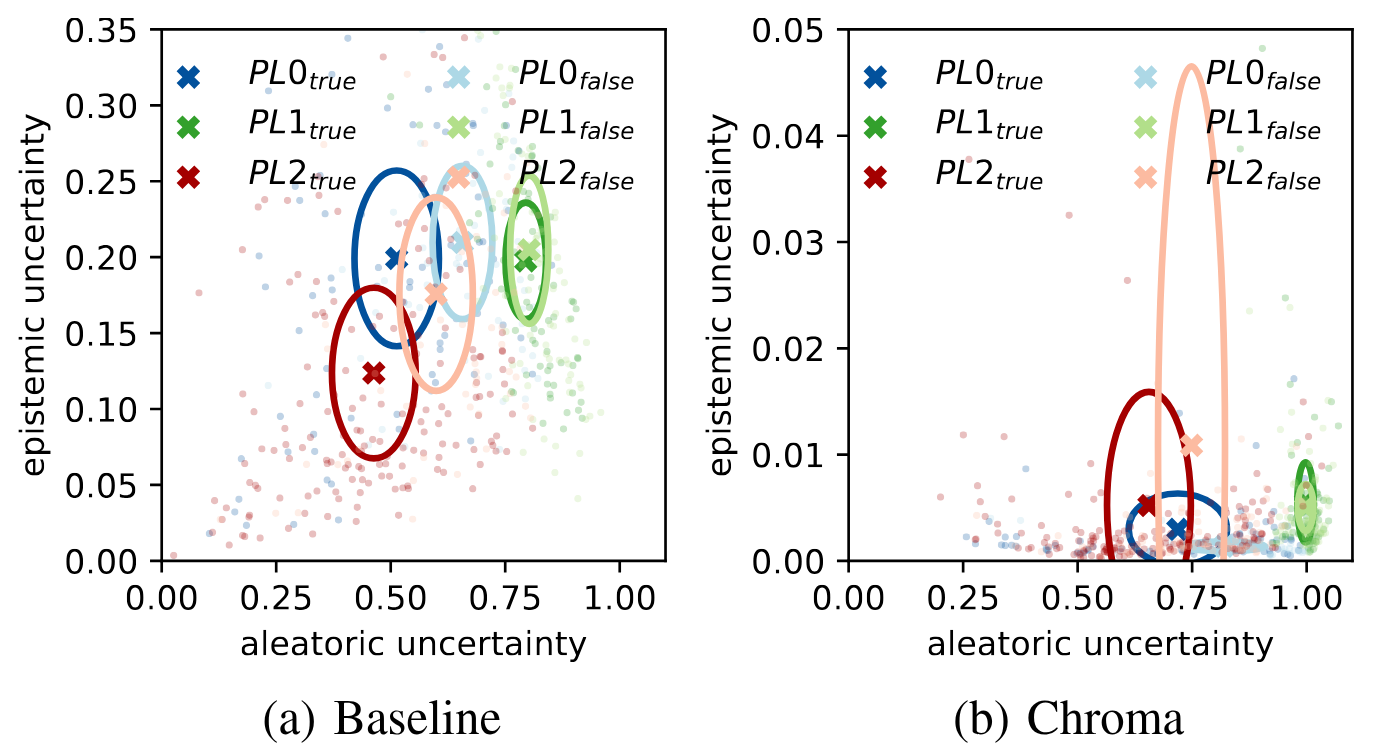

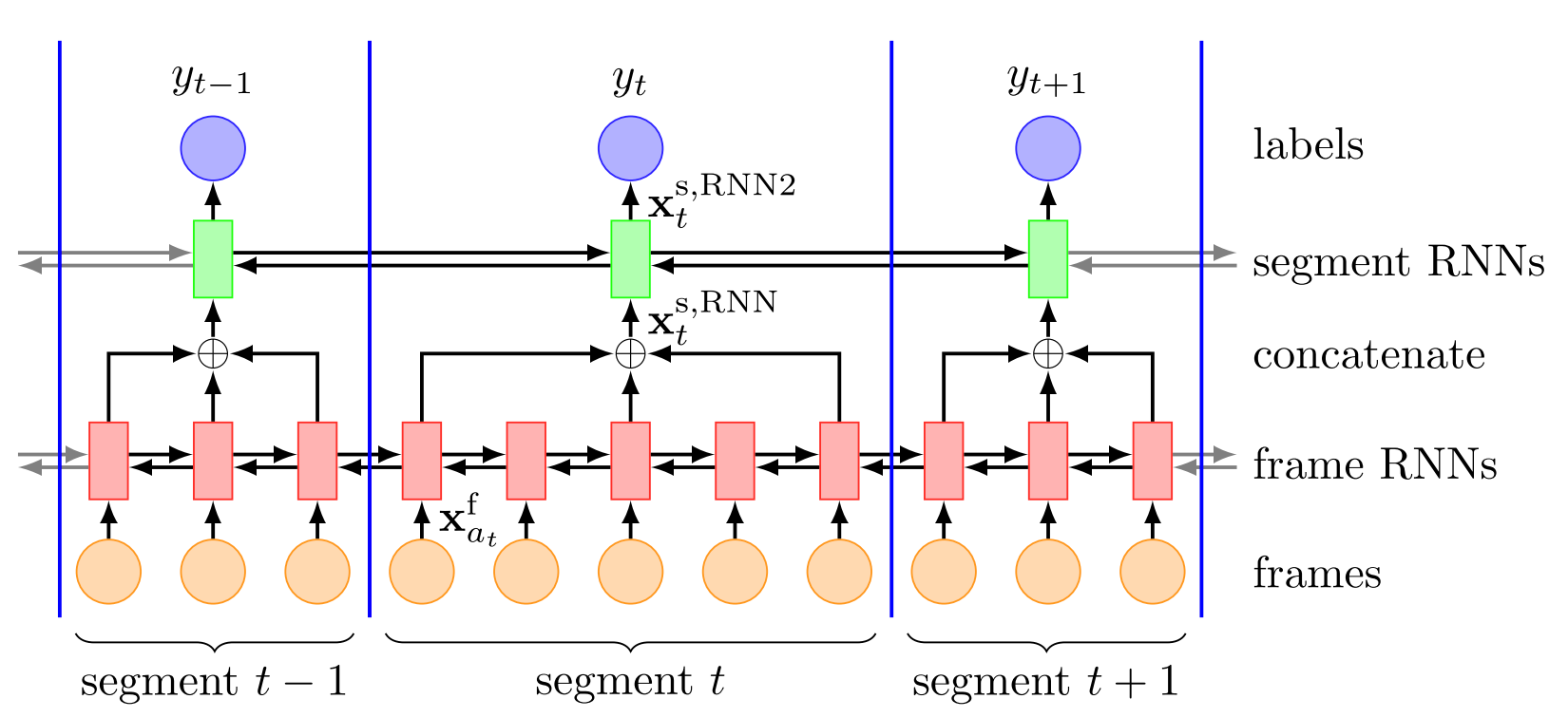

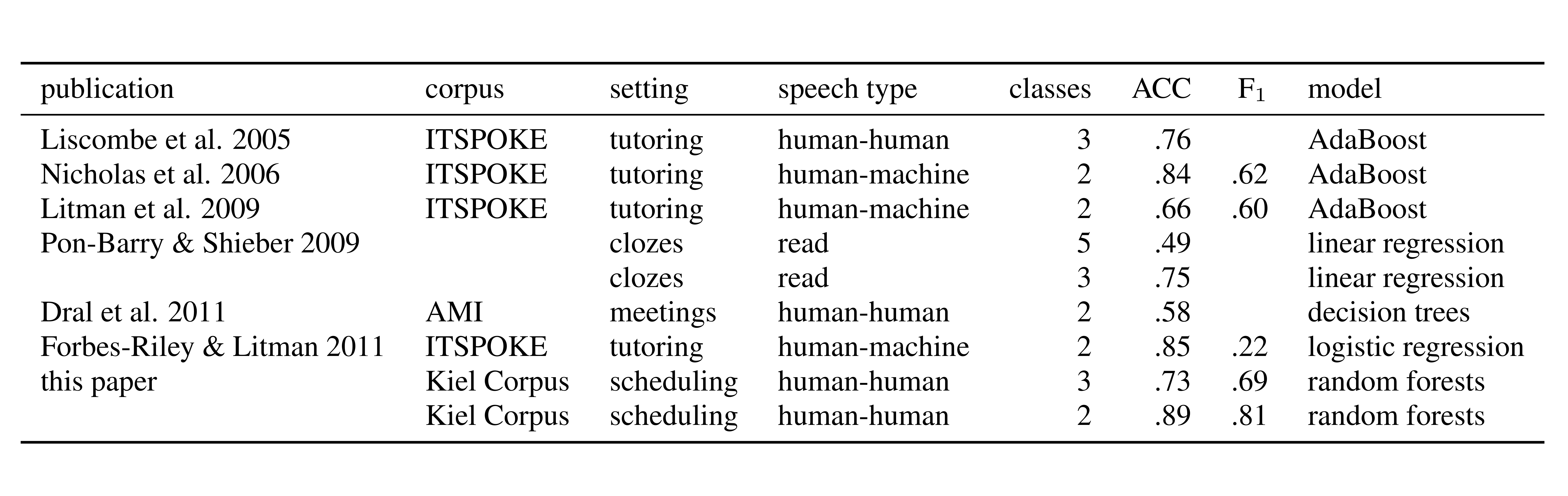

This paper presents methods for prominence classification in conversational speech. Most existing tools rely on prosodic features extracted at syllable- or phone-level, performing well on read speech. This is not the case for conversational speech, where the quality of automatic segmentation is significantly worse. We introduce entropy-based chroma features, requiring only word-level segmentations. They perform equally well as a random forest classifier with prosodic features (requiring phone-level segmentation), with accuracies in the range of the human inter-rater agreement. We further use Bayesian deep learning to quantify the epistemic and aleatoric uncertainty of the prediction for prosodic and chroma features. Whereas the aleatoric uncertainty is, as expected, consistent with inter-rater agreement and similarly high for both feature sets, the epistemic uncertainty is lower for the classifier based on chroma features, indicating higher classification consistency across the corpus.

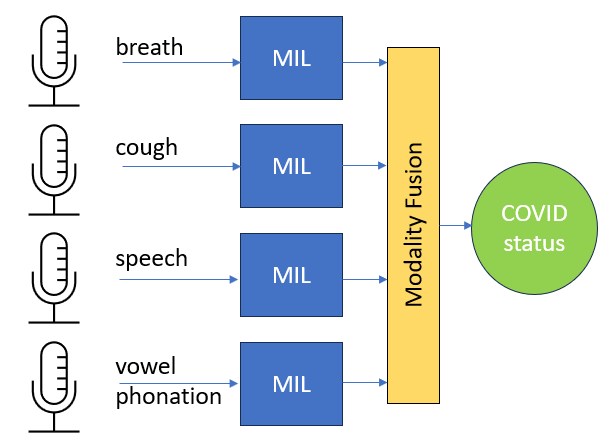

In the COVID-19 pandemic, a rigorous testing scheme was crucial. However, tests can be time-consuming and expensive. A machine learning-based diagnostic tool for audio recordings could enable widespread testing at low costs. In order to achieve comparability between such algorithms, the DiCOVA challenge was created. It is based on the Coswara dataset offering the recording categories cough, speech, breath and vowel phonation. Recording durations vary greatly, ranging from one second to over a minute. A base model is pre-trained on random, short time intervals. Subsequently, a Multiple Instance Learning (MIL) model based on self-attention is incorporated to make collective predictions for multiple time segments within each audio recording, taking advantage of longer durations. In order to compete in the fusion category of the DiCOVA challenge, we utilize a linear regression approach among other fusion methods to combine predictions from the most successful models associated with each sound modality. The application...

DER STANDARD reports on our speech group’s research and the challenges of speech recognition in the Styrian dialect. Thanks to Barbara Schuppler and her PhD students Julian Linke, Saskia Wepner and Anneliese Kelterer for their work! The STANDARD readers were excited about the article and left great comments as for example: Wir befinden uns im Jahre 2100 n.Chr. Die ganze Welt ist von den Maschinen regiert… Die ganze Welt? Nein! Ein von unbeugsamen Steirern bevölkertes Bundesland hört nicht auf, den Maschinen Widerstand zu leisten. Und das Leben ist nicht leicht für die KI, die als Besatzung in den befestigten Lagern Leibnitz, Graz, Deutschlandsberg und Leoben liegen. Trotz intensiver Anstrengungen konnte die superhumane Intelligenz die Kommunikation der Menschen nicht decodieren. Wir begleiten den Murauer Ousterix auf seinen Abenteuern, eine letzte Insel menschlicher Irrationalität zu erhalten. After completing the FWF project on the development of cross-layer models for conversational speech, their work...

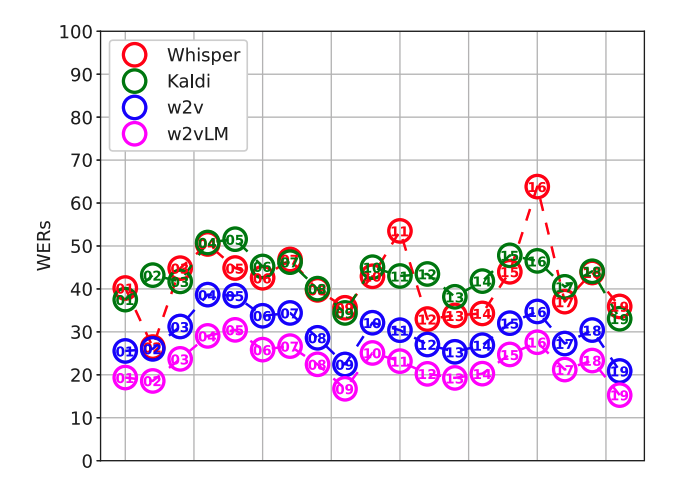

Highly performing speech recognition is important for more fluent human–machine interaction (e.g., dialogue systems). Modern ASR architectures achieve human-level recognition performance on read speech but still perform sub-par on conversational speech, which arguably is or, at least, will be instrumental for human–machine interaction. Understanding the factors behind this shortcoming of modern ASR systems may suggest directions for improving them. In this work, we compare the performances of HMM- vs. transformer-based ASR architectures on a corpus of Austrian German conversational speech. Specifically, we investigate how strongly utterance length, prosody, pronunciation, and utterance complexity as measured by perplexity affect different ASR architectures. Among other findings, we observe that single-word utterances – which are characteristic of conversational speech and constitute roughly 30% of the corpus – are recognized more accurately if their F0 contour is flat; for longer utterances, the effects of the F0 contour tend to be weaker. We further find that...

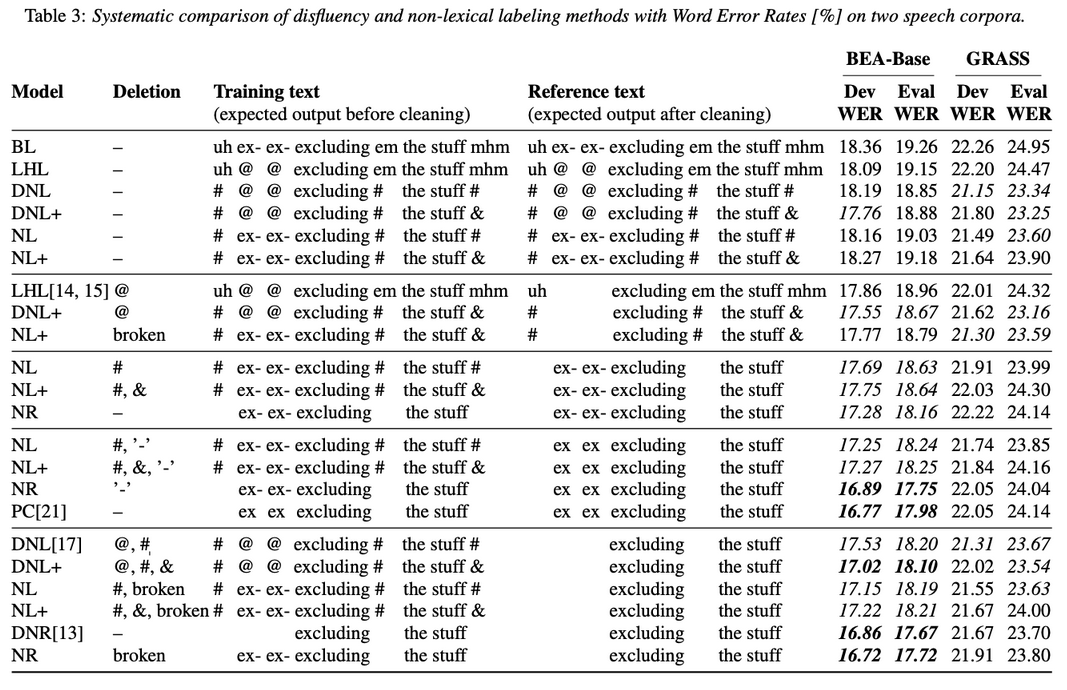

Abstract: Spontaneous speech contains a significant amount of disfluencies and non-lexical sounds (e.g., backchannels, filled pauses), which are often difficult to transcribe. Disfluency labeling for automatic speech recognition (ASR) aims at editing these phenomena in the transcription to improve overall recognition accuracy. Such labeling techniques typically delete nonlexical/disfluent labels from the prediction, where classical ASR techniques either ignore or treat them as lexical items. Our results, obtained by systematic comparison and detailed evaluation of various disfluency labeling methods on two different language conversational corpora, suggest that neither of the previous approaches are optimal. We propose to distinguish between filled pauses and meaningful conversational grunts and show that keeping the non-lexical labels is not only possible but as low as 7% label error rates can be achieved for highly important categories (including ’mhm’) while preserving a decent WER. Index Terms: end-to-end speech recognition, disfluency, conversational speech, filled pauses, Hungarian, Austrian German...

In future wireless networks, the availability of information on the position of mobile agents and the propagation environment can enable new services and increase the throughput and robustness of communications. Multipath-based simultaneous localization and mapping (SLAM) aims at estimating the position of agents and reflecting features in the environment by exploiting the relationship between the local geometry and multipath components (MPCs) in received radio signals. Existing multipath-based SLAM methods preprocess received radio signals using a channel estimator. The channel estimator lowers the data rate by extracting a set of dispersion parameters for each MPC. These parameters are then used as measurements for SLAM. Bayesian estimation for multipath-based SLAM is facilitated by the lower data rate. However, due to finite resolution capabilities limited by signal bandwidth, channel estimation is prone to errors and MPC parameters may be extracted incorrectly and lead to a reduced SLAM performance. We propose a multipath-based SLAM...

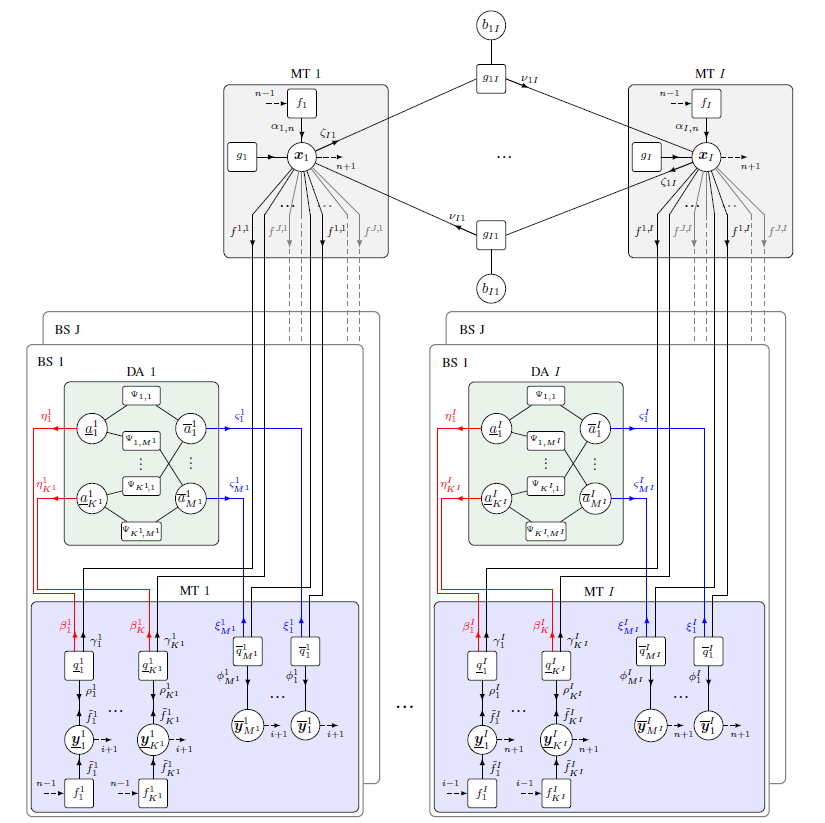

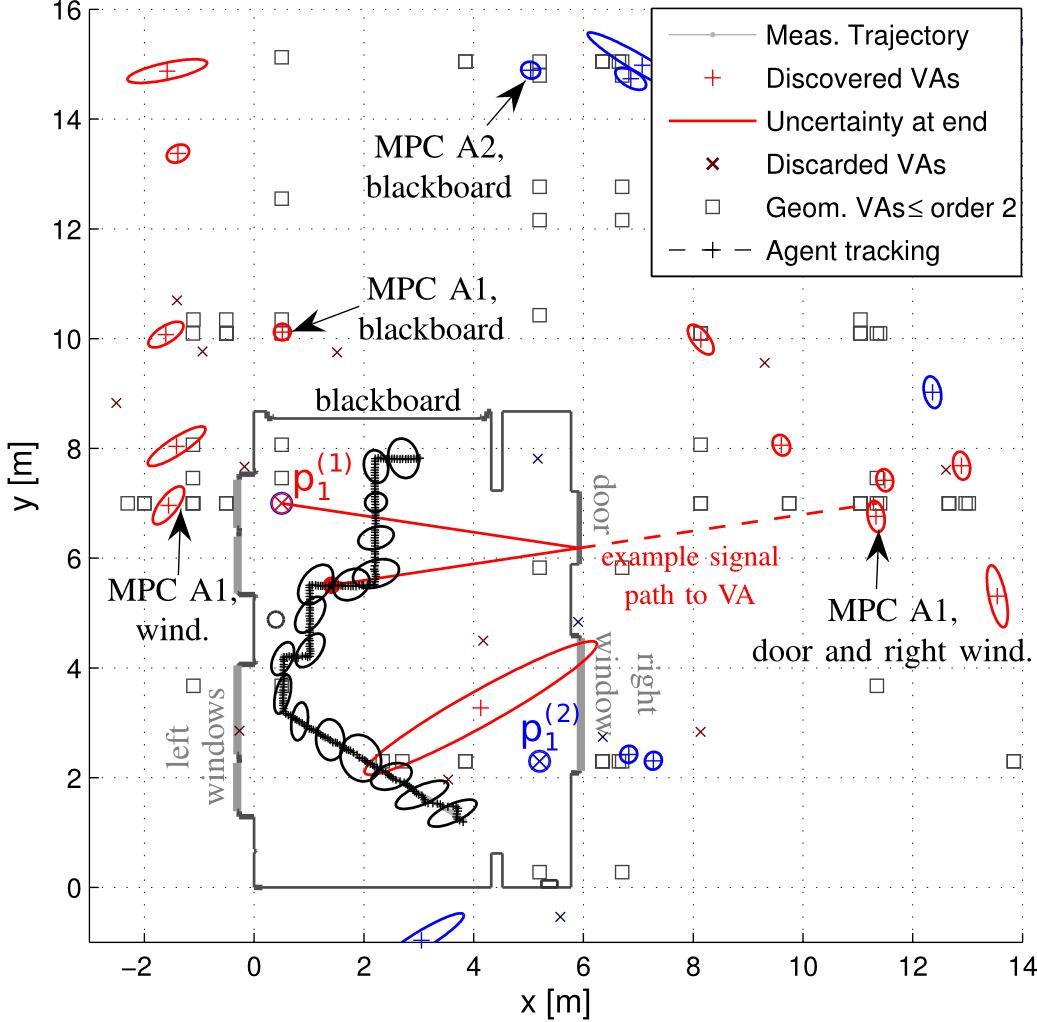

Multipath-based simultaneous localization and mapping (MP-SLAM) is a promising approach in wireless networks for obtaining position information of transmitters and receivers as well as information on the propagation environment. MP-SLAM models specular reflections of radio frequency (RF) signals at flat surfaces as virtual anchors (VAs), the mirror images of base stations (BSs). Conventional methods for MP-SLAM consider a single mobile terminal (MT) which has to be localized. The availability of additional MTs paves the way for utilizing additional information in the scenario. Specifically enabling MTs to exchange information allows for data fusion over different observations of VAs, made by different MTs, and cooperative localization. Furthermore, an inertial measurement unit (IMU) was integrated as an additional sensor for each MT unlocking additional information for orientation and state transition estimation allowing to cope with complex trajectories. Utilizing this additional information enables more robust mapping and higher localization accuracy. The paper was accepted...

This paper investigates the prosody of sentences elicited in three Information Structure (IS) conditions: all-new, theme-rheme and rhematic focus-background. The sentences were produced by 18 speakers of Egyptian Arabic (EA). This is the first quantitative study to provide a comprehensive analysis of holistic f0 contours (by means of GAMM) and configurations of f0, duration and intensity (by means of FPCA) associated with the three IS conditions, both across and within speakers. A significant difference between focus-background and the other information structure conditions was found, but also strong inter-speaker variation in terms of strategies and the degree to which these strategies were applied. The results suggest that post-focus register lowering and the duration of the stressed syllables of the focused and the utterance-final word are more consistent cues to focus than a higher peak of the focus accent. In addition, some independence of duration and intensity from f0 could be identified....

I’m happy to announce the publication of a special issue of the Journal of Advances in Information Fusion (JAIF), which I have guest edited together with my collaborator Florian Meyer of UCSD! It contains the paper “Multipath-Based SLAM for Non-Ideal Reflective Surfaces Exploiting Multiple-Measurements” written by members of our research team: Lukas Wielandner, Alexander Venus, Thomas Wilding, and myself. If you are interested have a look here.

We present a factor graph formulation and particlebased sum-product algorithm for robust localization and tracking in multipath-prone environments. The proposed sequential algorithm jointly estimates the mobile agent’s position together with a time-varying number of multipath components (MPCs). The MPCs are represented by “delay biases” corresponding to the offset between line-of-sight (LOS) component delay and the respective delays of all detectable MPCs. The delay biases of the MPCs capture the geometric features of the propagation environment with respect to the mobile agent. Therefore, they can provide position-related information contained in the MPCs without explicitly building a map of the environment. We demonstrate that the position-related information enables the algorithm to provide high-accuracy position estimates even in fully obstructed line-of-sight (OLOS) situations. Using simulated and real measurements in different scenarios we demonstrate the proposed algorithm to significantly outperform state-of-the-art multipath-aided tracking algorithms and show that the performance of our algorithm constantly attains...

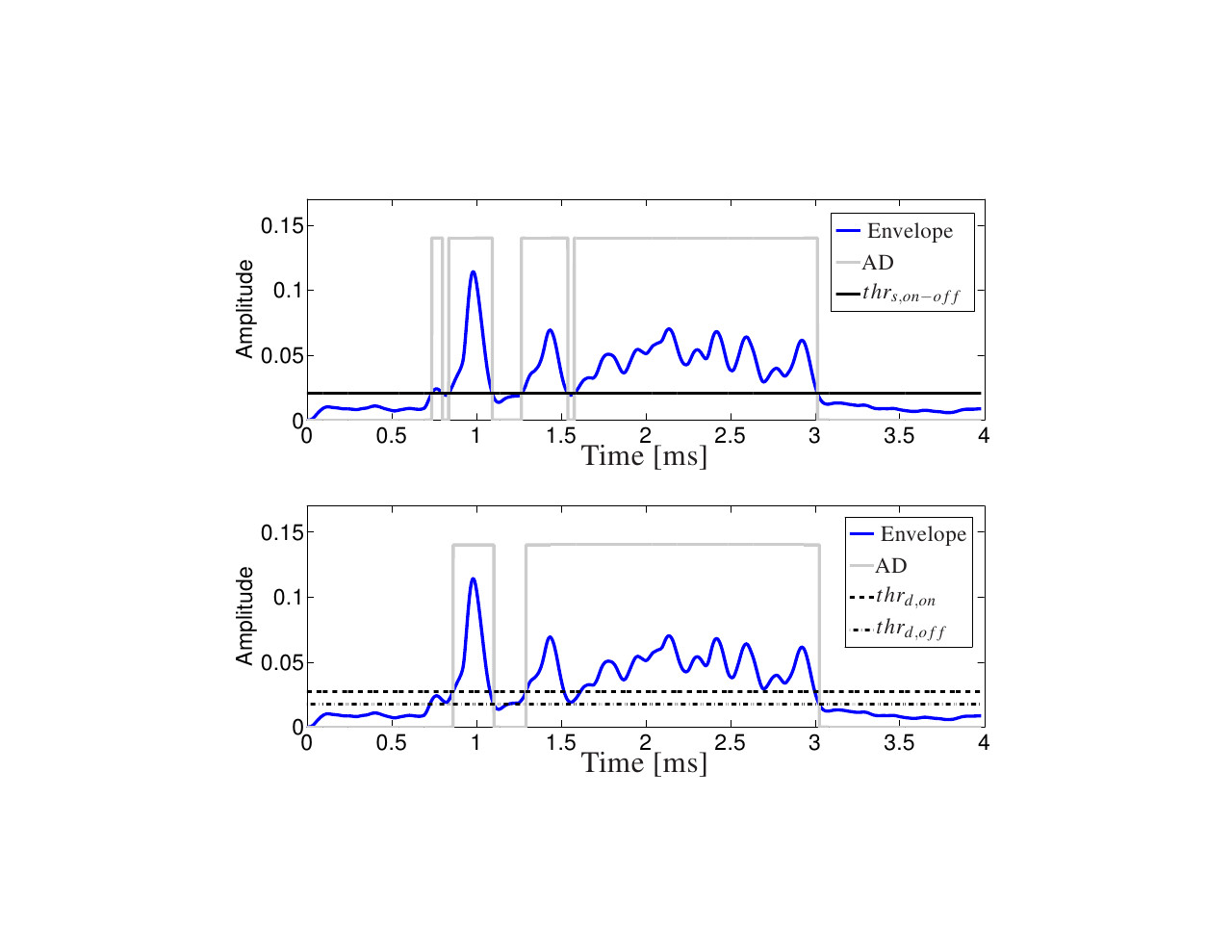

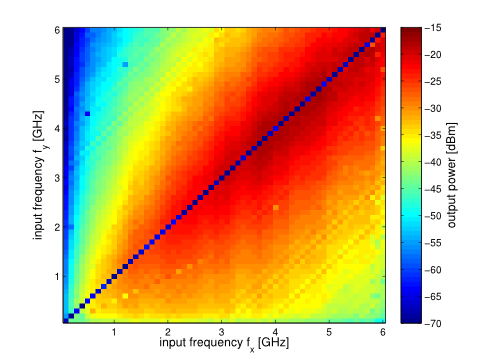

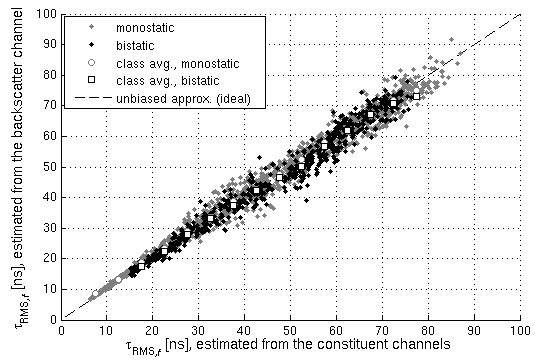

In this paper, we present an iterative algorithm that detects and estimates the specular components (SCs) and estimates the dense component (DC) of single-input—multipleoutput (SIMO) ultra-wide-band (UWB) multipath channels. Specifically, the algorithm super-resolves the SCs in the delay–angle-of-arrival domain and estimates the parameters of a parametric model of the delay-angle power spectrum characterizing the DC. Channel noise is also estimated. In essence, the algorithm solves the problem of estimating spectral lines (the SCs) in colored noise (generated by the DC and channel noise). Its design is inspired by the sparse Bayesian learning (SBL) framework. As a result the iteration process contains a threshold condition that determines whether a candidate SC shall be retained or pruned. By relying to results from extreme-value analysis the threshold of this condition is suitably adapted to ensure a prescribed probability of detecting spurious SCs. Studies using synthetic and real channel measurement data demonstrate the virtues...

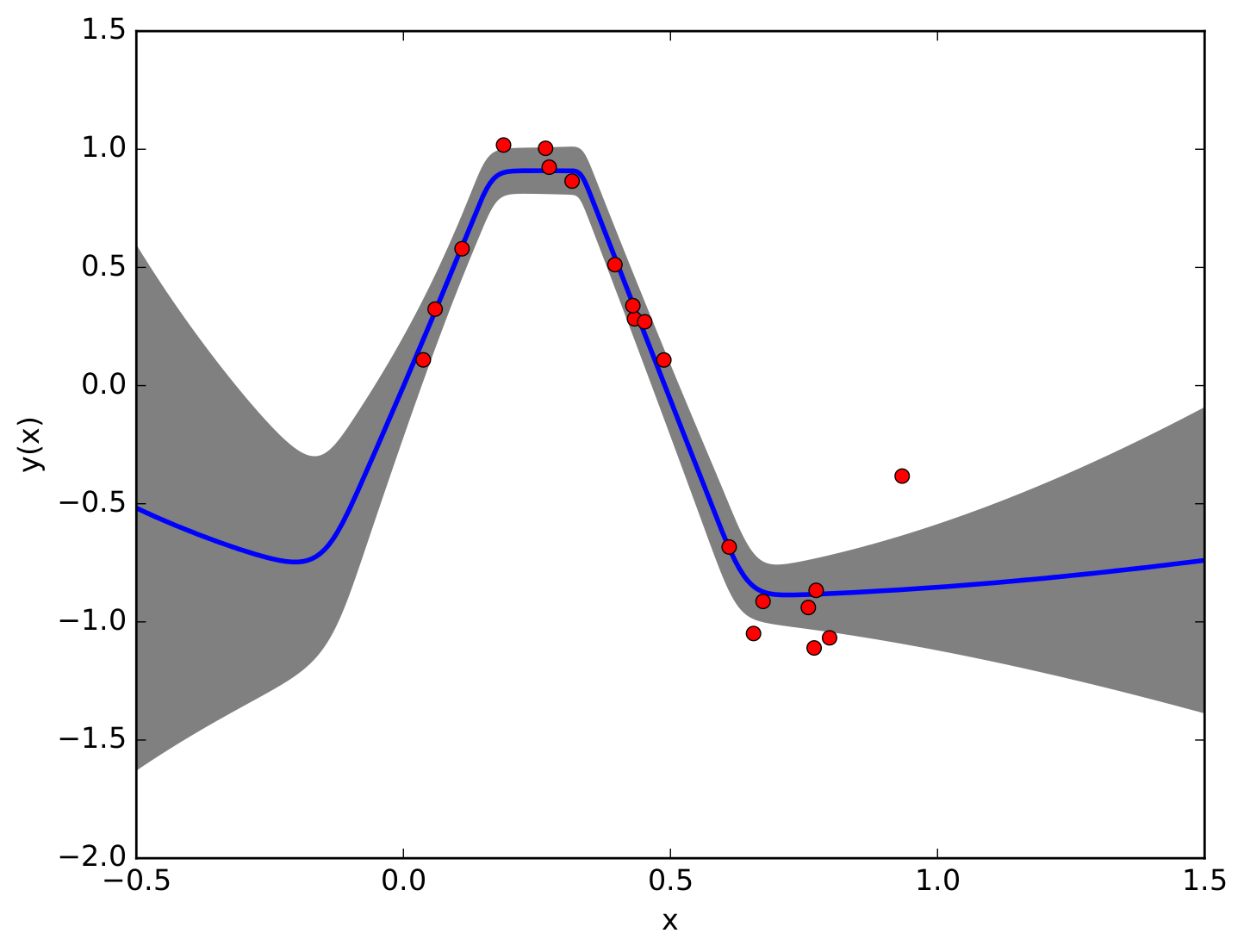

Deep ensembles have shown remarkable empirical success in quantifying uncertainty, albeit at considerable computational cost and memory footprint. Meanwhile, deterministic single-network uncertainty methods have proven as computationally effective alternatives, providing uncertainty estimates based on distributions of latent representations. While those methods are successful at out-of-domain detection, they exhibit poor calibration under distribution shifts. In this work, we propose a method that provides calibrated uncertainty by utilizing particle-based variational inference in function space. Rather than using full deep ensembles to represent particles in function space, we propose a single multi-headed neural network that is regularized to preserve bi-Lipschitz conditions. Sharing a joint latent representation enables a reduction in computational requirements, while prediction diversity is maintained by the multiple heads. We achieve competitive results in disentangling aleatoric and epistemic uncertainty for active learning, detecting out-of-domain data, and providing calibrated uncertainty estimates under distribution shifts while significantly reducing compute and memory requirements.

In this work, we develop a multipath-based simultaneous localization and mapping (SLAM) method that can directly be applied to received radio signals. In existing multipath-based SLAM approaches, a channel estimator is used as a preprocessing stage that reduces data flow and computational complexity by extracting features related to multipath components (MPCs). We aim to avoid any preprocessing stage that may lead to a loss of relevant information. The presented method relies on a new statistical model for the data generation process of the received radio signal that can be represented by a factor graph. This factor graph is the starting point for the development of an efficient belief propagation (BP) method for multipath-based SLAM that directly uses received radio signals as measurements. Simulation results in a realistic scenario with a single-input single-output (SISO) channel demonstrate that the proposed direct method for radio-based SLAM outperforms state-of-the-art methods that rely on a...

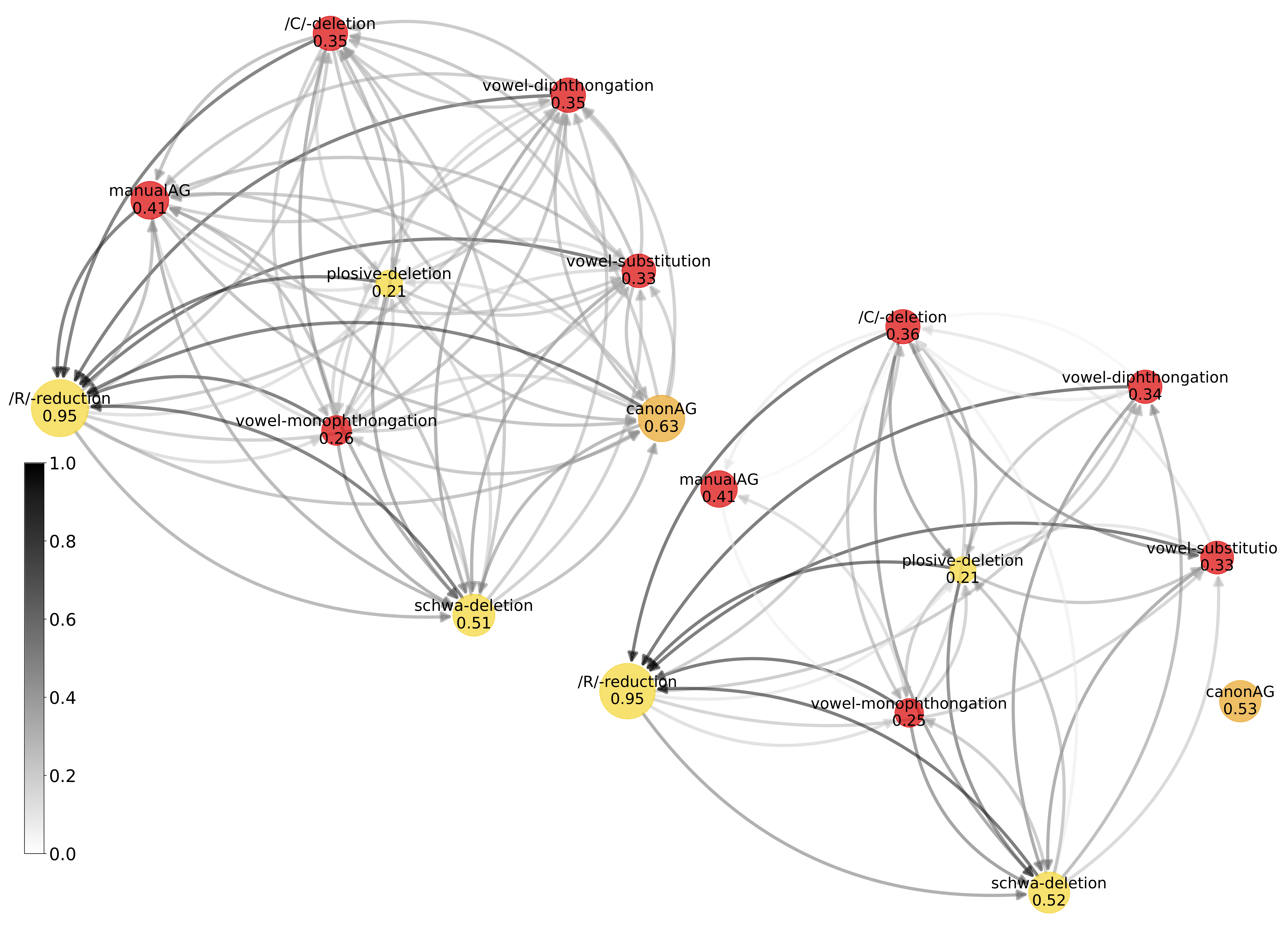

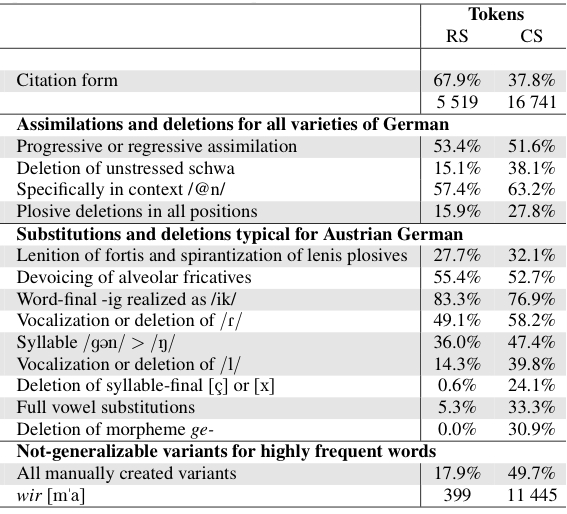

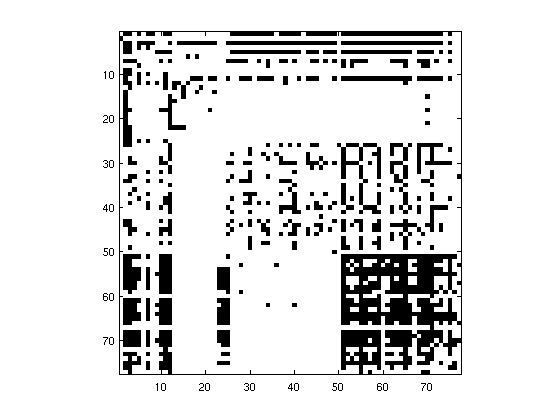

Given the development of automatic speech recognition based techniques for creating phonetic annotations of large speech corpora, there has been a growing interest in investigating the frequencies of occurrence of phonological and reduction processes. Given that most studies have analyzed these processes separately, they did not provide insights about their co-occurrences. This paper contributes with introducing graph theory methods for the analysis of pronunciation variation in GRASS, a large corpus of Austrian German conversational speech. More specifically, we investigate how reduction processes that are typical for spontaneous German in general (figure: yellow) co-occur with phonological processes typical for the Austrian German variety (figure: red). Whereas our concrete findings are of special interest to scientists investigating variation in German, the approach presented opens new possibilities to analyze pronunciation variation in large corpora of across speakers and across speaking styles in any language. This work has been presented at Interspeech 2023, Dublin....

Multipath-based simultaneous localization and mapping (SLAM) is a promising approach to obtain position information of transmitters and receivers as well as information regarding the propagation environments in future mobile communication systems. Usually, specular reflections of the radio signals occurring at flat surfaces are modeled by virtual anchors (VAs) that are mirror images of the physical anchors (PAs). In existing methods for multipath-based SLAM, each VA is assumed to generate only a single measurement. However, due to imperfections of the measurement equipment such as non-calibrated antennas or model mismatch due to roughness of the reflective surfaces, there are potentially multiple multipath components (MPCs) that are associated to one single VA. In this paper, we introduce a Bayesian particle-based sum-product algorithm (SPA) for multipath-based SLAM that can cope with multiplemeasurements being associated to a single VA. Furthermore, we introduce a novel statistical measurement model that is strongly related to the radio signal....

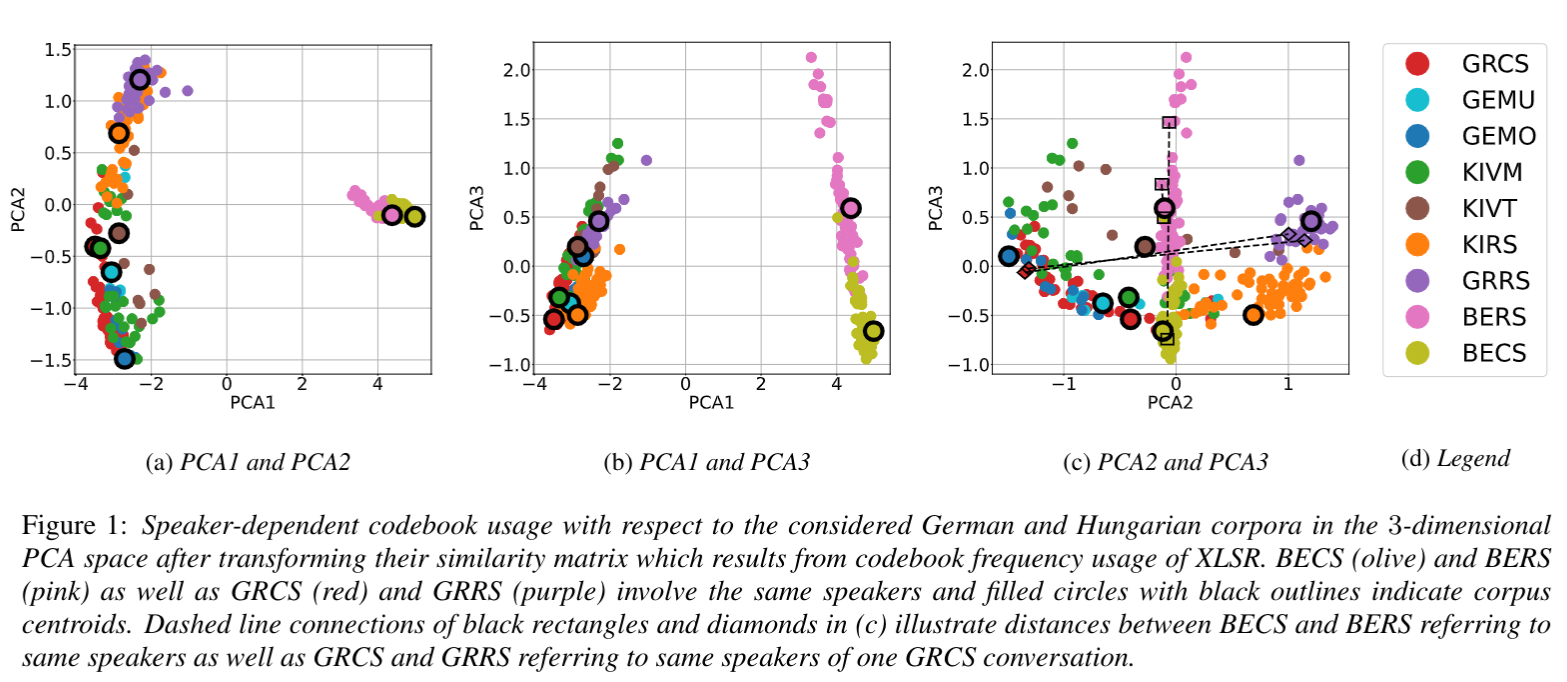

Automatic speech recognition systems based on self-supervised learning yield excellent performance for read, but not so for conversational speech. This work contributes insights into how corpora from different languages and speaking styles are encoded in shared discrete speech representations (based on wav2vec2 XLSR). We analyze codebook entries of data from two languages from different language families (i.e., German and Hungarian), of data from different varieties from the same language (i.e., German and Austrian German) and of data from different speaking styles (read and conversational speech). We find that – as expected – the two languages are clearly separable. With respect to speaking style, conversational Austrian German has the highest similarity with a corpus of similar spontaneity from a different German variety, and speakers differ more among themselves when using different speaking styles than from other speakers of a different region when using the same speaking style. This work is published...

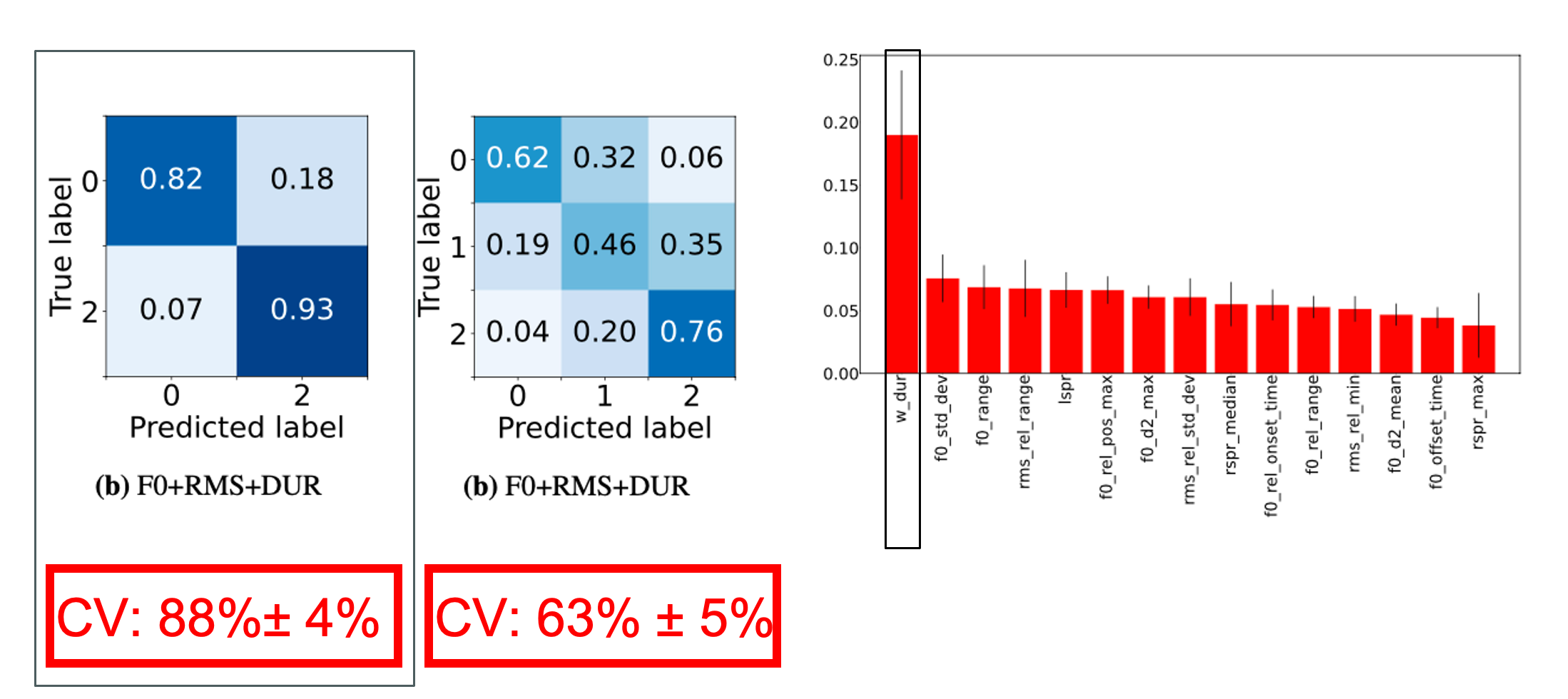

Left: Confusion matrices from experiments with 15 best features (F0+RMS+DUR). Right: Random Forest feature importances for 3 class problem with F0, RMS and DUR. This work focuses on the automatic detection of prominent words in conversational speech. Most tools for prominence detection rely on prosodic features extracted at a syllable- or phone level and their accuracy thus strongly depends on the quality of the given phone-level segmentation. Given the high degree of pronunciation variation in conversational speech, automatic phonetic segmentation is not accurate enough to detect prominence reliably. Here we explore different approaches to prominence detection that require merely a prior word-level segmentation. The first experiment shows that by using word-level prosodic features cross-validation accuracies of 88%+-4% can be reached, and that word duration is the most important feature. The second experiment introduces entropy-based fundamental frequency and intensity features for prominence detection. Our findings suggest that entropy-based, word-level features can...

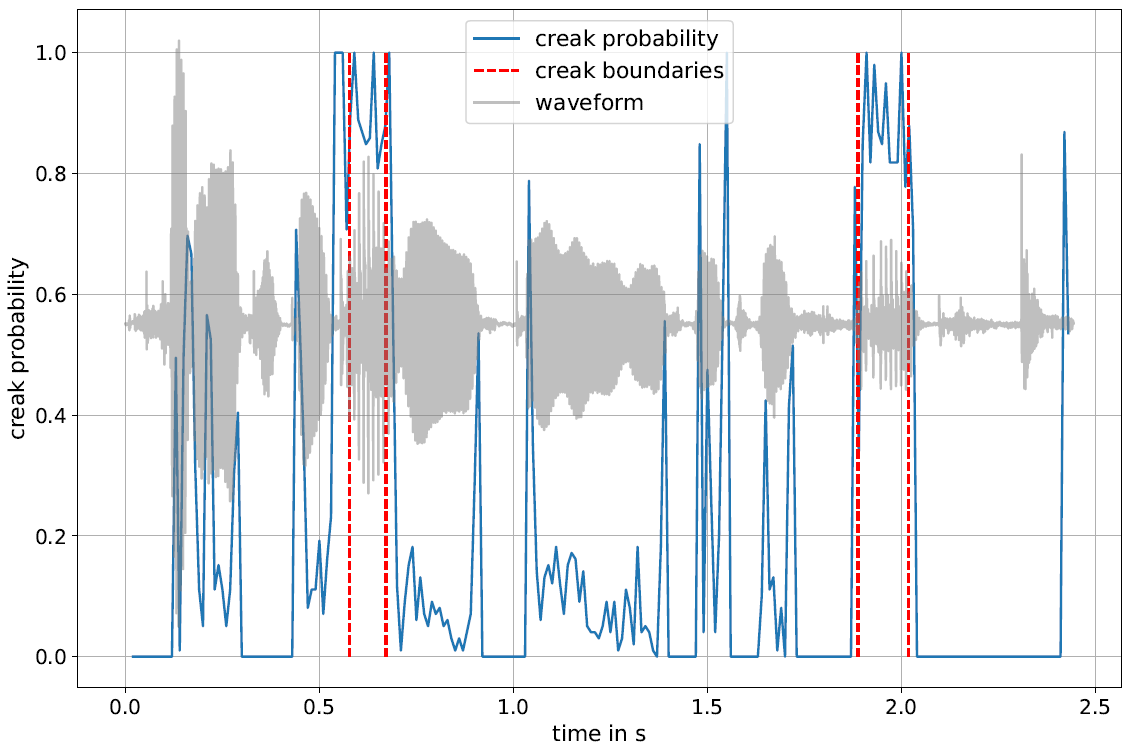

The annotation of creaky voice is relevant for various linguistic topics, from phonological analyses to the investigation of turn-taking, but manual annotation is a time-consuming process. In this paper, we present creapy, a Python-based tool to detect creaky intervals in speech signals. creapy does not require prior phonetic segmentation and supports the export of Praat TextGrid files, allowing for manual revision of the automatically labelled intervals. creapy was developed and tested using Austrian German conversational speech. It was optimised for recall to facilitate a semi-automatic annotation process, and it achieved a better performance for men’s (recall: .79) than for women’s voices (recall: .60). This work by Michael Paierl and Thomas Röck is accepted for presentation at the 20th International Congress of Phonetic Sciences – ICPhS 2023. To use creapy, checkout this repository.

Physics-informed neural networks are a deep learning approach to solving differential equations given only information about the initial and boundary conditions. PINNs are easy to implement and have many desirable properties, such as being mesh-free. Unfortunately, it has been shown that training PINNs is not so straightforward - convergence problems often arise when simulating dynamical systems with high-frequency components, chaotic or turbulent behavior. In this work, we have focused on understanding the underlying reasons for the difficulties in training PINNs by performing experiments on the double pendulum. Our results show that PINNs are not sensitive to perturbations in the initial condition. Instead, the PINN optimization consistently converges to physically correct solutions that only marginally violate the initial condition, but diverge significantly from the desired solution due to the chaotic nature of the system. We hypothesize that the PINNs “cheat” by shifting the initial conditions to values that correspond to physically...

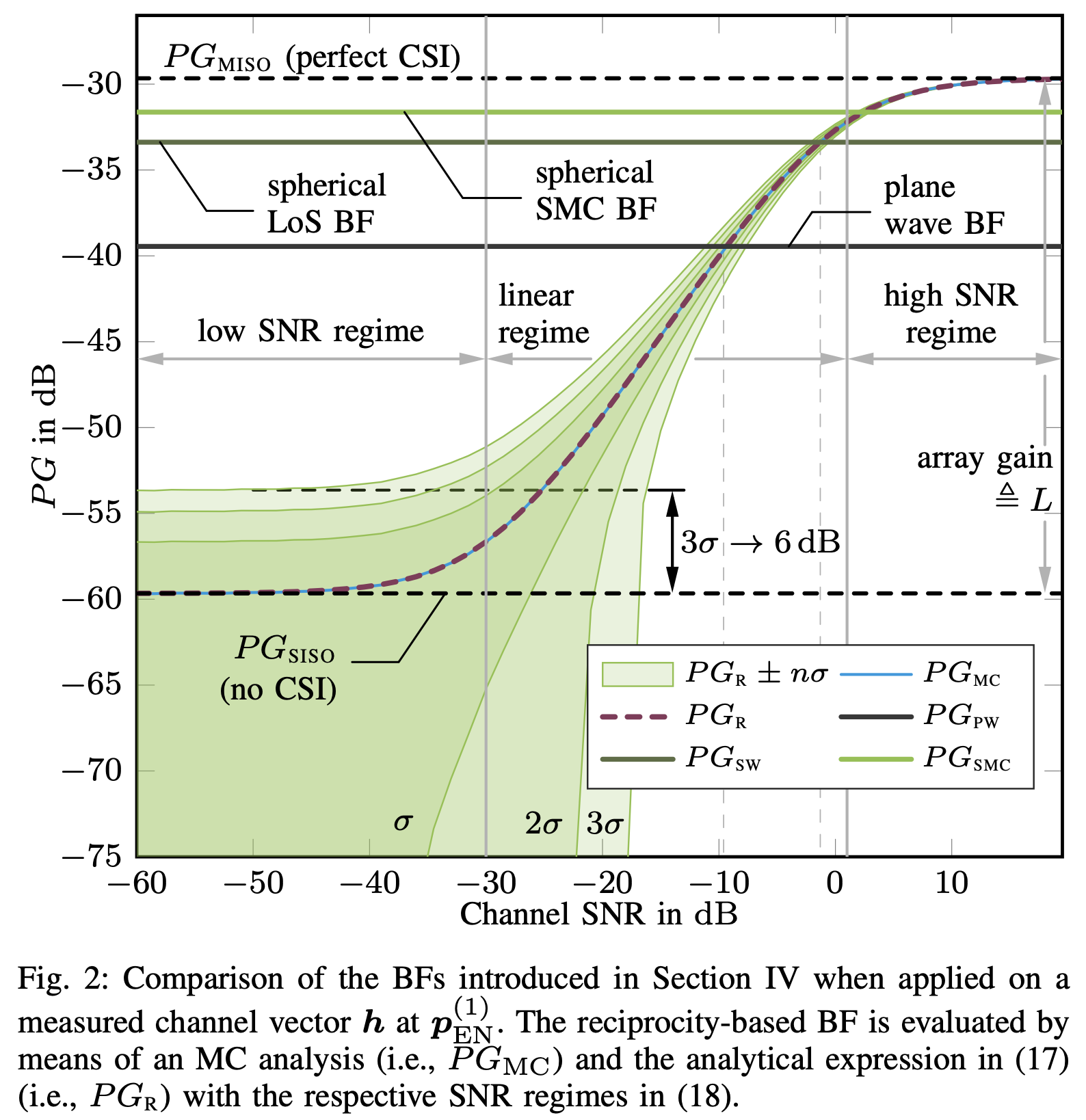

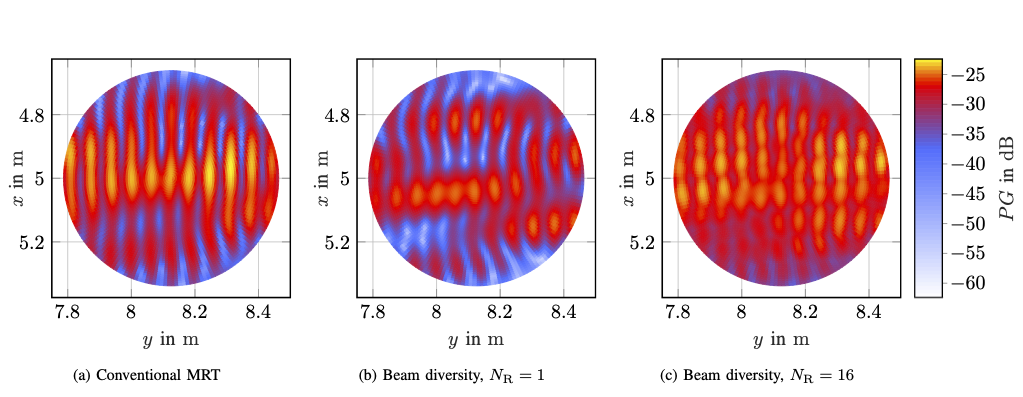

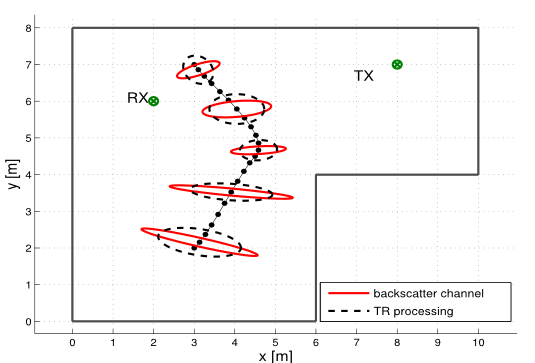

Massive antenna arrays form physically large apertures with a beam-focusing capability, leading to outstanding wireless power transfer (WPT) efficiency paired with low radiation levels outside the focusing region. However, leveraging these features requires accurate knowledge of the multipath propagation channel and overcoming the (Rayleigh) fading channel present in typical application scenarios. For that, reciprocity-based beamforming is an optimal solution that estimates the actual channel gains from pilot transmissions on the uplink. But this solution is unsuitable for passive backscatter nodes that are not capable of sending any pilots in the initial access phase. Using measured channel data from an extremely large-scale MIMO (XL-MIMO) testbed, we compare geometry-based planar wavefront and spherical wavefront beamformers with a reciprocity-based beamformer, to address this initial access problem. We also show that we can predict specular multipath components (SMCs) based only on geometric environment information. We demonstrate that a transmit power of 1W is sufficient...

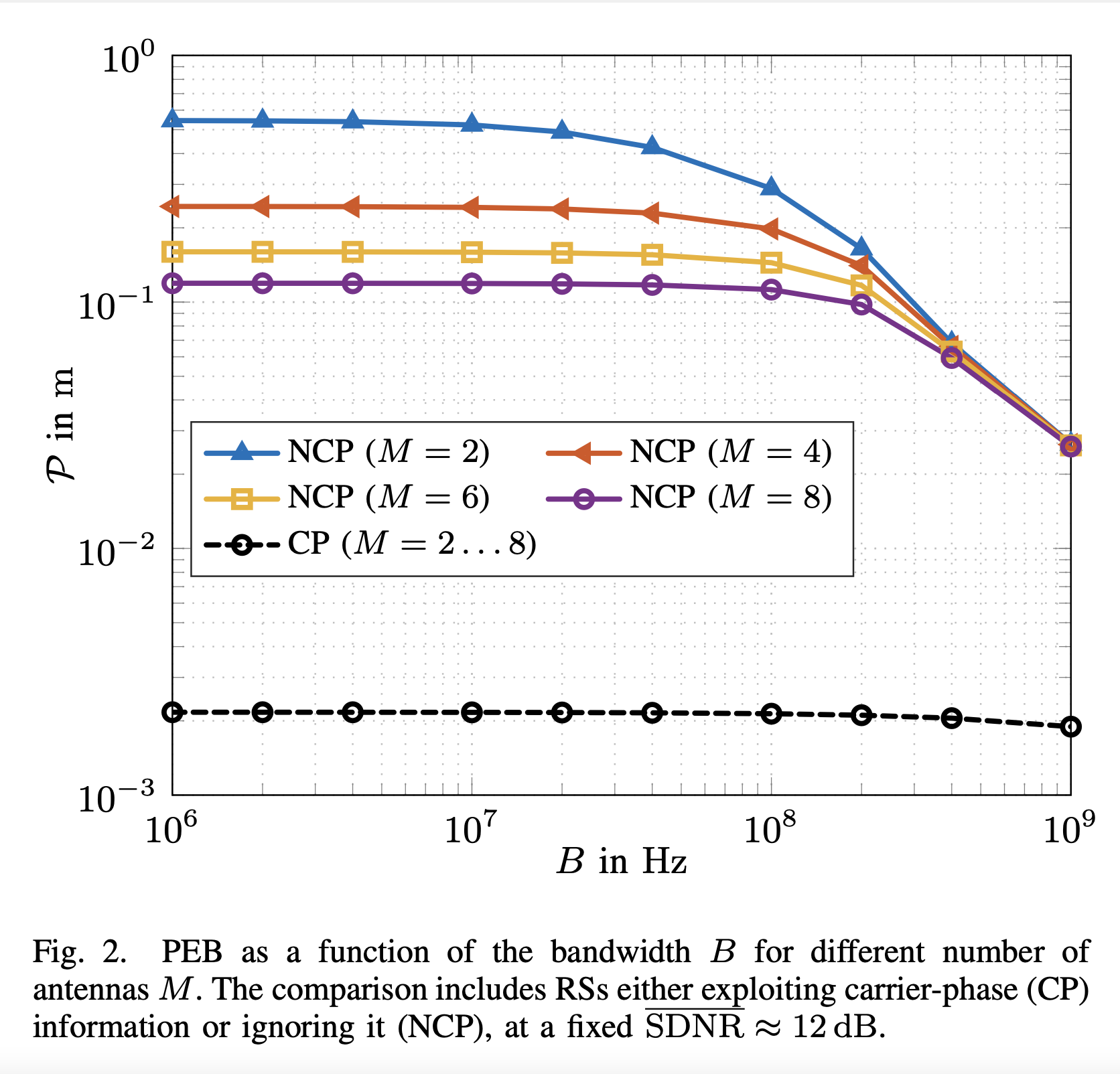

Radio stripes (RSs) is an emerging technology in beyond 5G and 6G wireless networks to support the deployment of cell-free architectures. This joint work investigates the potential use of RSs to enable joint positioning and synchronization in the uplink channel at sub-6 GHz bands. The considered scenario consists of a single-antenna user equipment (UE) that communicates with a network of multiple-antenna RSs distributed over a wide area. The UE is assumed to be unsynchronized to the RSs network, while individual RSs are time- and phase-synchronized. We formulate the problem of joint estimation of position, clock offset, and phase offset of the UE and derive the corresponding maximum-likelihood (ML) estimator, both with and without exploiting carrier phase information. Our team at the SPSC Lab contributed a Fisher information analysis to gain fundamental insights into the achievable performance and to inspect the theoretical lower bounds numerically. Simulation results demonstrate that a promising...

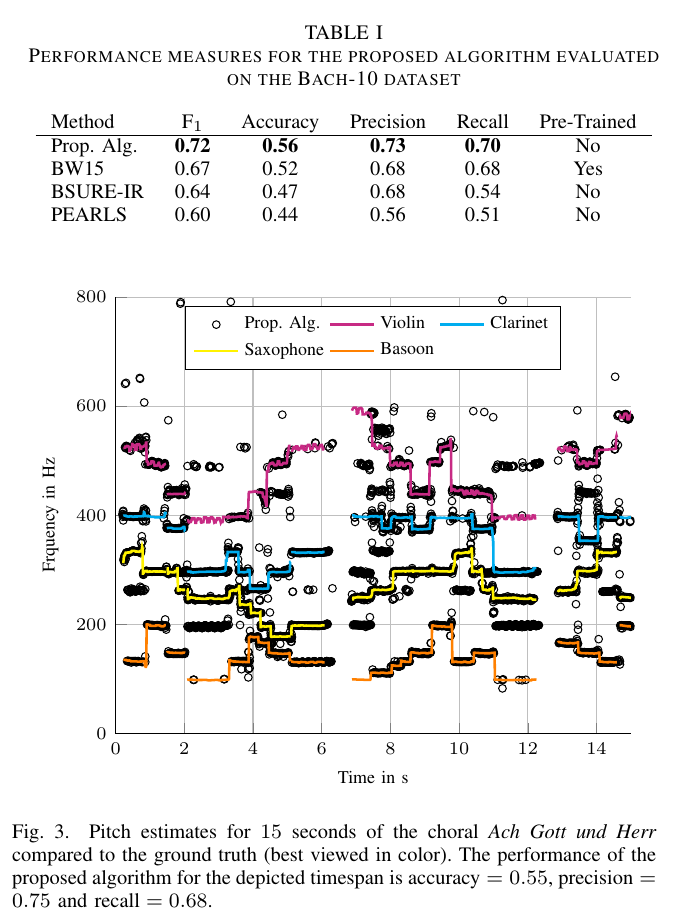

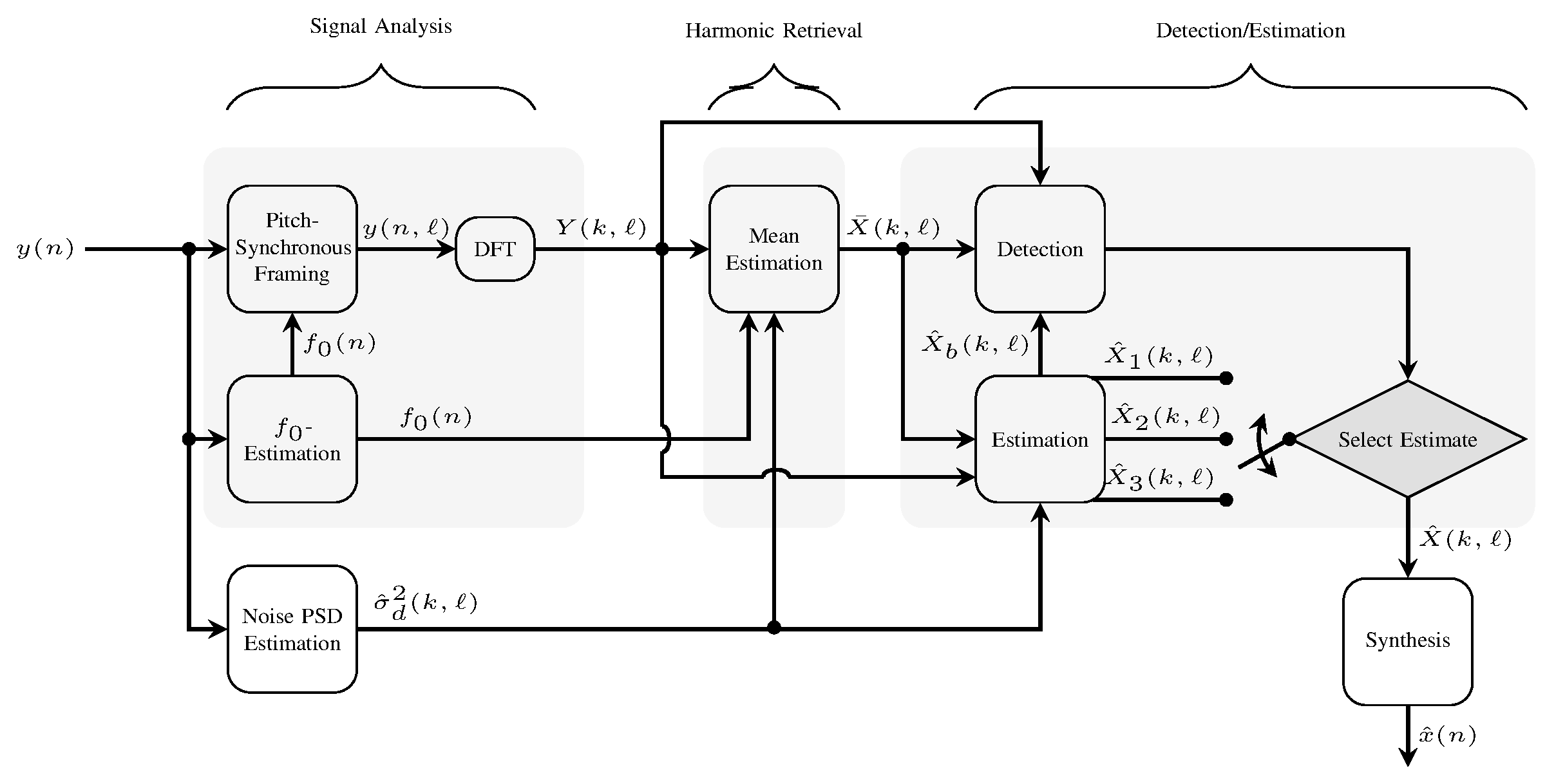

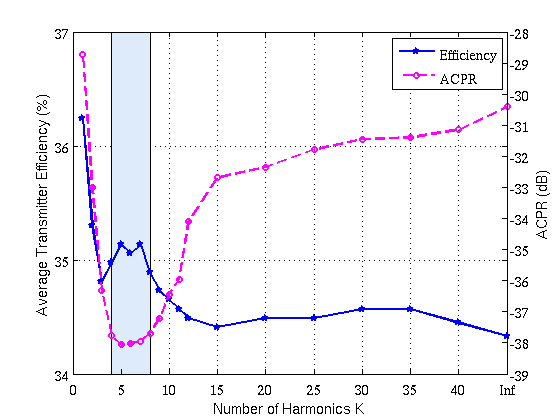

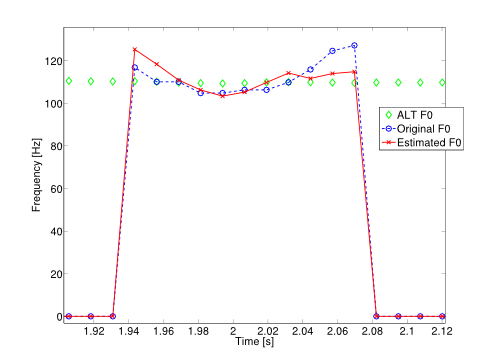

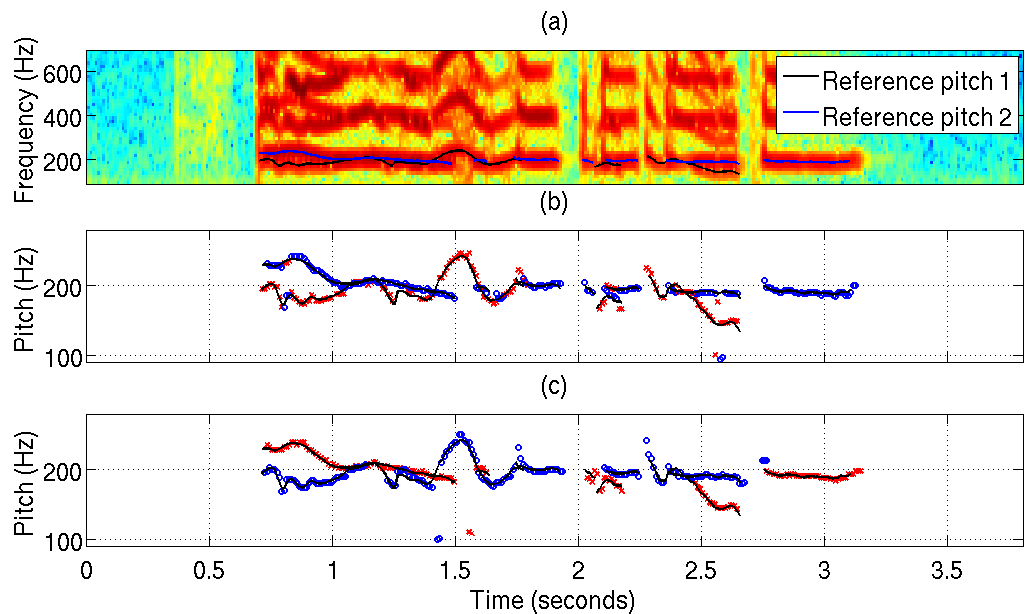

We developed a variational Bayesian inference algorithm for structured line spectra that actively exploits the structure that naturally occurs in many applications to improve estimation performance. For example, consider the audio signal produced by several notes played together in a chord. Each note is a line spectrum with a harmonic structure, i.e. each line is at a multiple of some fundamental frequency - the pitch of the note. When several notes are played together, the result is a linespectrum that is a mixture of several harmonic spectra. By explicitly considering the structure in each harmonic spectrum, our proposed method is able to outperform state-of-the-art multi-pitch estimation methods on the Bach-10 dataset, even machine learning methods pre-trained on the instruments in the dataset. An example of the detected pitch for several seconds of the chorale “Ach Gott und Herr” from the dataset is shown in the figure. Structured line spectra occur...

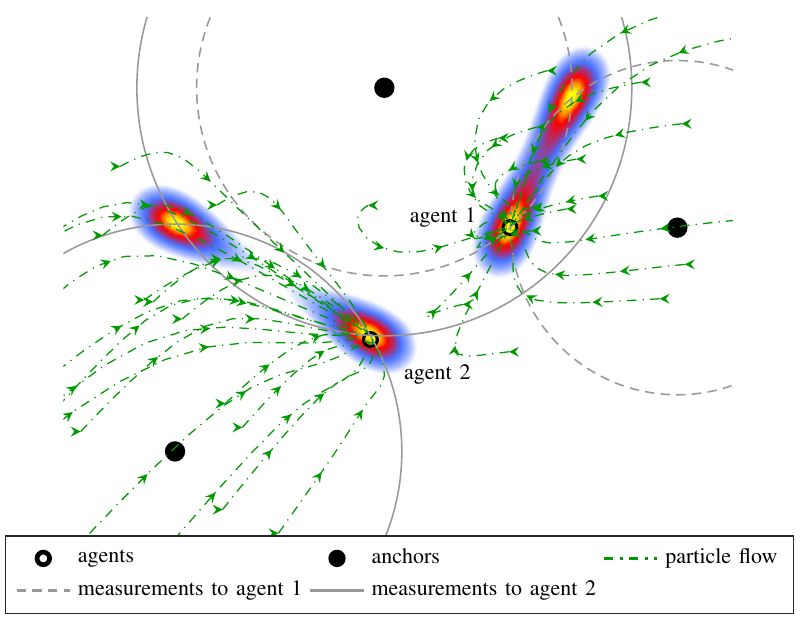

This paper derives the messages of belief propagation (BP) for cooperative localization by means of particle flow, leading to the development of a distributed particle-based message-passing algorithm which avoids particle degeneracy. Our combined particle flow-based BP approach allows the calculation of highly accurate proposal distributions for agent states with a minimal number of particles. It outperforms conventional particle-based BP algorithms in terms of accuracy and runtime. Furthermore, we compare the proposed method to a centralized particle flow-based implementation, known as the exact Daum-Huang filter, and to sigma point BP in terms of position accuracy, runtime, and memory requirement versus the network size. We further contrast all methods to the theoretical performance limit provided by the posterior Cramer-Rao lower bound. Based on three different scenarios, we demonstrate the superiority of the proposed method. Figure: Visualization of the particle flow (dash-dotted green lines) of two cooperating agents in the vicinity of three...

Multipath-based simultaneous localization and mapping (SLAM) is an emerging paradigm for accurate indoor localization with limited resources. The goal of multipath-based SLAM is to detect and localize radio reflective surfaces to support the estimation of time-varying positions of mobile agents. Radio reflective surfaces are typically represented by so-called virtual anchors (VAs), which are mirror images of base stations at the surfaces. In existing multipath-based SLAM methods, a VA is introduced for each propagation path, even if the goal is to map the reflective surfaces. The fact that not every reflective surface but every propagation path is modeled by a VA, complicates a consistent combination “fusion” of statistical information across multiple paths and base stations and thus limits the accuracy and mapping speed of existing multipath-based SLAM methods. In this paper, we introduce an improved statistical model and estimation method that enables data fusion for multipath-based SLAM by representing each surface...

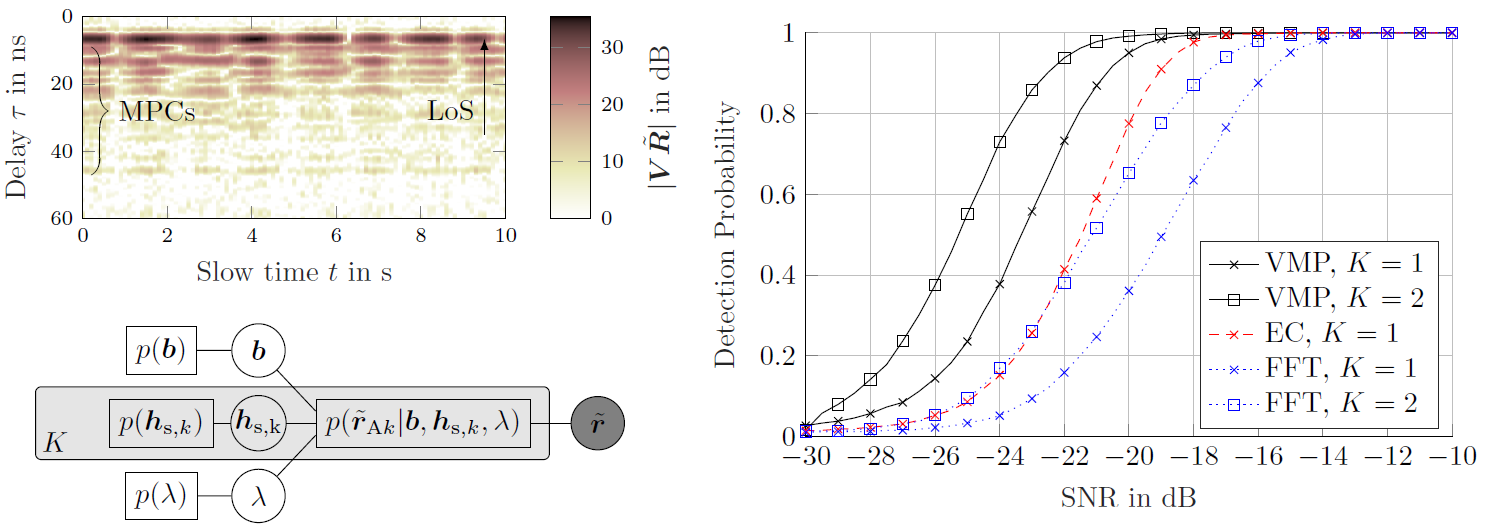

We apply an variational message passing scheme in order to detect the presence of children im parked cars using multistatic UWB radar. To detect a person in a car, we use a structured mean field approach an apply variational message passing to maximize the ELBO, a lower bound on the model evidence. The ELBO is then used to calculate the odds-ratio of the two cases (the car being either empty or occupied). During the inference process, the radar channel and the respiratory chest motion of the target ar estimated, in order to coherently add up all of the energy from the target present in the received signal. Therefore, we make not only use of the direct interaction of the target with the transmitted signal (line-of-sight, LoS), but also of the multipath components (MPCs) that bounce around in the car before interacting with the target, which increases the SNR. Since the...

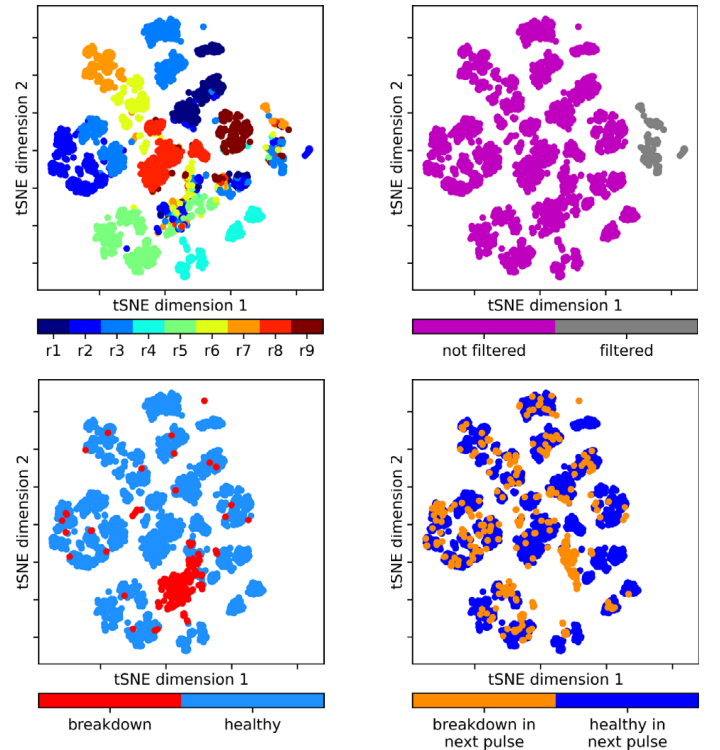

In our latest project with CERN, we used machine learning to analyze breakdowns in a test bench for the CLIC accelerator. In particle accelerators, one of the most prevalent limits on high-gradient operation is the occurrence of vacuum arcs, commonly known as radio frequency (RF) breakdowns. During a breakdown, field enhancement, associated with small deformations on the cavity surface, results in electrical arcs which may irreparably damage the RF cavity surface. In the project, supervised and unsupervised methods were used for data analysis and a breakdown prediction study. ‘Explainable-AI’ made it possible to interpret learned model parameters and to reverse engineer physical properties in the test bench. Similar models could be applied to cancer treatment, light sources, and CERN next generation high energy physics facilities. The work was recently published in the Journal of Physical Review Accelerators and Beams (PRAB) and is available here.

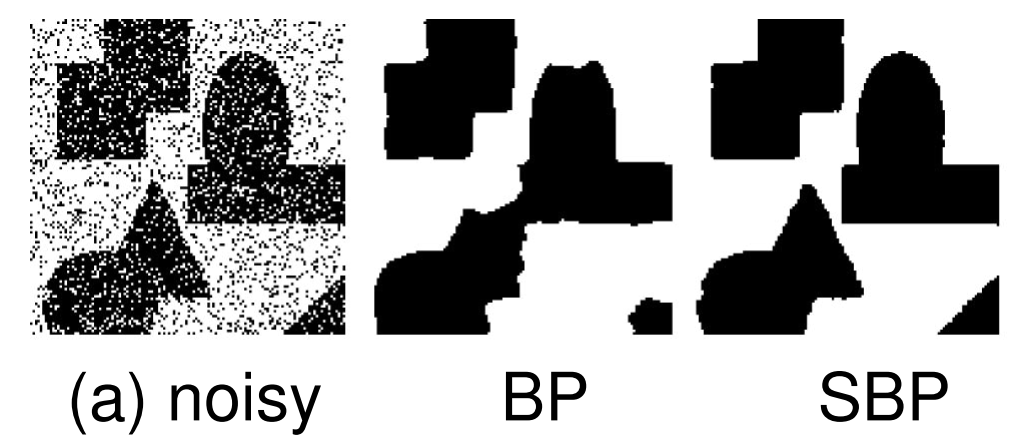

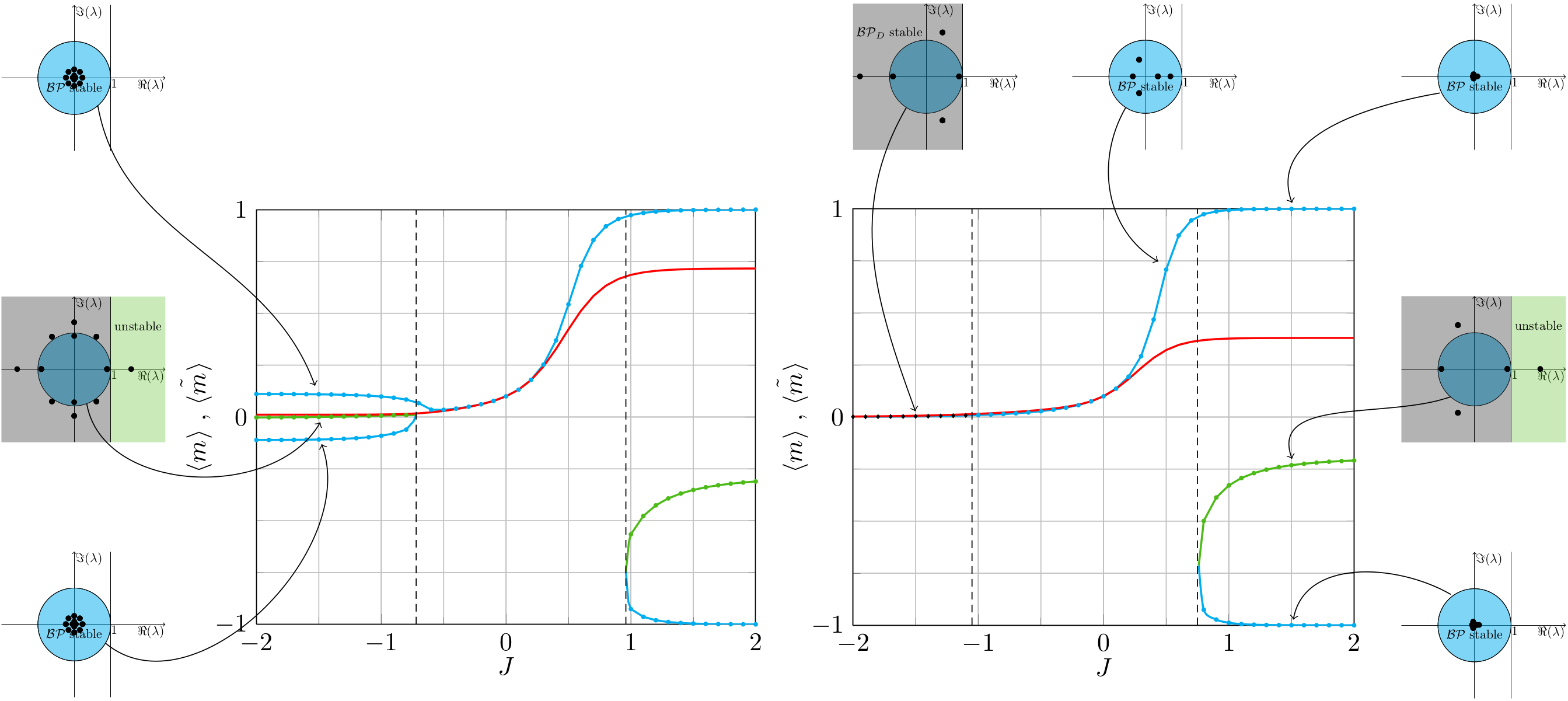

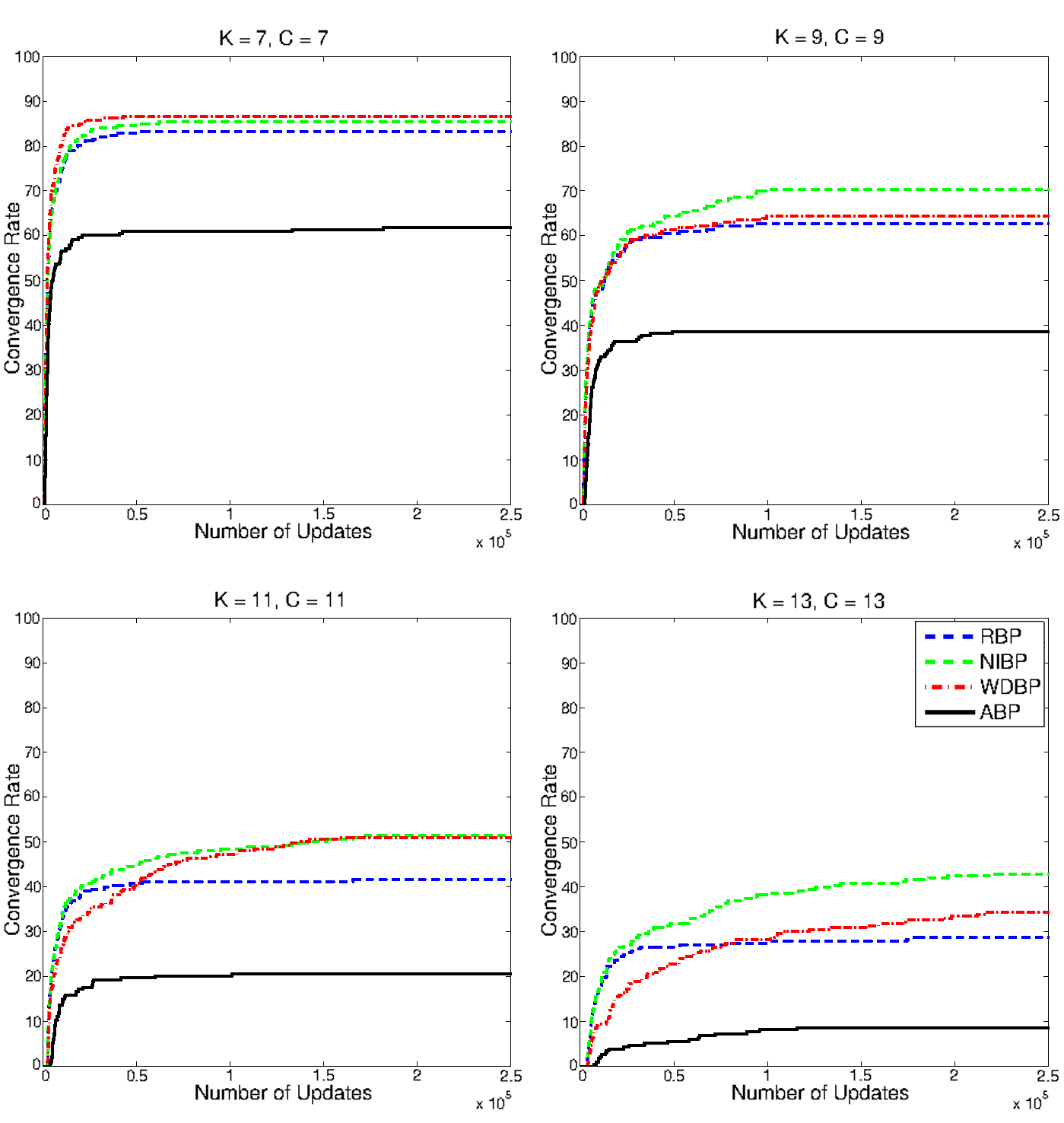

Belief propagation (BP) is a popular method for performing probabilistic inference on graphical models. In this work we show how one can improve the performance of BP by solving a sequence of models that starts with independent variables. We term this approach self-guided belief propagation (SBP) and theoretically demonstrate that SBP finds the global optimum of the Bethe approximation for attractive models where all variables favor the same state .Moreover, we apply SBP to various graphs (random ones, and graphs corresponding to problems in wireless communications and computer vision) and show that (i) SBP is superior in terms of accuracy whenever BP converges, and (ii) SBP obtains a unique, stable, and accurate solution whenever BP does not converge. More information can be found in our [paper][https://ieeexplore.ieee.org/abstract/document/9852264] Figure: Image corrupted with salt and pepper noise. BP reduces the noise but struggles with reconstructing the boundary regions; SBP reduces the noise as...

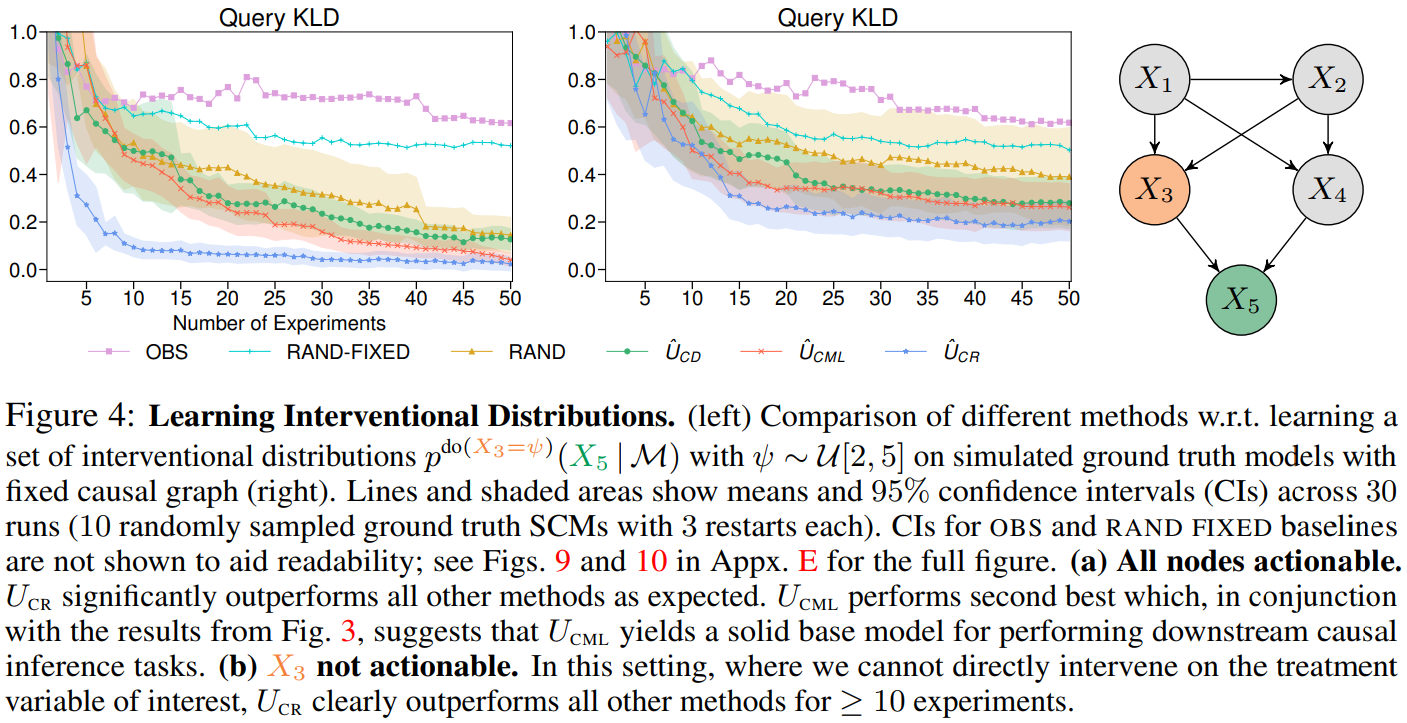

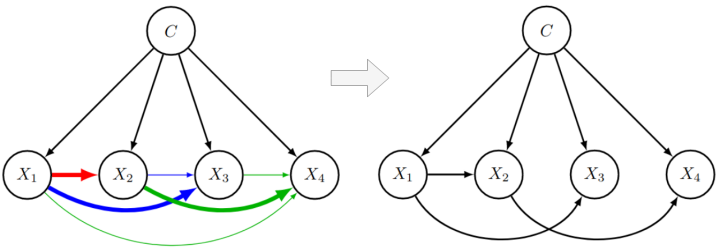

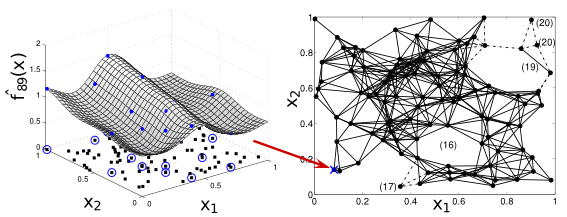

Causal discovery and causal reasoning are classically treated as separate and consecutive tasks: one first infers the causal graph, and then uses it to estimate causal effects of interventions. However, such a two-stage approach is uneconomical, especially in terms of actively collected interventional data, since the causal query of interest may not require a fully-specified causal model. From a Bayesian perspective, it is also unnatural, since a causal query (e.g., the causal graph or some causal effect) can be viewed as a latent quantity subject to posterior inference—other unobserved quantities that are not of direct interest (e.g., the full causal model) ought to be marginalized out in this process and contribute to our epistemic uncertainty. In this work, we propose Active Bayesian Causal Inference (ABCI), a fully-Bayesian active learning framework for integrated causal discovery and reasoning, which jointly infers a posterior over causal models and queries of interest. In our...

Within the REINDEER H2020 project, we investigate the potential of using physically large, or distributed antenna arrays to transmit power wirelessly to batteryless energy neutral (EN) devices. An enabling milestone to make the technology feasible is solving the initial-access problem, i.e., waking up an EN device with unknown channel state information (CSI). One possible approach for initial access is beam sweeping, where the transmit array sweeps beams sequentially according to a predefined codebook to power up an EN device for the first time. However, beam sweeping in indoor scenarios suffers from fading due to severe multipath propagation, possibly originating from unknown objects in the environment. In our paper, we exploit environment-awareness to predict CSI. We establish a simultaneous multibeam transmission which intentionally leverages specular reflections to illuminate an EN device and improve its power budget over what is achievable using a single line-of-sight beam only. We vary the phases of...

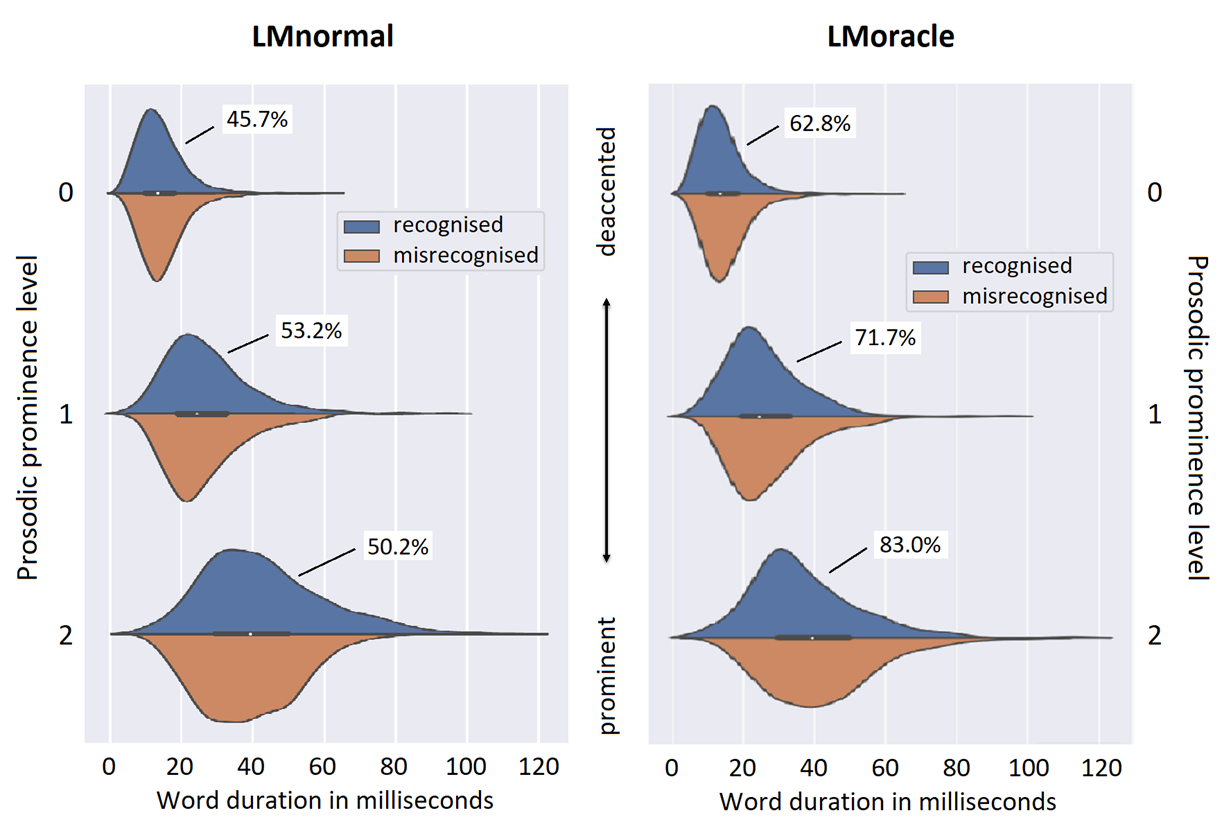

The performance of Automatic Speech Recognition (ASR) systems varies with the speaking style of the data that is to be recognised. Where read speech, voice commands and also broadcast news are nowadays well recognised by standard ASR systems, conversational speech remains to be challenging for multiple reasons. We compared recognition performance of two Language Models (LMs): 1) an ordinary 4-gram “LMnormal” and 2) an oracle 4-gram “LMoracle” that was trained on all the utterances of a corpus of conversational speech (GRASS), including data of the evaluation set. We analysed specific (mis-)recognised word tokens for their prosodic characteristics. In general, high-frequent words are easy to recognise since they are well-known to both the acoustic models and the language model in various contexts. For both LMs, we found that short, high-frequent words are misrecognised more often than longer words that have lower frequency of occurence in our data. Short, high-frequent tokens are...

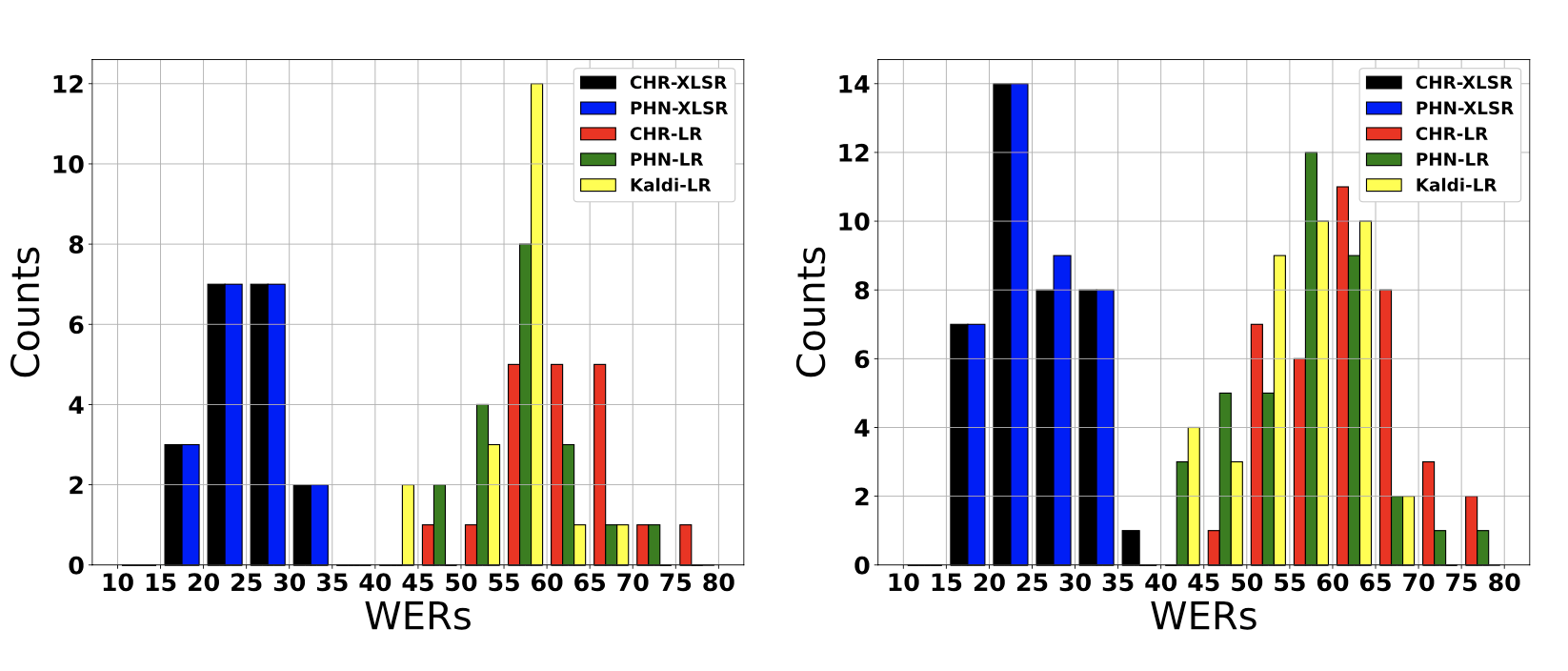

Left: Histogram showing conversation-dependent WERs of low-resource (LR) and data-driven (XLSR) 4-gram models. Right: Histogram showing speaker-dependent WERs of low-resource (LR) and data-driven (XLSR) 4-gram models. We show that data-driven speech recognition systems are effective for Austrian German conversational speech but we still observe a lack of robustness to inter-speaker and inter-conversation variation. Low-resource (LR) speech recognition is challenging since two humans who interact spontaneously with each other introduce complex inter- and intra-speaker variation depending on for instance the speaker’s attitude towards the listener and the speaking task. Recent developments in self-supervision have allowed LR-scenarios to take advantage of large amounts of otherwise unrelated data. In this study, we characterize an (LR) Austrian German conversational task. We begin with a non-pre-trained baseline (Kaldi-LR) and show that fine-tuning of a model pre-trained using self-supervision (XLSR) leads to improvements consistent with those in the literature; this extends to cases where a lexicon...

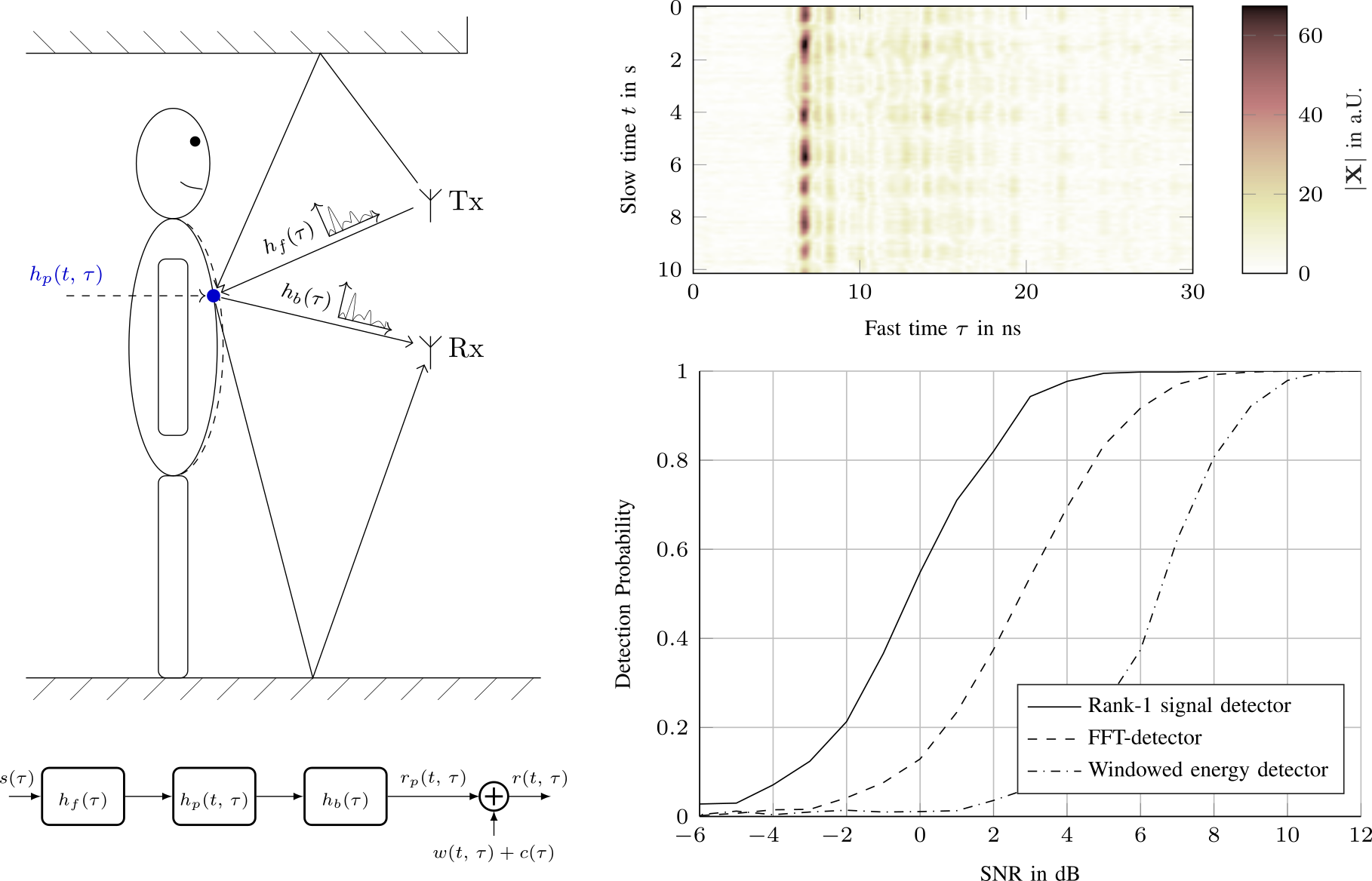

We show, that the UWB nodes of the keyless-access system of a car can be used as radar sensors to detect if the car is occupied. To distinguish the occupant from the static clutter background, we detect the breathing motion of the occupant’s chest. The influence of the chest motion on the received signal is modelled as a backscatter channel. Evaluating this model revealed, that the received signal is the outer product of the breathing motion and channel delay profile. Modelling the breathing motion and channel as gaussian processes with known covariance, the optimal decision criterion is given by the estimator-correlator. Monte-Carlo performance analysis showed a 3dB increase in the detection threshold compared to a FFT-based detector and, thus, confirms our results. This work was presented at the 2021 European Radar Conference and will be published in the accompanying proceedings.

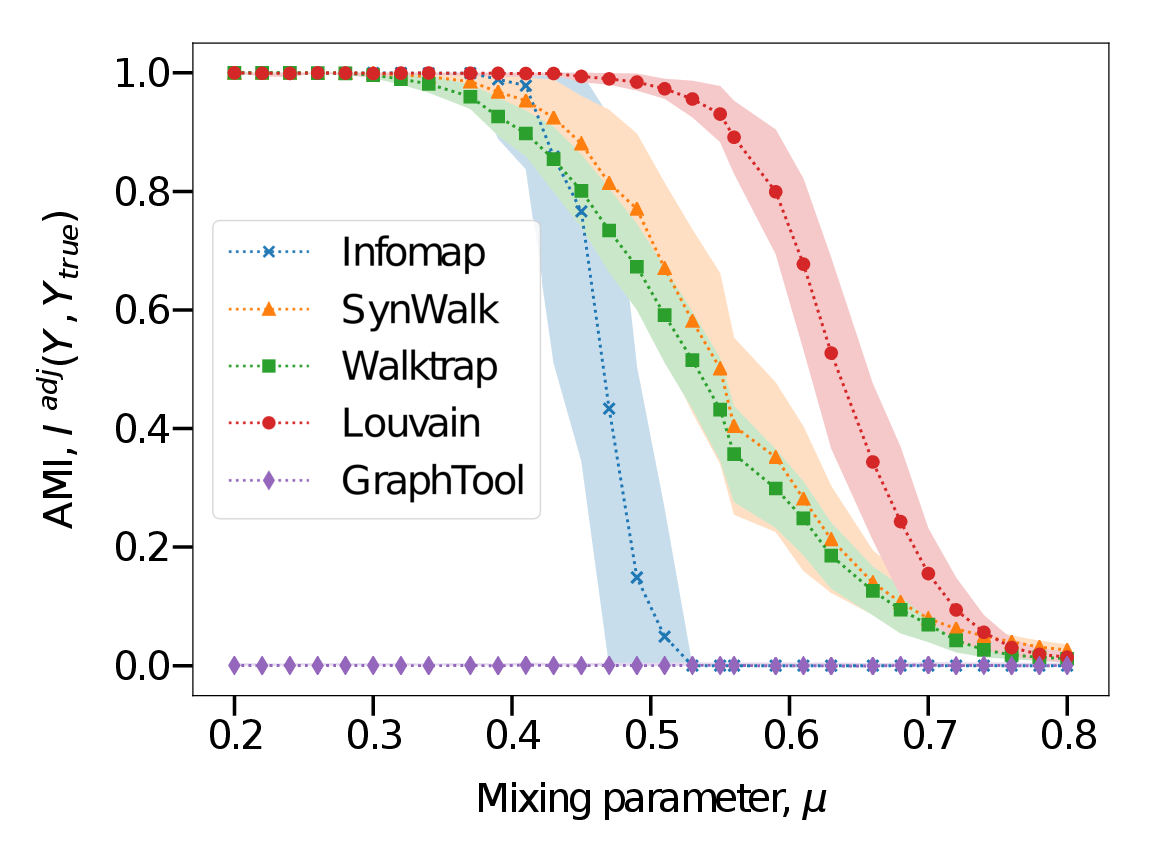

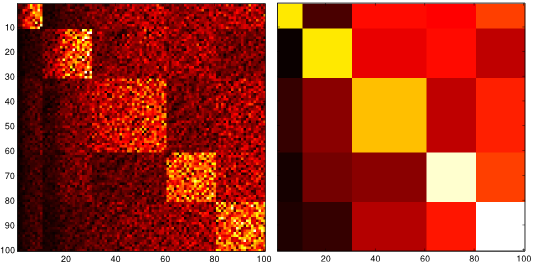

Complex systems, abstractly represented as networks, are ubiquitous in everyday life. Analyzing and understanding these systems requires, among others, tools for community detection. As no single best community detection algorithm can exist, robustness across a wide variety of problem settings is desirable. In this work, we present Synwalk, a random walk-based community detection method. Synwalk builds upon a solid theoretical basis and detects communities by synthesizing the random walk induced by the given network from a class of candidate random walks. We thoroughly validate the effectiveness of our approach on synthetic and empirical networks, respectively, and compare Synwalk’s performance with the performance of Infomap and Walktrap (also random walk-based), Louvain (based on modularity maximization) and stochastic block model inference. Our results indicate that Synwalk performs robustly on networks with varying mixing parameters and degree distributions. We outperform Infomap on networks with high mixing parameter, and Infomap and Walktrap on networks...

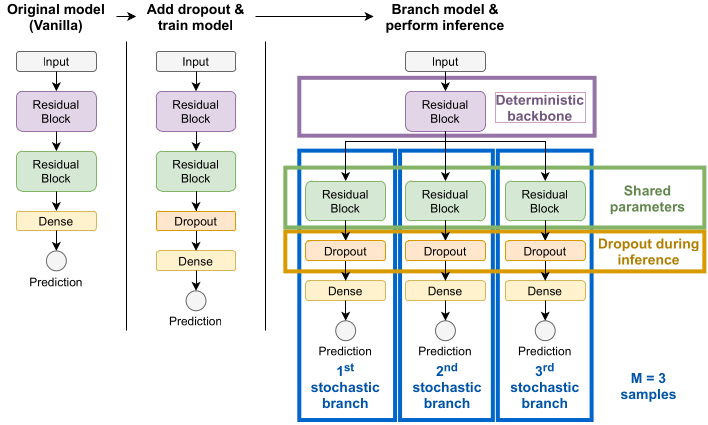

Uncertainty estimation and out-of-distribution robustness are vital aspects of modern deep learning. Predictive uncertainty supplements model predictions and enables improved functionality of downstream tasks including various resource-constrained embedded and mobile applications. Popular examples are virtual reality (VR), augmented reality (AR), sensor fusion, perception, and health applications including fitness indicators, arrhythmia detection, and skin lesion detection. Robust and reliable predictions with uncertainty estimates are increasingly important when operating on noisy in-the-wild data from sensory inputs. A large variety of deep learning architectures have been applied to various tasks with great success in terms of prediction quality, however, producing reliable and robust uncertainty without additional computational and memory overhead remains a challenge. This issue is further aggravated due to the limited computational and memory budget available in typical battery-powered mobile devices. In this paper, we aim to investigate more resource-efficient methods for uncertainty estimation that also provide good performance and robustness under...

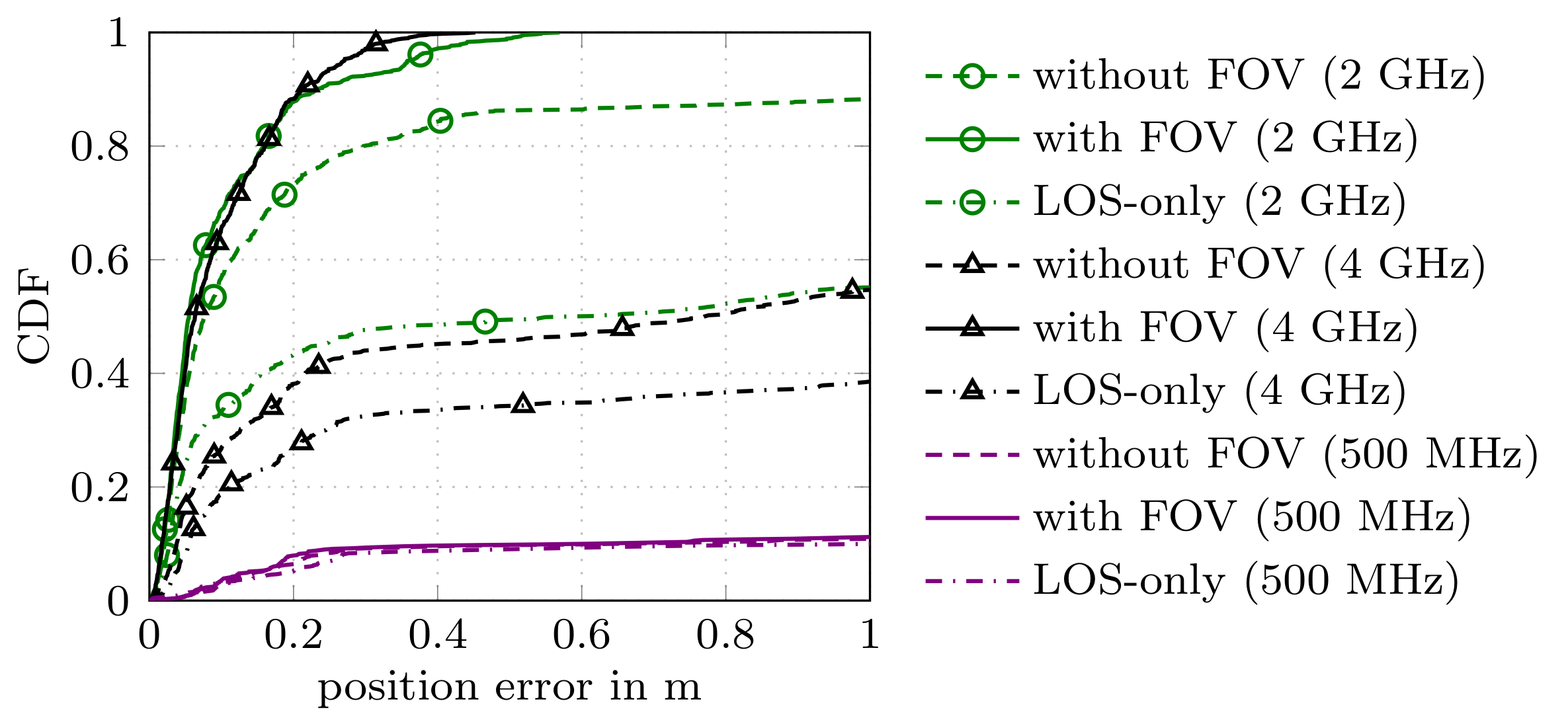

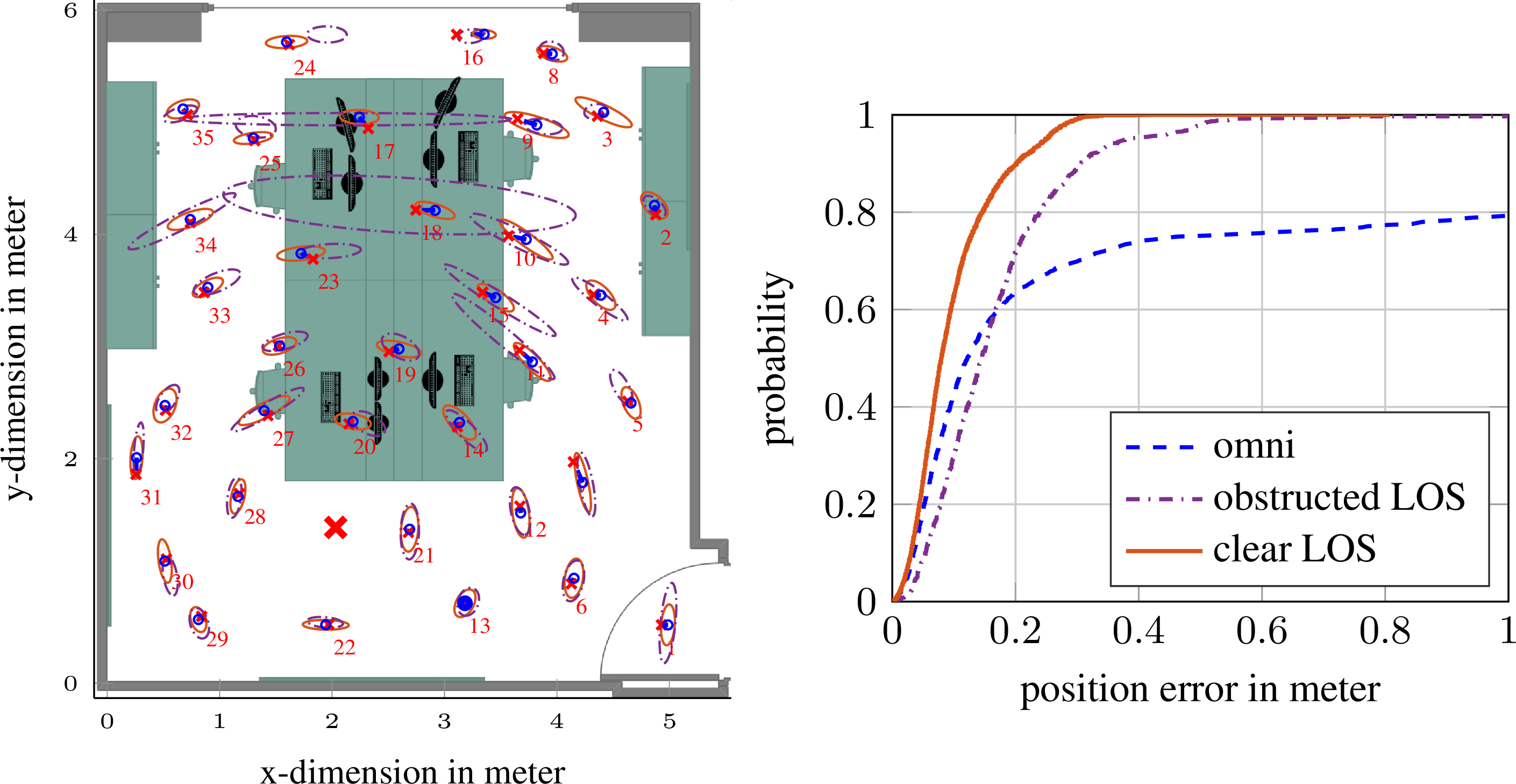

In this work we consider multipath-based positioning and tracking in off-body channels. We analyse the effects introduced by the human body and the resulting effects that are of interest in positioning and tracking based on channel measurements obtained in an indoor scenario. As the signal bandwidth is known to effect the achievable accuracy in positioning, the bandwidth dependency of the field of view (FOV) induced by human body via shadowing and the number of multipath components (MPCs) detected and estimated by a deterministic maximum likelihood (ML) algorithm is investigated. A multipath-based positioning and tracking algorithm is proposed that associates estimated MPC parameters with floor plan features and exploits a human body-dependent FOV function. The proposed algorithm is able to provide accurate position estimates even for an off-body radio channel in a multipath-prone environment with the signal bandwidth found to be a limiting factor. The figure shows the CDFs for the...

In cooperative localization applications, measurement-model related model parameters are often assumed to be known even though they can depend strongly on the environment. This assumption can lead to a reduced localization accuracy due to parameter mismatch. In this paper, we propose an adaptive factor-graph-based algorithm for joint cooperative localization and orientation estimation which inherently estimates all unknown model parameters as well as the measurement uncertainty. We use RSS radio measurements and account for the directivity of the antennas with a parametric antenna pattern. We validate our proposed methods with simulations in a static scenario and show that there is only a small loss in positioning accuracy compared to known model parameters and measurement noise. Figure: This figure shows a FG for joint cooperative localization and model parameter estimation. The left Figure shows the FG where all model parameters are captured in one node whereas the left figure shows how variable...

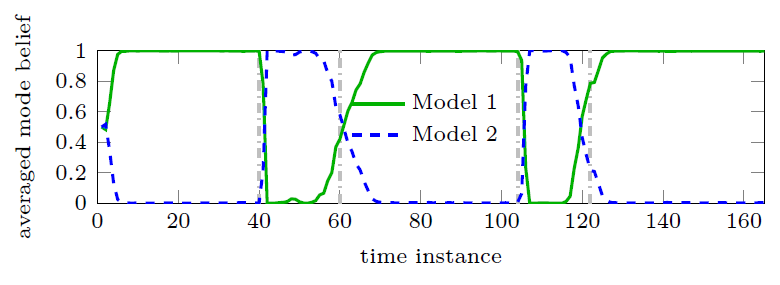

In this work, we present a Bayesian multipath-based simultaneous localization and mapping (SLAM) algorithm that continuously adapts interacting multiple models (IMM) parameters to describe the mobile agent state dynamics. The time-evolution of the IMM parameters is described by a Markov chain and the parameters are incorporated into the factor graph structure that represents the statistical structure of the SLAM problem. The proposed belief propagation (BP)-based algorithm adapts, in an online manner, to time-varying system models by jointly inferring the model parameters along with the agent and map feature states. The performance of the proposed algorithm is finally evaluating with a simulated scenario. Our numerical simulation results show that the proposed multipath-based SLAM algorithm is able to cope with strongly changing agent state dynamics. The full version of this paper can be found on Arxiv and on IEEE Xplore IEEE Xplore.

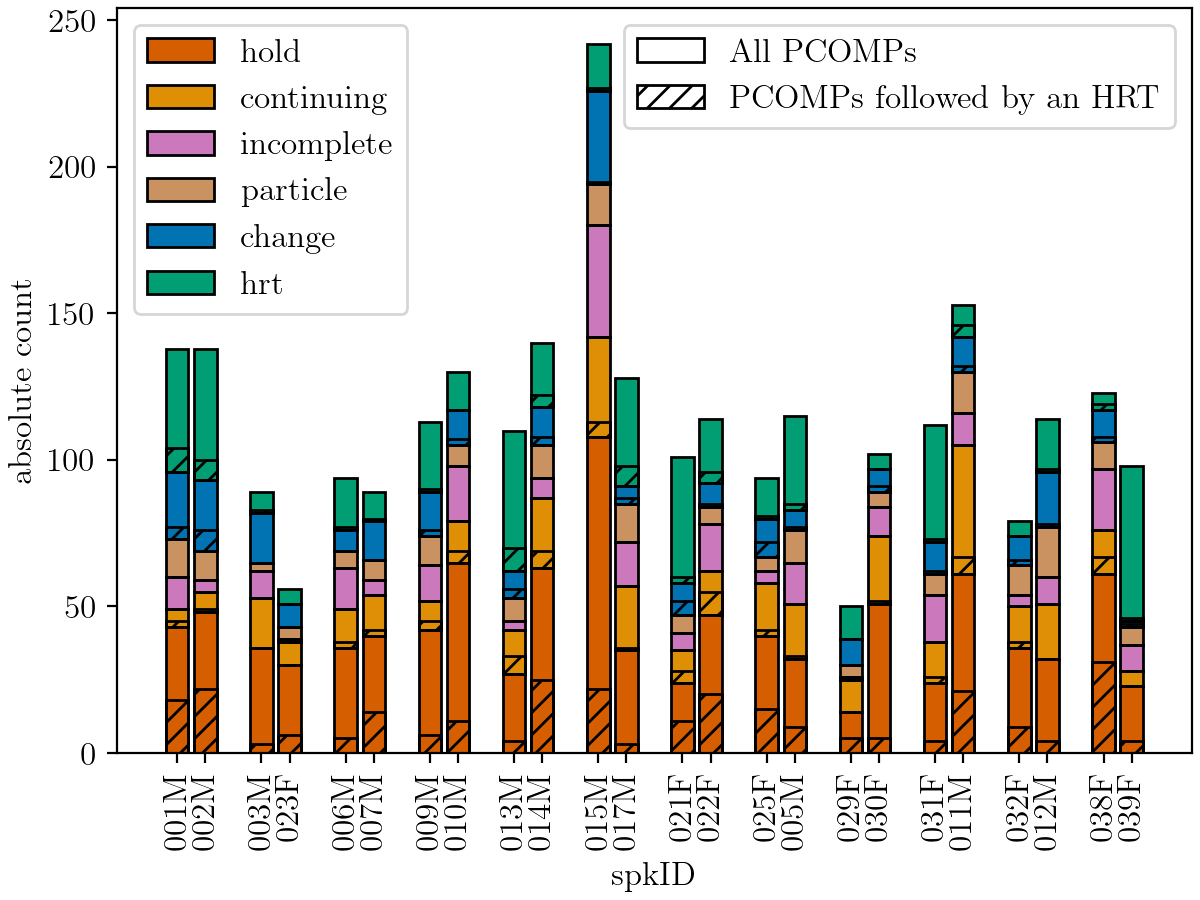

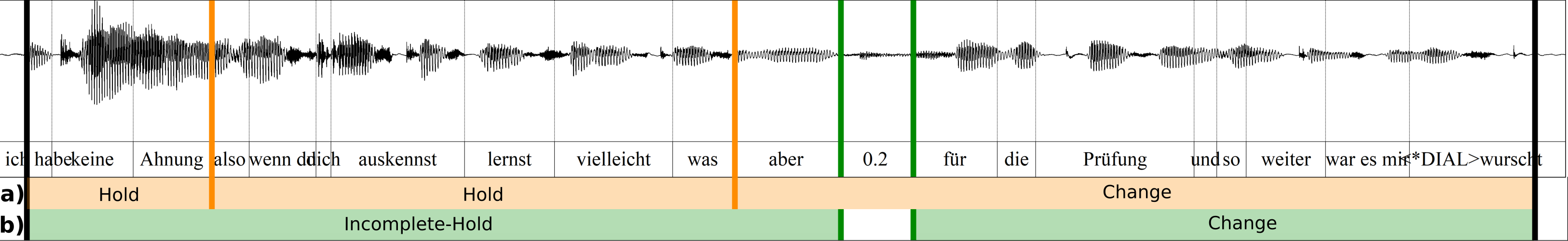

The investigation of conversational speech requires the close collaboration of linguists and speech technologists to develop new modeling techniques that allow the incorporation of various knowledge sources. This paper presents a progress report on the ongoing interdisciplinary project “Cross-layer language models for conversational speech” with a focus on the development of an annotation system for communicative functions. We discuss the requirements of such a system for the application in ASR as well as for the use in phonetic studies of talk-in-interaction, and illustrate emerging issues with the example of turn management. Our annotation system on the communicative functions level has two independent tiers. The IPU tier (“Inter Pausal Units”) and the PCOMP tier (“Points of potential syntactic COMPletion”). The figure shows an example of how PCOMP and IPU annotations are mapped onto each other. In this example, Speaker 2 holds his turn by making a pause at a point of...

This work provides an initial investigation on the application of convolutional neural networks (CNNs) for fingerprint-based positioning using measured massive MIMO channels. When represented in appropriate domains, massive MIMO channels have a sparse structure which can be efficiently learned by CNNs for positioning purposes. We evaluate the positioning accuracy of state-of-the-art CNNs with channel fingerprints generated from a channel model with a rich clustered structure: the COST 2100 channel model. We find that moderately deep CNNs can achieve fractional-wavelength positioning accuracies, provided that an enough representative data set is available for training. The full version of this paper can be found on Arxiv or on IEEE Xplore.

We present a message passing algorithm for localization and tracking in multipath-prone environments that implicitly considers obstructed line-of-sight situations. The proposed adaptive probabilistic data association algorithm infers the position of a mobile agent using multiple anchors by utilizing delay and amplitude of the multipath components (MPCs) as well as their respective uncertainties. By employing a nonuniform clutter model, we enable the algorithm to facilitate the position information contained in the MPCs to support the estimation of the agent position without exact knowledge about the environment geometry. Our algorithm adapts in an online manner to both, the time-varying signal-to-noise-ratio and line-of-sight (LOS) existence probability of each anchor. In a numerical analysis we show that the algorithm is able to operate reliably in environments characterized by strong multipath propagation, even if a temporary obstruction of all anchors occurs simultaneously The full version of this paper can be found on Arxiv or on...

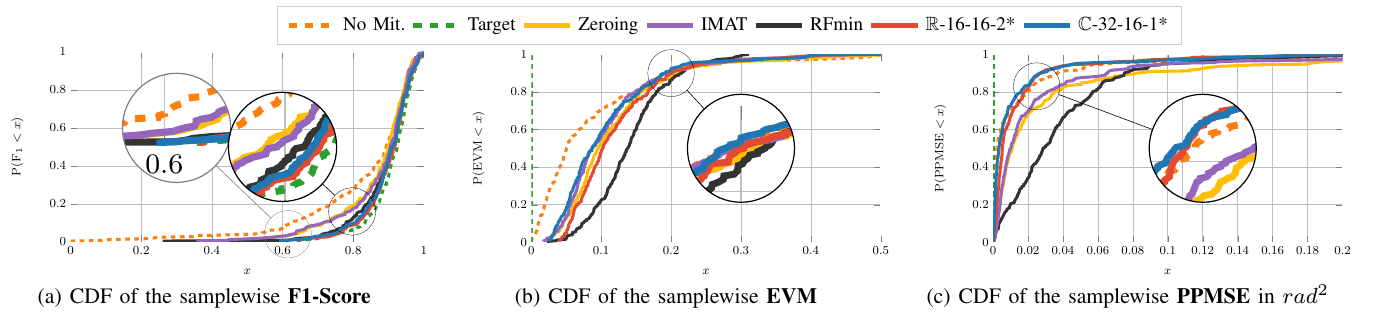

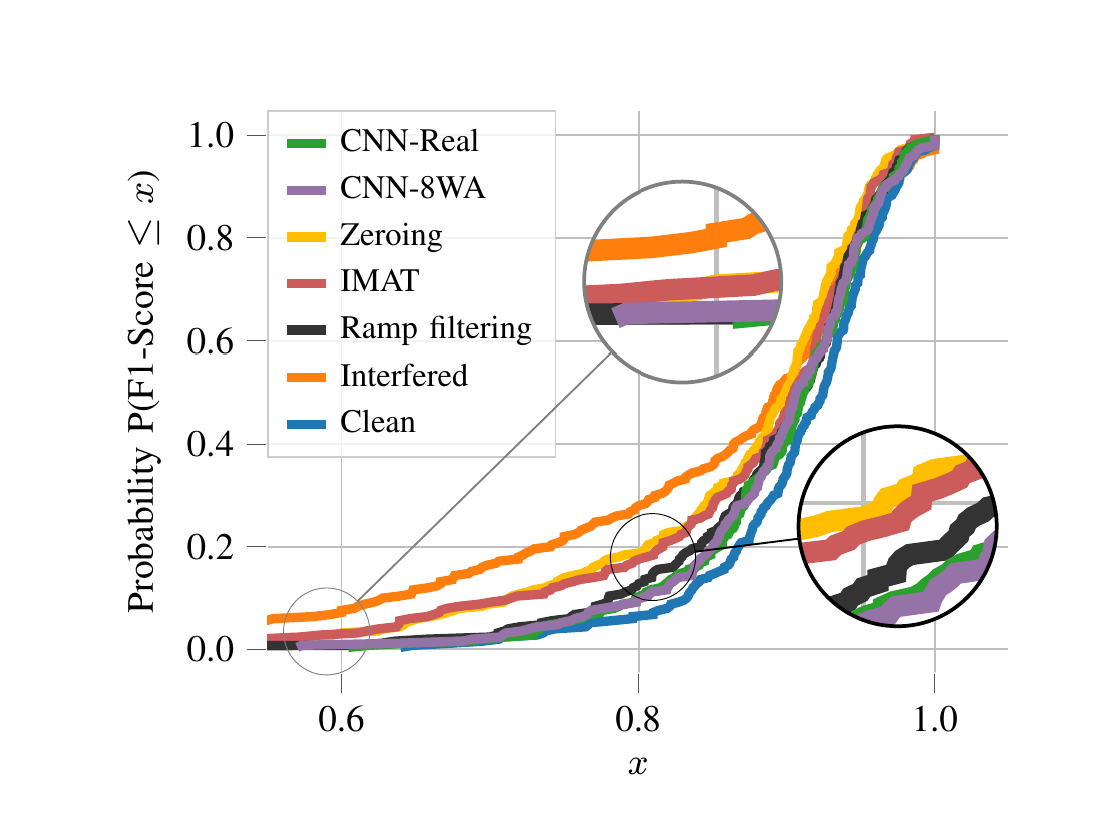

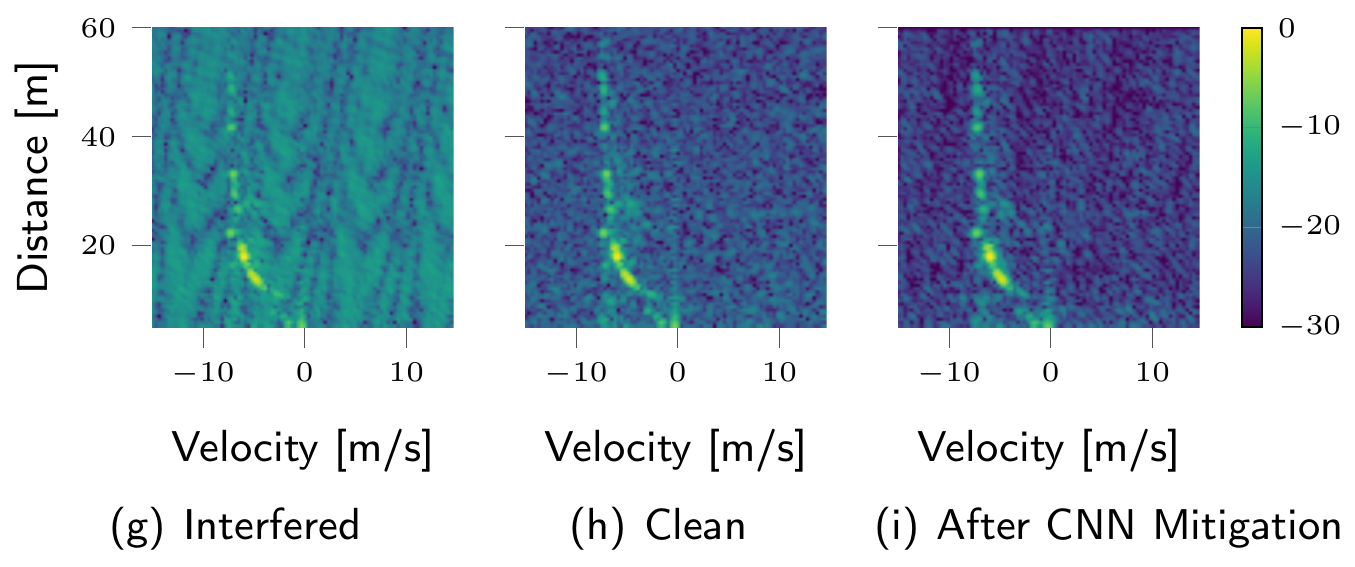

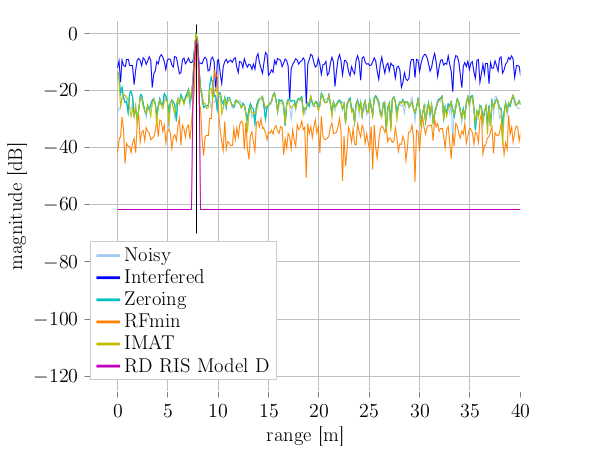

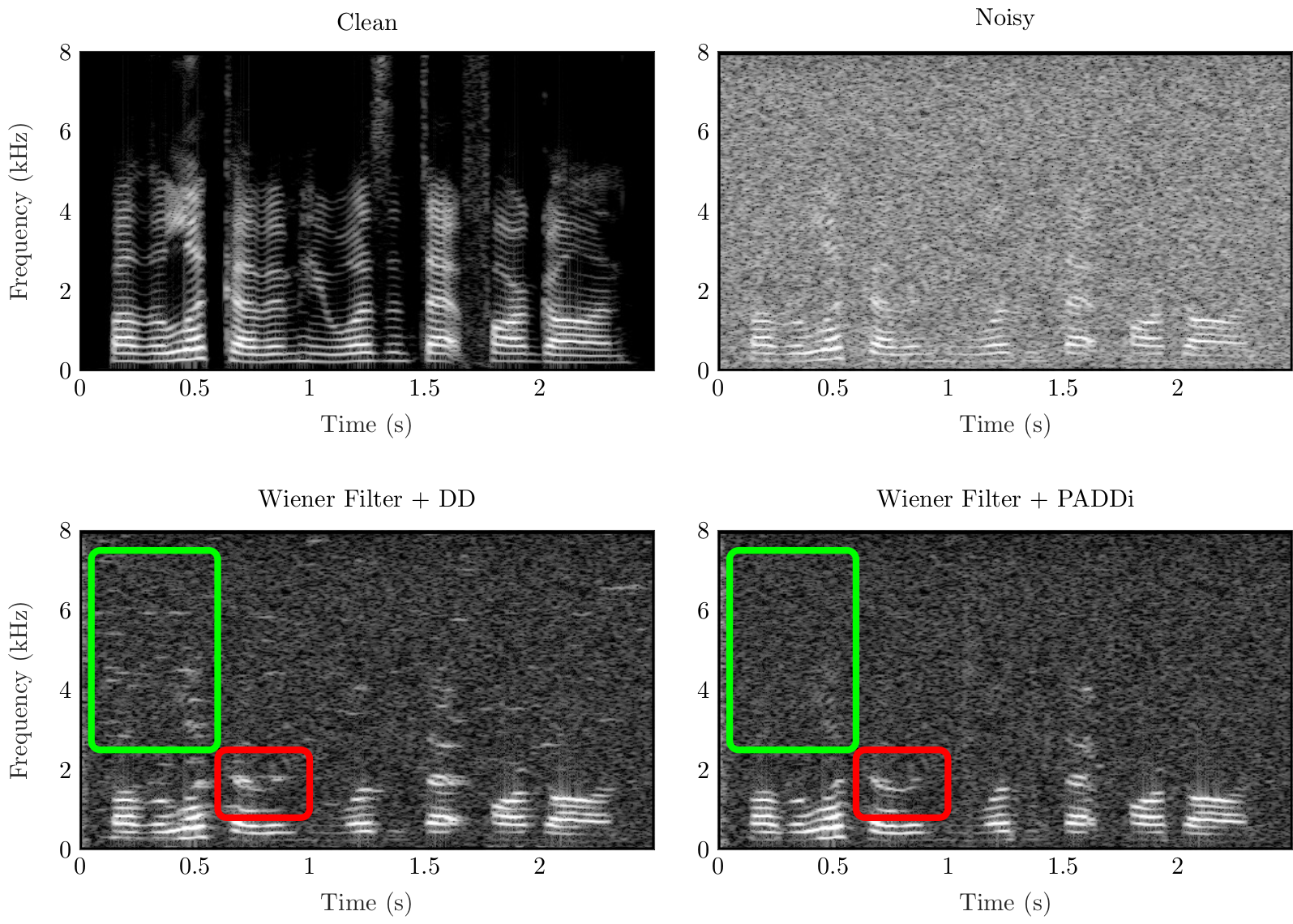

Autonomous driving highly depends on capable sensors to perceive the environment and to deliver reliable information to the vehicles’ control systems. To increase its robustness, a diversified set of sensors is used, including radar sensors. Radar is a vital contribution of sensory information, providing high resolution range as well as velocity measurements. The increased use of radar sensors in road traffic introduces new challenges. As the so far unregulated frequency band becomes increasingly crowded, radar sensors suffer from mutual interference between multiple radar sensors. This interference must be mitigated in order to ensure a high and consistent detection sensitivity. In this paper, we propose the use of Complex-valued Convolutional Neural Networks (CVCNNs) to address the issue of mutual interference between radar sensors. We extend previously developed methods to the complex domain in order to process radar data according to its physical characteristics. This not only increases data efficiency, but also...

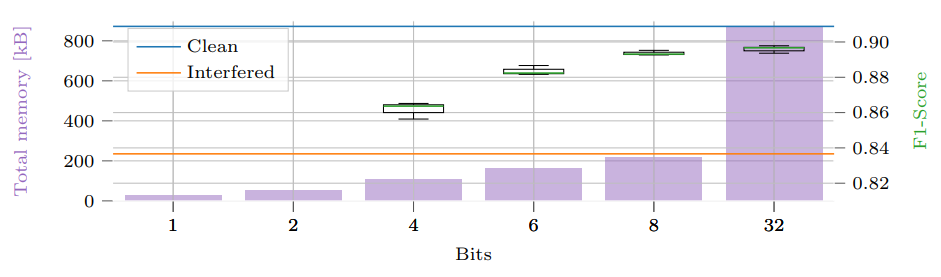

Radar sensors are crucial for environment perception of driver assistance systems as well as autonomous vehicles. Key performance factors are weather resistance and the possibility to directly measure velocity. With a rising number of radar sensors and the so-far unregulated automotive radar frequency band, mutual interference is inevitable and must be dealt with. Algorithms and models operating on radar data in early processing stages are required to run directly on specialized hardware, i.e. the radar sensor. This specialized hardware typically has strict resource constraints, i.e. a low memory capacity and low computational power. Convolutional Neural Network (CNN)-based approaches for denoising and interference mitigation yield promising results for radar processing in terms of performance. However, these models typically contain millions of parameters, stored in hundreds of megabytes of memory, and require additional memory during execution. In this paper, we investigate quantization techniques for CNN-based denoising and interference mitigation of radar signals....

Multipath-based simultaneous localization and mapping (SLAM) algorithms can detect and localize radio reflective surfaces and jointly estimate the time-varying position of mobile agents. A promising approach is to represent radio reflective surfaces by so called virtual anchors (VAs). In existing multipathbased SLAM algorithms, VAs are modeled and inferred for each physical anchor (PA) and each propagation path individually, even if multiple VAs represent the same physical surface. This limits timeliness and accuracy of mapping and agent localization. In this paper, we introduce an improved statistical model and estimation method that enables data fusion for multipath-based SLAM by representing each surface with a single master virtual anchor (MVA). Our numerical simulation results show that the proposed multipath-based SLAM algorithm can significantly increase map convergence speed and reduce the mapping error compared to a state-of-the-art method. Figure: Factor graph for multipath-based SLAM corresponding to the factorization of the posterior PDF. Factor nodes...

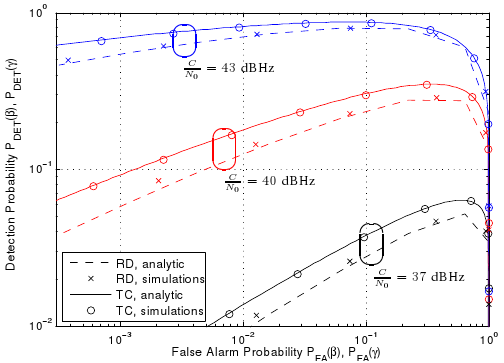

We consider the problem of detecting and estimating radio channel dispersion parameters of a single specular multipath component (SMC) embedded in spatially correlated noise from observations collected using a MIMO measurement setup. The corresponding detection threshold versus the false alarm probability is derived from $\chi^2$-random field with two degrees of freedom defined over a 5-dimensional dispersion space using the theoretical framework of the expected Euler characteristic of random excursion sets. We show that the probability of false alarm is in excellent accordance with the relative-frequency of estimating false alarms using a maximum likelihood estimator and detector for acquiring the 5-dimensional dispersion parameter vector of the SMC. Figure: Results for data generated for a MIMO setup. Comparison of the derived probability of false alarm to the relative frequency of false alarm and a classical bin-based probability of false alarm, and the derived probability of missed detection to the relative frequency of...

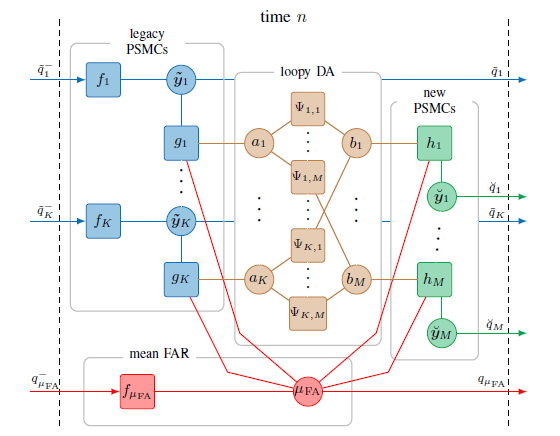

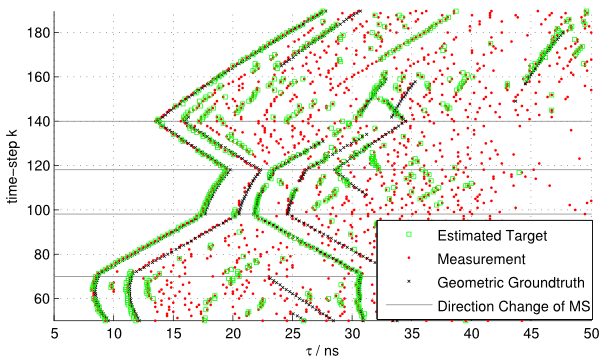

In this work we present a belief propagation (BP) algorithm with probabilistic data association (DA) for detection and tracking of specular multipath components (MPCs). In real dynamic measurement scenarios, the number of MPCs reflected from visible geometric features, the MPC dispersion parameters, and the number of false alarm contributions are unknown and time-varying. We develop a Bayesian model for specular MPC detection and joint estimation problem, and represent it by a factor graph which enables the use of BP for efficient computation of the marginal posterior distributions. A parametric channel estimator is exploited to estimate at each time step a set of MPC parameters, which are further used as noisy measurements by the BP-based algorithm. The algorithm performs probabilistic DA, and joint estimation of the time-varying MPC parameters and mean false alarm rate. Preliminary results using synthetic channel measurements demonstrate the excellent performance of the proposed algorithm in a realistic...

Learning the structure of Bayesian networks is a difficult combinatorial optimization problem. In this paper, we consider learning of tree-augmented naive Bayes (TAN) structures for Bayesian network classifiers with discrete input features. Instead of performing a combinatorial optimization over the space of possible graph structures, the proposed method learns a distribution over graph structures. After training, we select the most probable structure of this distribution. This allows for a joint training of the Bayesian network parameters along with its TAN structure using gradient-based optimization. The proposed method is agnostic to the specific loss and only requires that it is differentiable. We perform extensive experiments using a hybrid generative-discriminative loss based on the discriminative probabilistic margin. Our method consistently outperforms random TAN structures and Chow-Liu TAN structures. The paper was presented at the International Conference on Probabilistic Graphical Models (PGM 2020) and can be found at https://arxiv.org/abs/2008.09566. Code for the experiments...

Radar sensors are crucial for environment perception of driver assistance systems as well as autonomous vehicles. Key performance factors are weather resistance and the possibility to directly measure velocity. With a rising number of radar sensors and the so far unregulated automotive radar frequency band, mutual interference is inevitable and must be dealt with. Algorithms and models operating on radar data in early processing stages are required to run directly on specialized hardware, i.e. the radar sensor. This specialized hardware typically has strict resource-constraints, i.e. a low memory capacity and low computational power. Convolutional Neural Network (CNN)-based approaches for denoising and interference mitigation yield promising results for radar processing in terms of performance. However, these models typically contain millions of parameters, stored in hundreds of megabytes of memory, and require additional memory during execution. In this paper we investigate quantization techniques for CNN-based denoising and interference mitigation of radar signals....

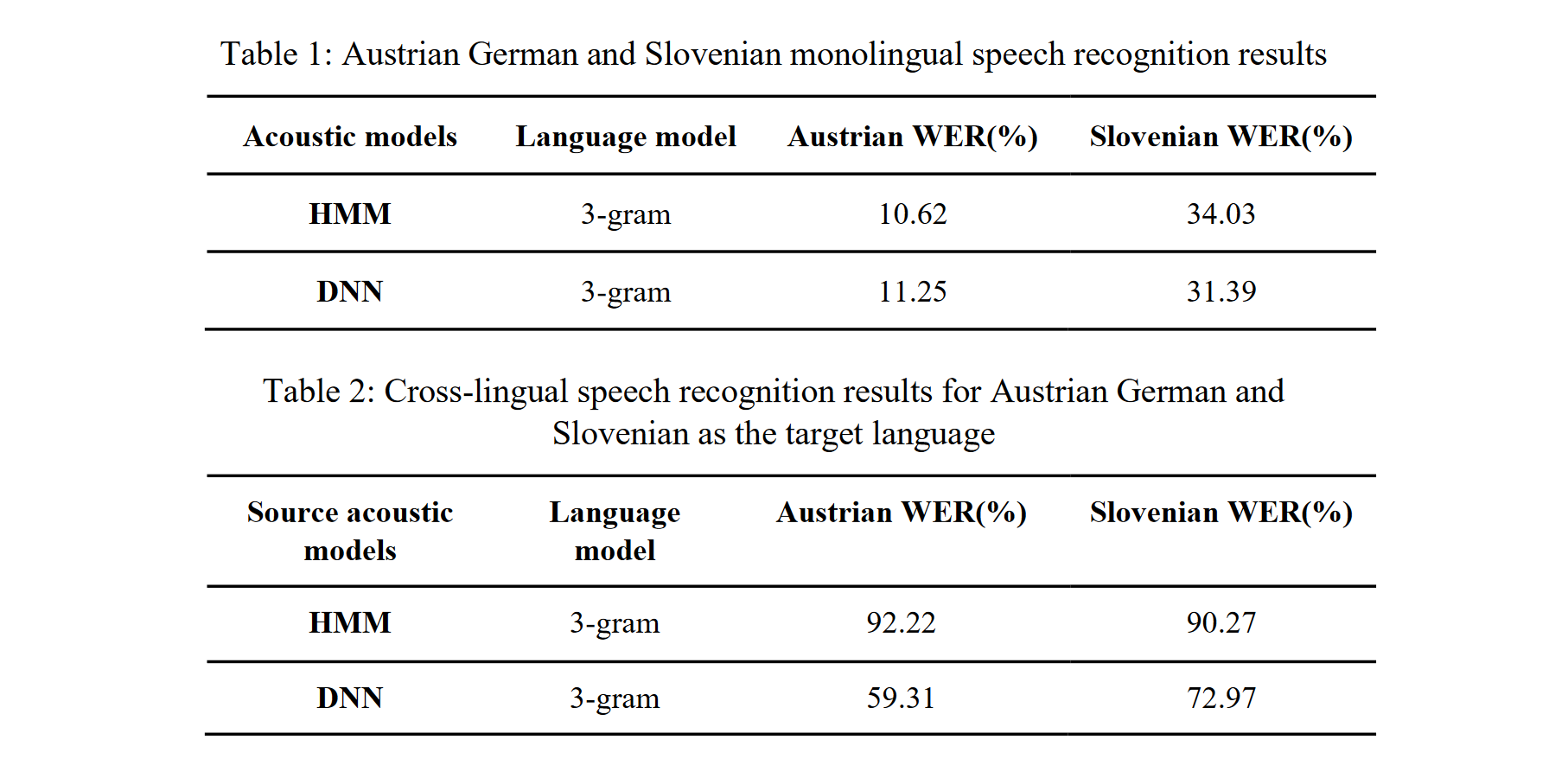

Methods of cross-lingual speech recognition have a high potential to overcome limitations on resources of spoken language in under-resourced languages. Not only can they be applied to build automatic speech recognition (ASR) systems for such languages, they can also be utilized to generate further resources of spoken language. This paper presents a cross-lingual ASR system based on data from two languages, Slovenian and Austrian German. Both were used as a source and target language for cross-lingual transfer (i.e., the acoustic models were trained on material from the source language, and recognition was tested on material from the target language). The cross-lingual mapping between the Slovenian phone set (40 phones) and the Austrian German phone set (33 phones) was carried out using expert knowledge about the acoustic-phonetic properties of the phones. For the experiments, we used data from two speech corpora: the Slovenian BNSI Broadcast News speech database and the Austrian...

In this paper we describe a simple and intuitive model for the effects of the human body of a user close by a receiver. We specifically investigate the UWB channel in off-body condition, where the agent device is located directly on the human body, and another device functioning as anchor is located in the environment. Due to the high time resolution of UWB signals, the effect of the human body can be modeled by means of a extended object producing multiple scattered paths. The geometric stochastic channel model is based on a connection between an (empirically chosen) ellipsoidal body shape and a distribution function for the scattering points chosen to fit measurements in terms of the resulting signal shape as well as in terms of the effects visible when applying a maximum likelihood multipath channel estimator. The effect of the human body manifests itself most notably via strong shadowing, climaxing...

This work, we investigate the reliability of time-of-arrival (TOA) based ranging using maximum-likelihood (ML) estimation in a dense multipath (DM) channel in terms of both the conventional mean squared error (MSE) as well as confidence bounds. We show that in the presence of DM the ML estimator distorts the signal mainlobe due to its whitening property, resulting in a bandwidth (BW) dependent bias, even before the outlier driven threshold region is reached. Low-complexity metrics for accurately determining the performance in terms of the probability density (PDF) of the estimation error of both ML estimation and joint ML estimation and detection are provided. These metrics are based on the well known method of interval estimation (MIE) combined with local error prediction using the normalized noise-free likelihood (NNLIKE) and consider the non-Gaussian effects of outliers as well as mainlobe distortion. The full version of the paper can be found on Researchgate Figure:...

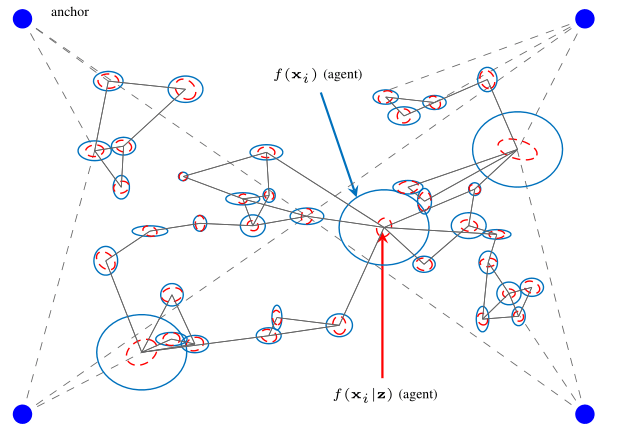

In this work, we propose a Bayesian agent network planning algorithm for information-criterion-based measurement selection for cooperative localization in static networks with anchors. This allows to increase the accuracy of the agent positioning while keeping the number of measurements between agents to a minimum. The proposed algorithm is based on minimizing the conditional differential entropy (CDE) of all agent states to determine the optimal set of measurements between agents. Such combinatorial optimization problems have factorial runtime and quickly become infeasible, even for a rather small number of agents. Therefore, we propose a Bayesian agent network planning algorithm that performs a local optimization for each state. Experimental results demonstrate a performance improvement compared to a random measurement selection strategy, significantly reducing the position RMSE at a smaller number of measurements between agents. The full version of the paper can be found on Researchgate Figure: This figure shows a scenario of a...

Radar sensors are crucial for environment perception of driver assistance systems as well as autonomous cars. Key performance factors are a fine range resolution and the possibility to directly measure velocity. With a rising number of radar sensors and the so far unregulated automotive radar frequency band, mutual interference is inevitable and must be dealt with. Sensors must be capable of detecting, or even mitigating the harmful effects of interference, which include a decreased detection sensitivity. In this paper, we evaluate a Convolutional Neural Network (CNN)-based approach for interference mitigation on real-world radar measurements. We combine real measurements with simulated interference in order to create input-output data suitable for training the model. A finite sample size performance comparison shows the effectiveness of the model trained on either simulated or real data as well as for transfer learning. A comparative performance analysis with the state of the art emphasizes the potential...

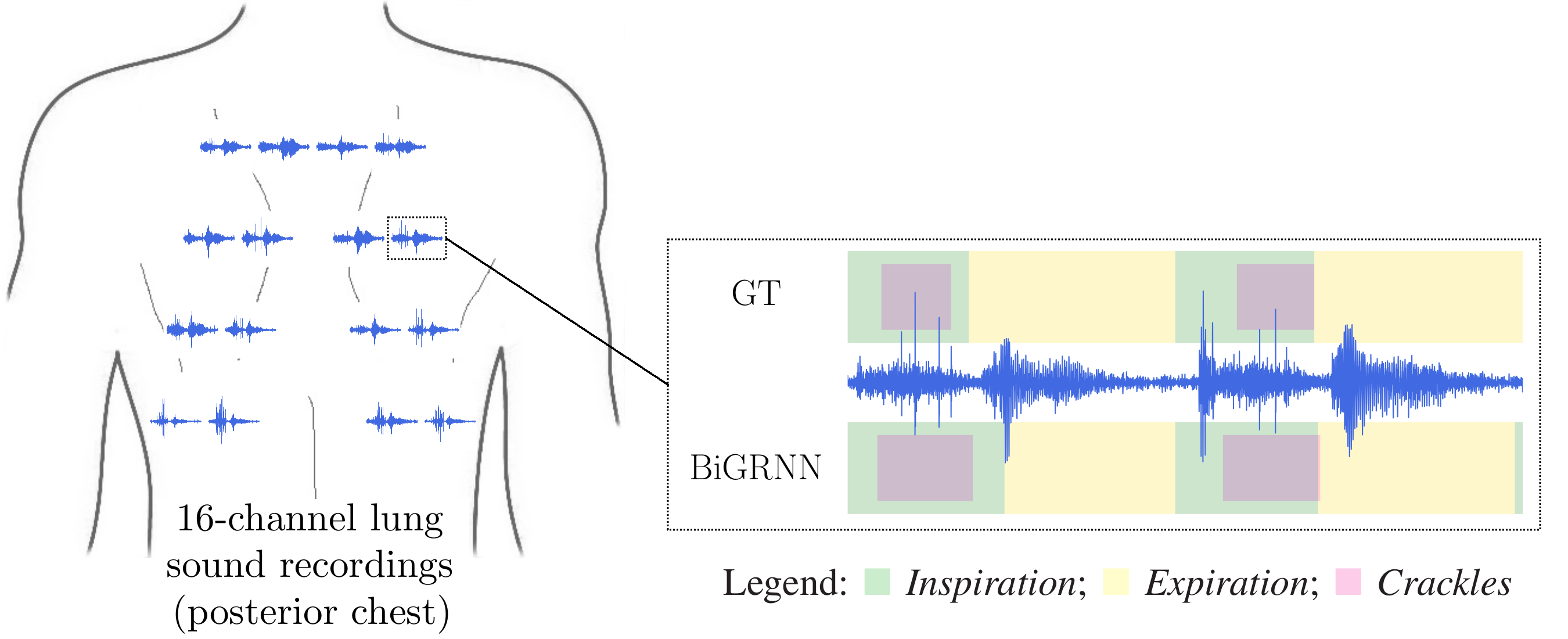

Computational methods for the analysis of lung sounds are beneficial for computer-supported diagnosis, digital storage and monitoring in critical care. Pathological changes of the lung are tightly connected to characteristic sounds enabling a fast and inexpensive diagnosis. Traditional auscultation with a stethoscope has several disadvantages: subjectiveness, i.e. the lung sounds are evaluated depending on the experience of the physician, cannot provide continuous monitoring and a trained expert is required. Furthermore, the characteristics of the sounds are in the low frequency range, where the human hearing has limited sensitivity and is susceptible to noise artifacts. To facilitate a more objective assessment of the lung sounds for diagnosis of pulmonary diseases/conditions we developed a multi-channel recording device (see Figure). Furthermore, in a clinical trial we classified adventitious and normal lung sounds using deep neural networks [1]. Our device enables a reliable easy-to-use lung sound recording for (1) better assistance to patients and...

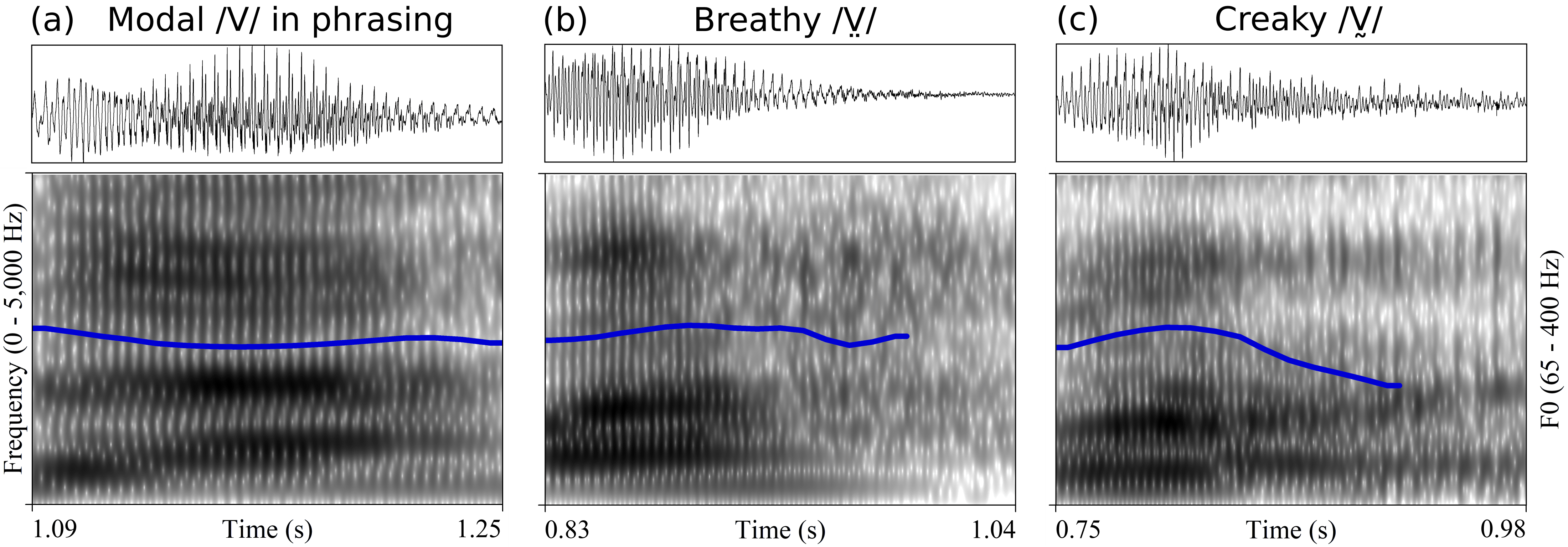

Chichimec (Otomanguean) has two tones, high and low, and a phonological three-way phonation contrast: modal /V/, breathy /V̤/ and creaky /V̰/. Tone and phonation type contrasts are used independently. This paper investigates the acoustic realization of modal, breathy and creaky vowels, the timing of phonation in non-modal vowels, and the production of tone in combination with different phonation types. The results of Cepstral Peak Prominence and three spectral tilt measures showed that phonation type contrasts are not distinguished by the same acoustic measures for women and men. In line with expectations for laryngeally complex languages, phonetic modal and non-modal phonation are sequenced in phonological breathy and creaky vowels. With respect to the timing pattern, however, the results show that non-modal phonation is not, as previously reported, mainly located in the middle of the vowel. Non-modal phonation is instead predominantly realized in the second half of phonological breathy and creaky vowels....

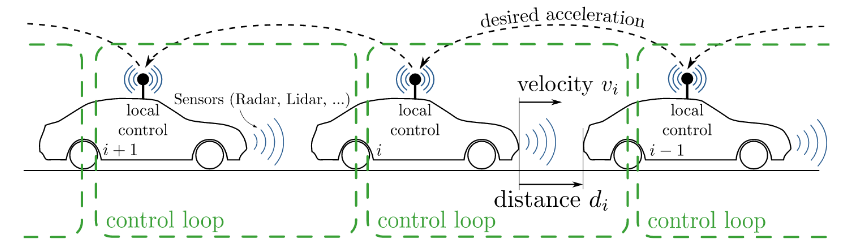

Models play an essential role in the design process of cyber-physical systems. They form the basis for simulation and analysis and help in identifying design problems as early as possible. However, the construction of models that comprise physical and digital behavior is challenging. Consequently, there is considerable interest in learning the behavior of such systems using machine learning. However, the performance of the machine learning techniques depends crucially on sufficient and representative training data covering the behavior of the system adequately not only in standard situations, but also in edge cases that are often particularly important. In this work, we successfully combine methods from automata learning and model-based testing to fully automatically generate training data that is rich of edge cases. Experimental results on a platooning scenario show that recurrent neural networks learned with this data achieved significantly better results compared to models learned from randomly generated data. In particular,...

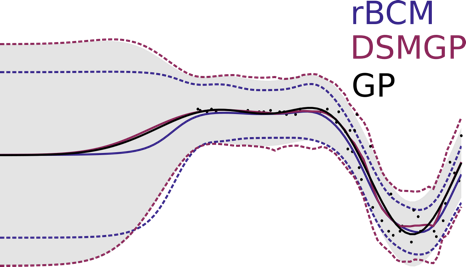

Gaussian Processes (GPs) are powerful non-parametric Bayesian regression models that allow exact posterior inference, but exhibit high computational and memory costs. In order to improve scalability of GPs, approximate posterior inference is frequently employed, where a prominent class of approximation techniques is based on local GP experts. However, the local-expert techniques proposed so far are either not well-principled, come with limited approximation guarantees, or lead to intractable models. In this paper, we introduce deep structured mixtures of GP experts, a well-principled stochastic process model which i) allows exact posterior inference, ii) has attractive computational and memory costs, and iii), when used as GP approximation, captures predictive uncertainties consistently better than previous approximations. Furthermore, deep structured mixtures can optionally be fine-tuned locally – regularised using local similarity constraints – which enables modelling of heteroscedasticity and non-stationarities. In a variety of experiments, we show that deep structured mixtures have a low approximation...

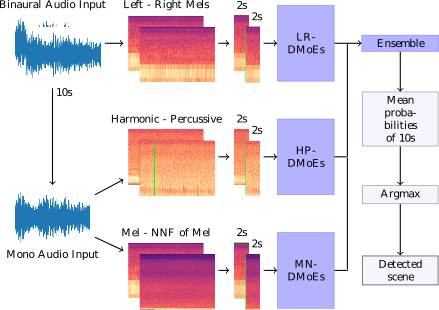

We propose a heterogeneous system of Deep Mixture of Experts (DMoEs) models using different Convolutional Neural Networks (CNNs) for acoustic scene classification (ASC). Each DMoEs module is a mixture of different parallel CNN structures weighted by a gating network. All CNNs use the same input data. The CNN architectures play the role of experts extracting a variety of features. The experts are pre-trained, and kept fixed (frozen) for the DMoEs model. The DMoEs is post-trained by optimizing weights of the gating network, which estimates the contribution of the experts in the mixture. In order to enhance the performance, we use an ensemble of three DMoEs modules each with different pairs of inputs and individual CNN models. The input pairs are spectrogram combinations of binaural audio and mono audio as well as their pre-processed variations using harmonic-percussive source separation (HPSS) and nearest neighbor filters (NNFs). The classification result of the proposed...

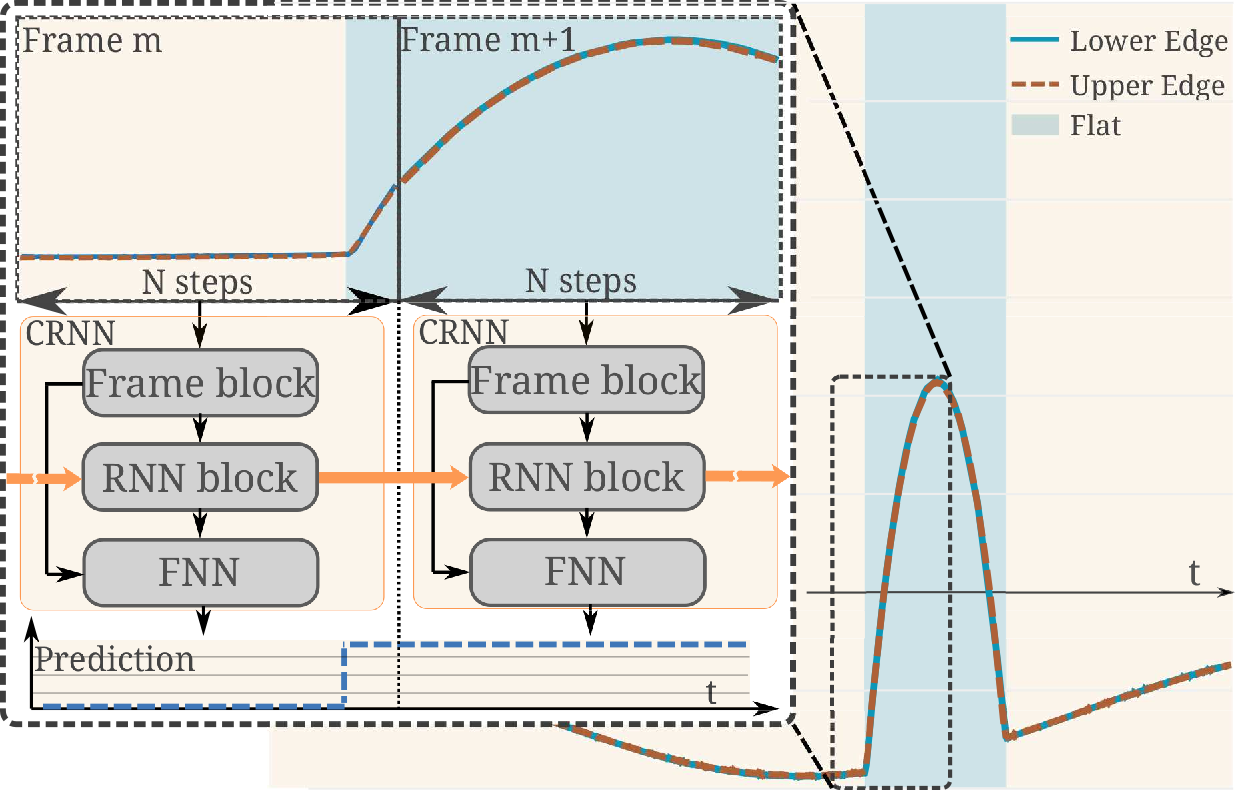

Efficient real-time segmentation and classification of time-series data is key in many applications, including sound and measurement analysis. We propose an efficient convolutional recurrent neural network (CRNN) architecture that is able to deliver improved segmentation performance at lower computational cost than plain RNN methods. We develop a CNN architecture, using dilated DenseNet-like kernels and implement it within the proposed CRNN architecture. For the task of online wafer-edge measurement analysis, we compare our proposed methods to standard RNN methods, such as Long Short Term Memory (LSTM) and Gated Recurrent Units (GRUs). We focus on small models with a low computational complexity, in order to run our model on an embedded device. We show that frame-based methods generally perform better than RNNs in our segmentation task and that our proposed recurrent dilated DenseNet achieves a substantial improvement of over 1.1 % F1-score compared to other frame-based methods. Figure: Principle of the recurrent...

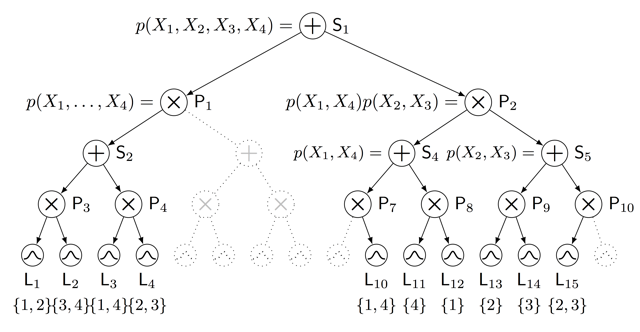

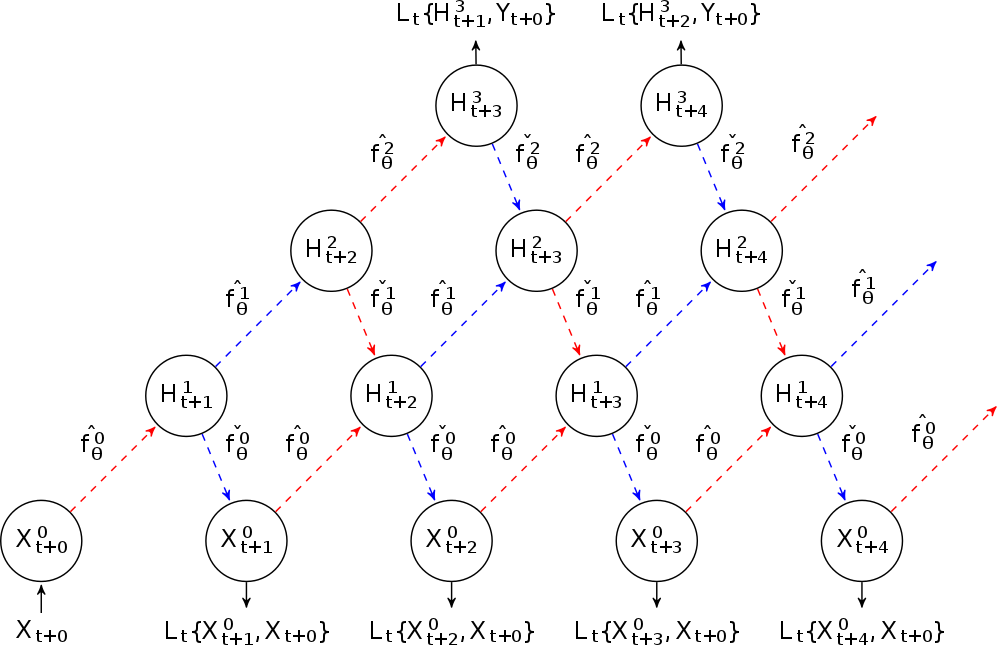

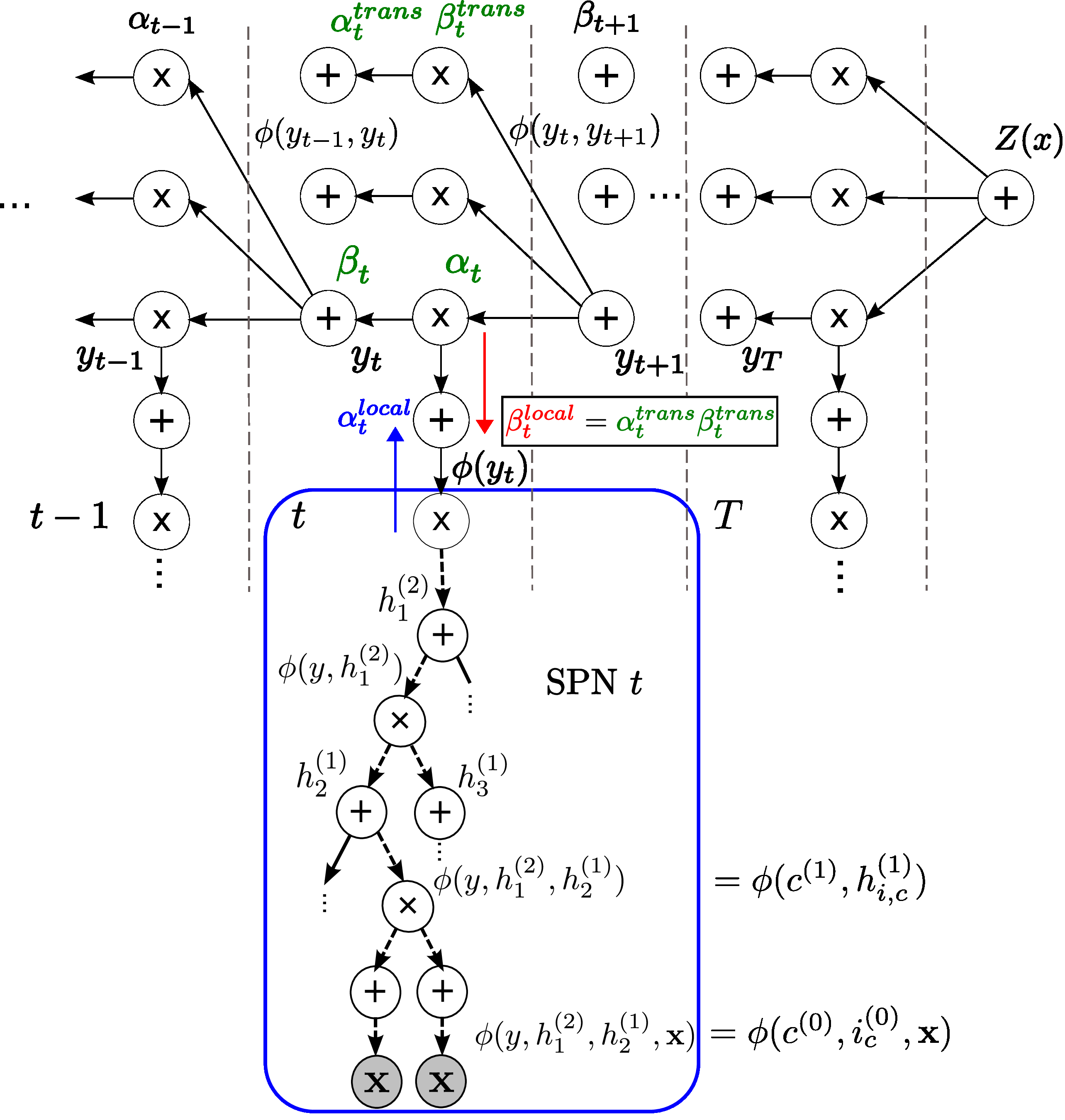

Sum-product networks (SPNs) are flexible density estimators and have received significant attention, due to their attractive inference properties. While parameter learning in SPNs is well developed, structure learning leaves something to be desired: Even though there is a plethora of SPN structure learners, most of them are somewhat ad-hoc, and based on intuition rather than a clear learning principle. In this paper, we introduce a well-principled Bayesian framework for SPN structure learning. First, we decompose the problem into i) laying out a basic computational graph, and ii) learning the so-called scope function over the graph. The first is rather unproblematic and akin to neural network architecture validation. The second characterises the effective structure of the SPN and needs to respect the usual structural constraints in SPN, i.e.~completeness and decomposability. While representing and learning the scope function is rather involved in general, in this paper, we propose a natural parametrisation for...

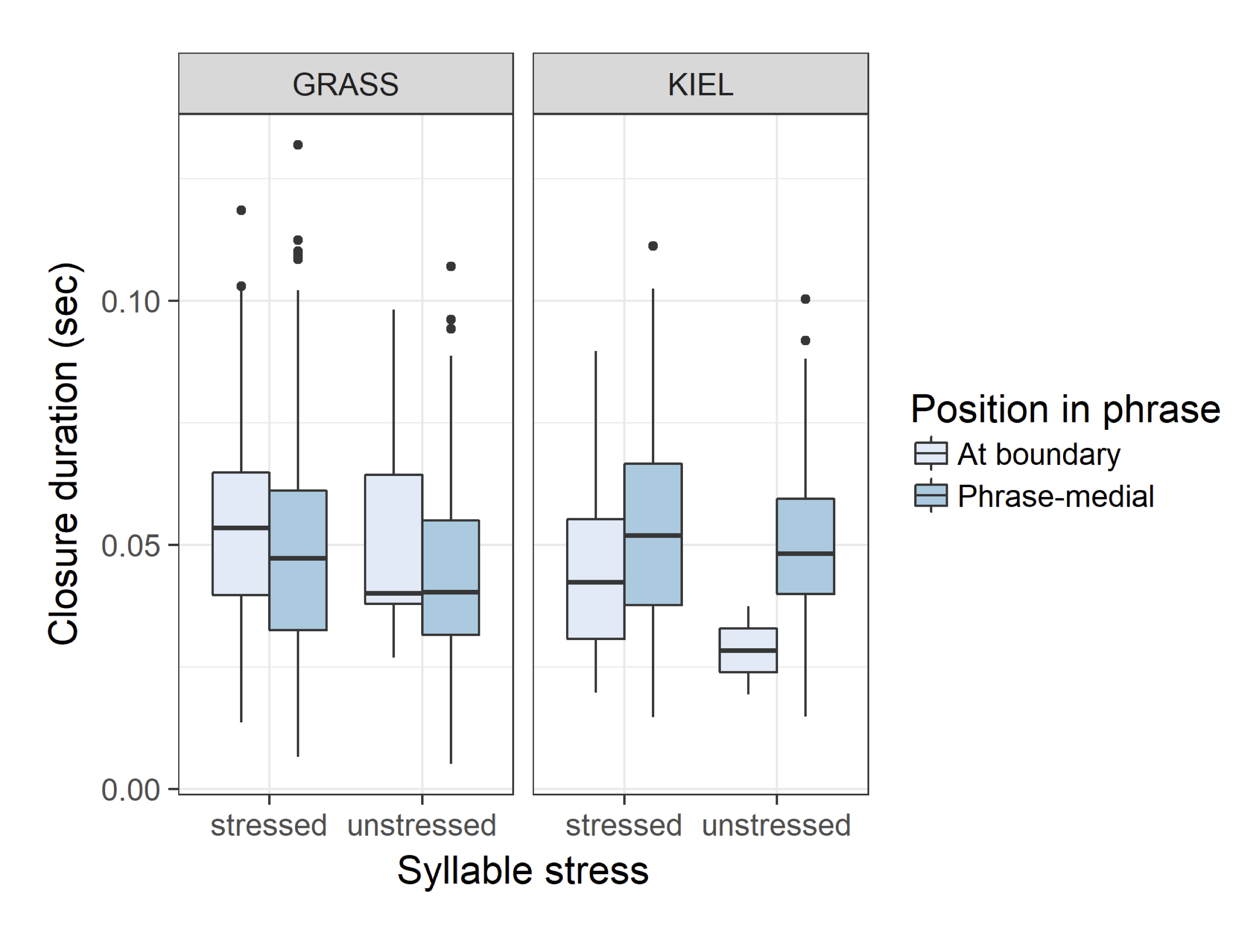

This study investigates the acoustic cues used to mark prosodic boundaries in two varieties of German, with a specific focus on variations in production of fortis and lenis plosives. Based on prosodic-boundary-adjacent and non-boundary-adjacent plosives from GRASS (Austrian German) and the Kiel Corpus of Read Speech (Northern German), we found that closure and burst duration features, as well as duration of a preceding adjacent segment,vary consistently in relationship to the presence or absence of a prosodic boundary, but that the relative weights of these features differ in the two varieties studied. Whereas stress marking in plosives is being driven more by burst duration in the Kiel Corpus data, it is driven more by closure duration in the GRASS data. This study suggests that boundary detection tools require variety-specific training materials, or else information from comparative studies such as the current work, in order to attain optimalfunction in specific varieties or...

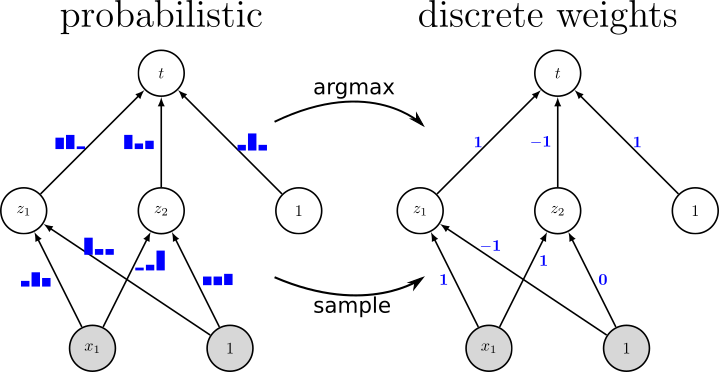

Since resource-constrained devices hardly benefit from the trend towards ever-increasing neural network (NN) structures, there is growing interest in designing more hardware-friendly NNs. In this paper, we consider the training of NNs with discrete-valued weights and sign activation functions that can be implemented more efficiently in terms of inference speed, memory requirements, and power consumption. We build on the framework of probabilistic forward propagations using the local reparameterization trick, where instead of training a single set of NN weights we rather train a distribution over these weights. Using this approach, we can perform gradient-based learning by optimizing the continuous distribution parameters over discrete weights while at the same time perform backpropagation through the sign activation. In our experiments, we investigate the influence of the number of weights on the classification performance on several benchmark datasets, and we show that our method achieves state-of-the-art performance. Figure: Instead of learning conventional real-valued...

Automotive radar is used to perceive the vehicle’s environment due to its capability to measure distance, velocity and angle of surrounding objects with a high resolution. With an increasing number of deployed radar sensors on the streets and because of missing regulations of the automotive radar frequency band, mutual interference must be dealt with in order to retain a sensitive detection capability. In this work we analyze the capability of Convolutional Neural Networks (CNNs) to address the issue of interference mitigation. Since automotive radar is a safety-critical application, interference mitigation and denoising algorithms must fulfill certain requirements. Application-related performance metrics are used to analyze noise suppression capability and ensure that no artifacts are generated by the processing. In this paper we show how NN-based denoising can applied in different steps of the radar signal processing chain. show specific CNN structures capable of denoising radar signals. present numerical results using application-related...

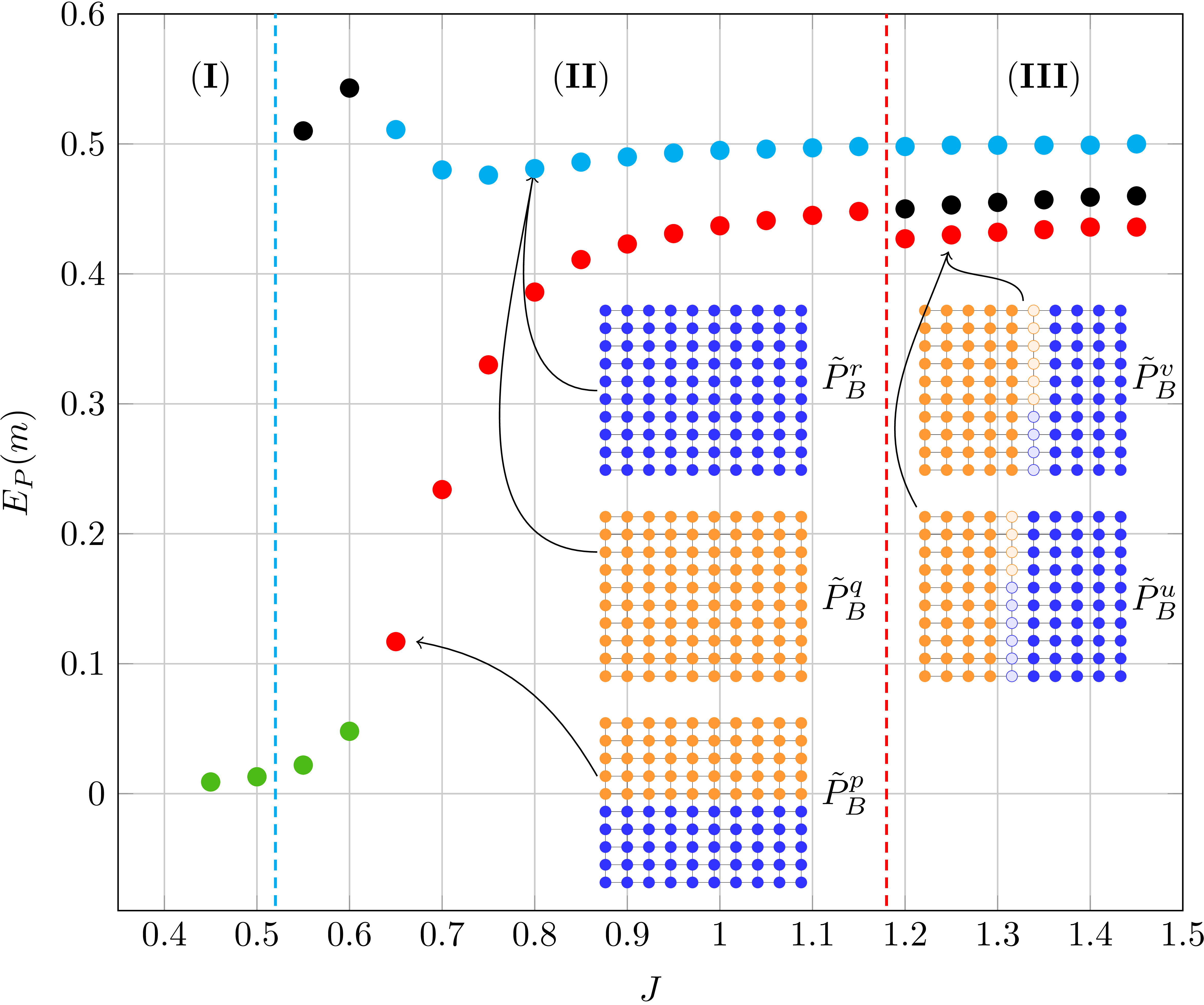

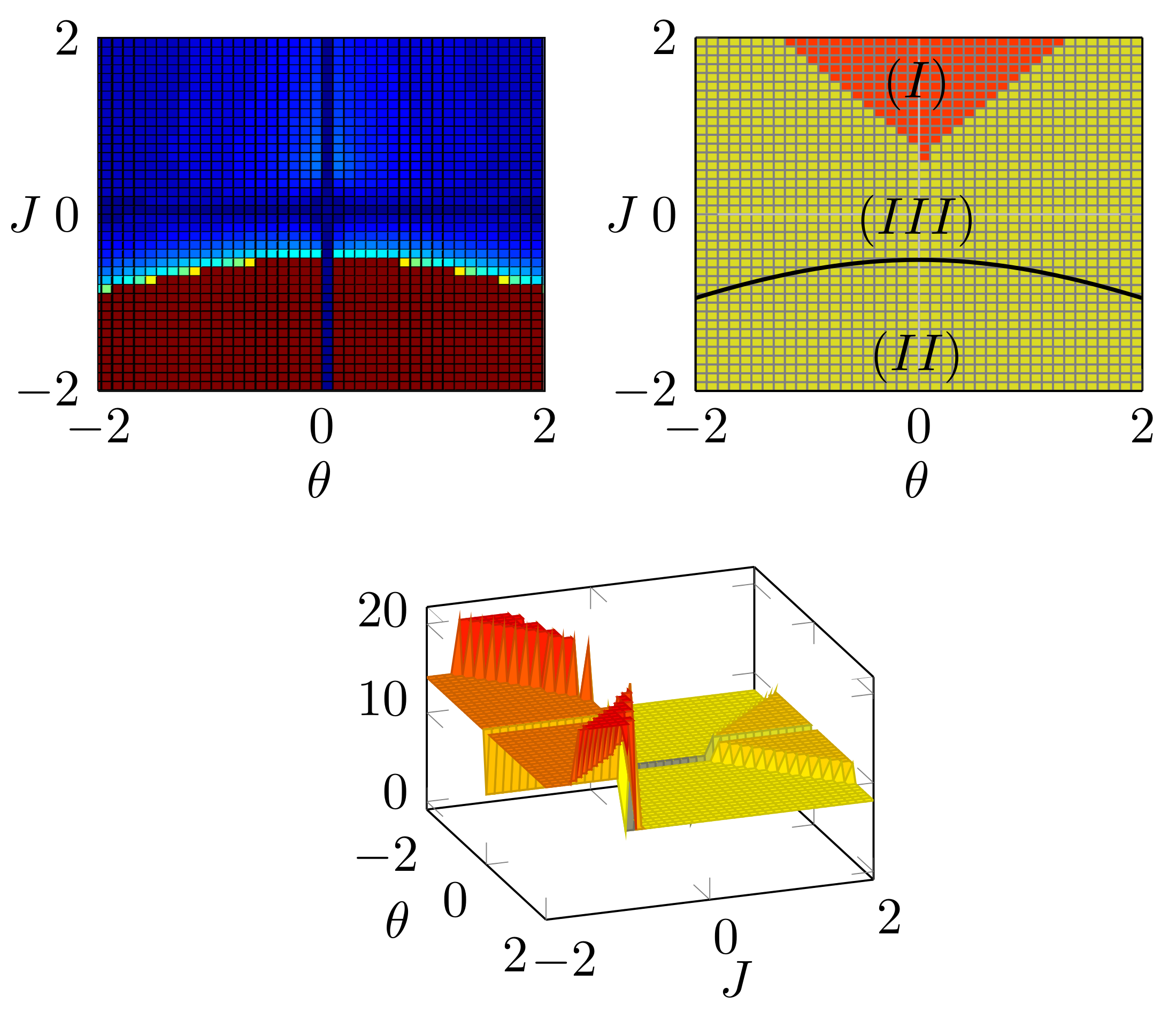

The marginals and the partition function can be estimated in a straight-forward manner for tree-structured models but require efficient approximation methods if the graphical model contains loops. One such method is Belief Propagation (BP) that exploits the structure of probabilistic graphical models in order to approximate the marginal distribution and the partition function. In this work, we analyze the difference between accurate marginals and an accurate partition function. Therefore, we go beyond well-established models (e.g., attractive models with identical or random potentials) and introduce a rich class of attractive models with inherent structure: patch potential models. These models exhibit many interesting phenomena and provide deep insights into the relationship between the approximation quality of the marginals and the partition function. We discuss the properties of the solution space and demonstrate that: (i) three different regions with fundamentally different properties exist; (ii) although it is often infeasible to obtain and combine...

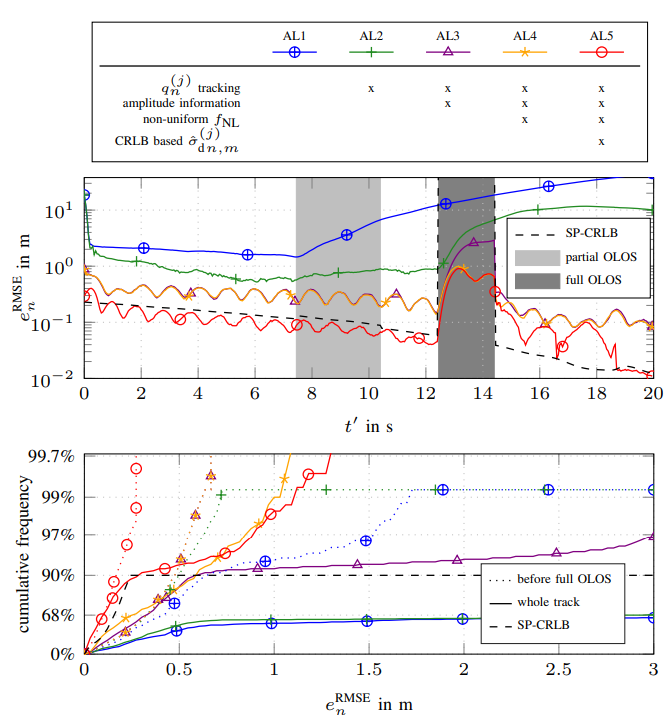

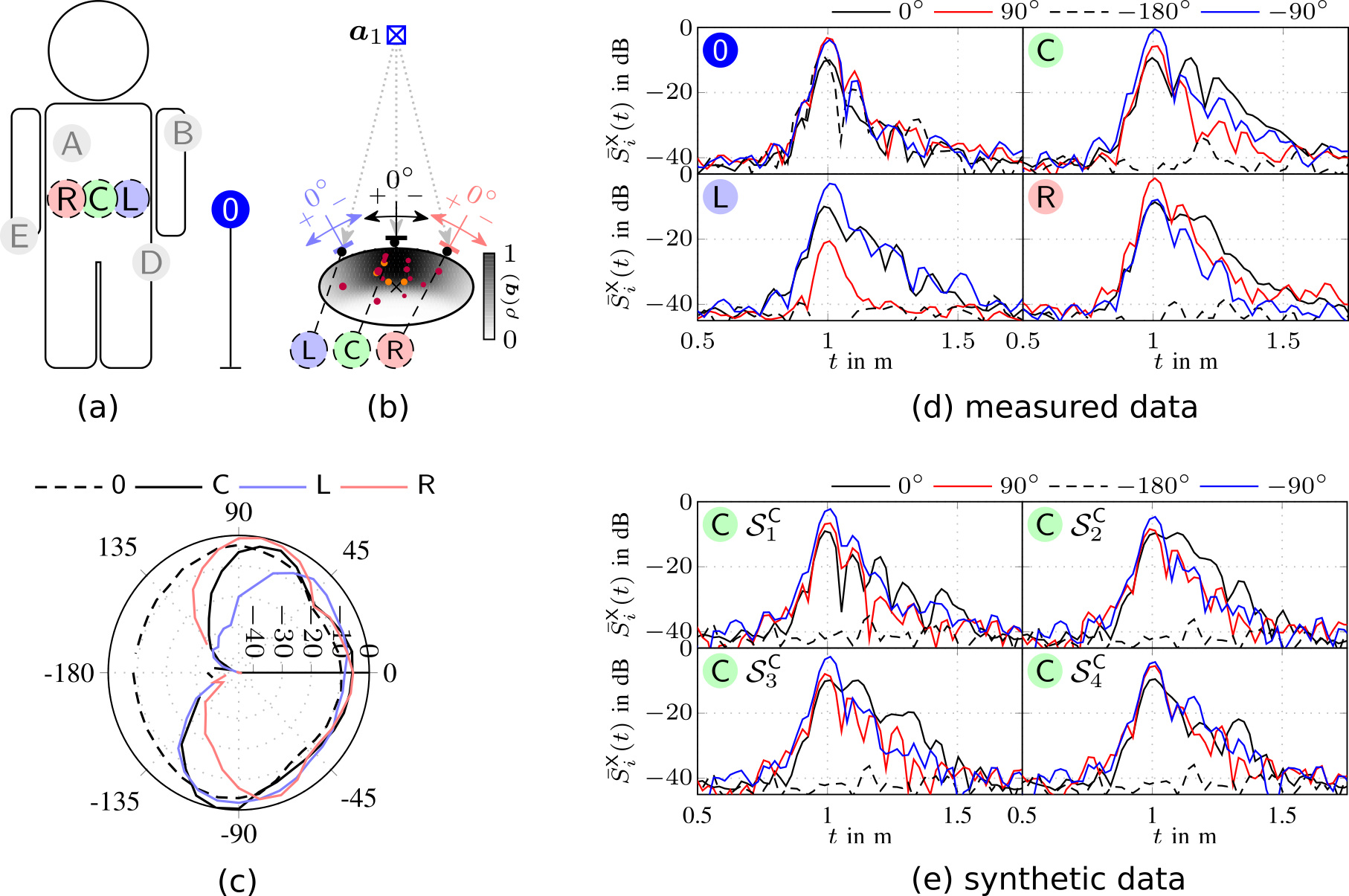

Simultaneous localization and mapping (SLAM) is important in many fields including robotics, autonomous driving, location-aware communication, and robust indoor localization. Specifically, robustness, i.e. achieving a low probability of localization outage, is still a challenging task in environments with strong multipath propagation. Therefore, new systems supporting multipath channels either take advantage of it by exploiting multipath components (MPCs) for localization [5], [6], [10], exploiting cooperation among agents, and/or exploiting robust signal processing against multipath propagation and clutter measurements in general. This work presents a Bayesian feature-based simultaneous localization and mapping (SLAM) algorithm that exploits multipath components (MPCs) in radio-signals. The proposed belief propagation (BP)-based algorithm enables the estimation of the position, velocity, and orientation of the mobile agent equipped with an antenna array by utilizing the delays and the angle-of-arrivals (AoAs) of the MPCs. The proposed algorithm also exploits the statistics of the complex amplitudes of MPC parameters, i.e. amplitude information...

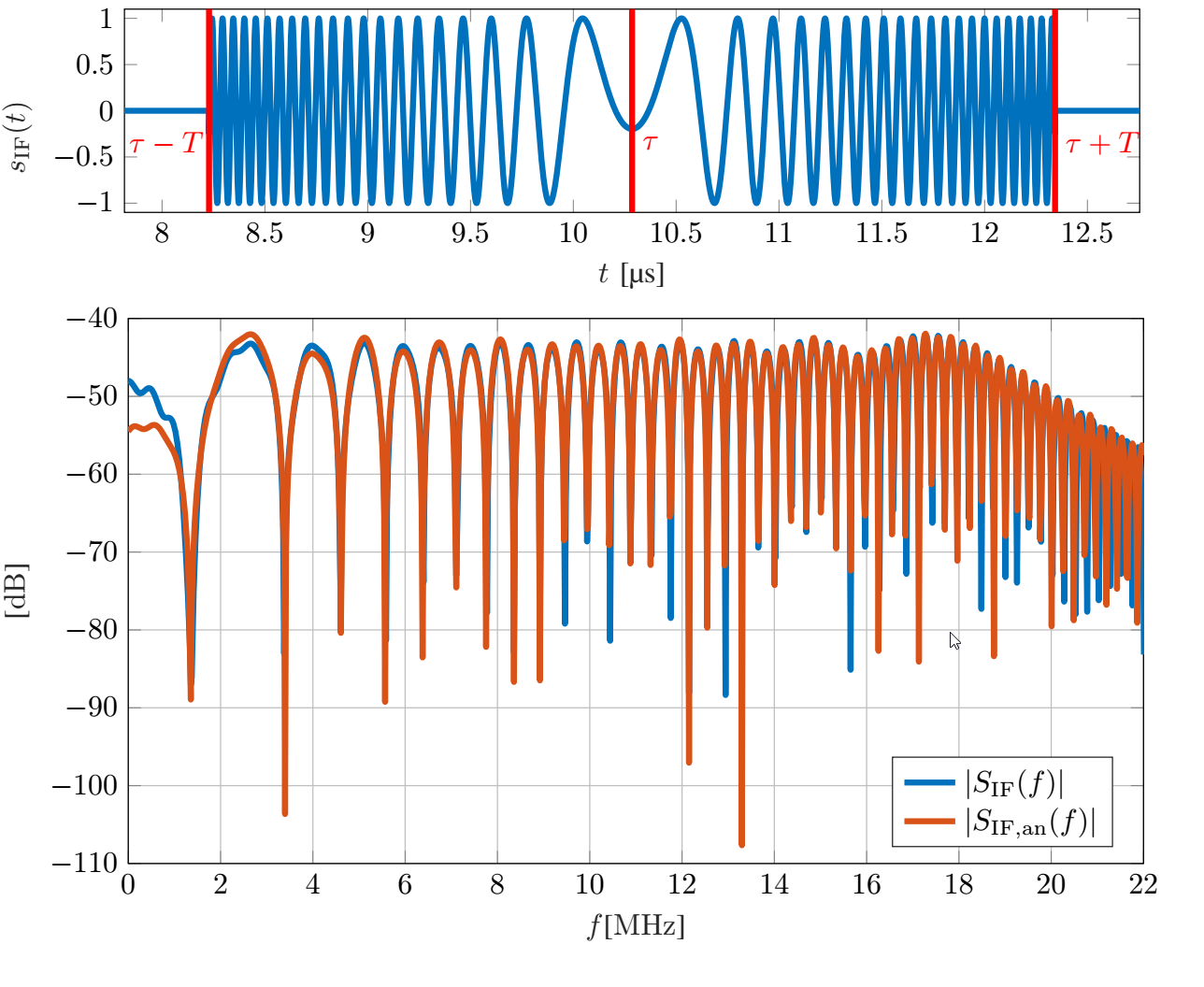

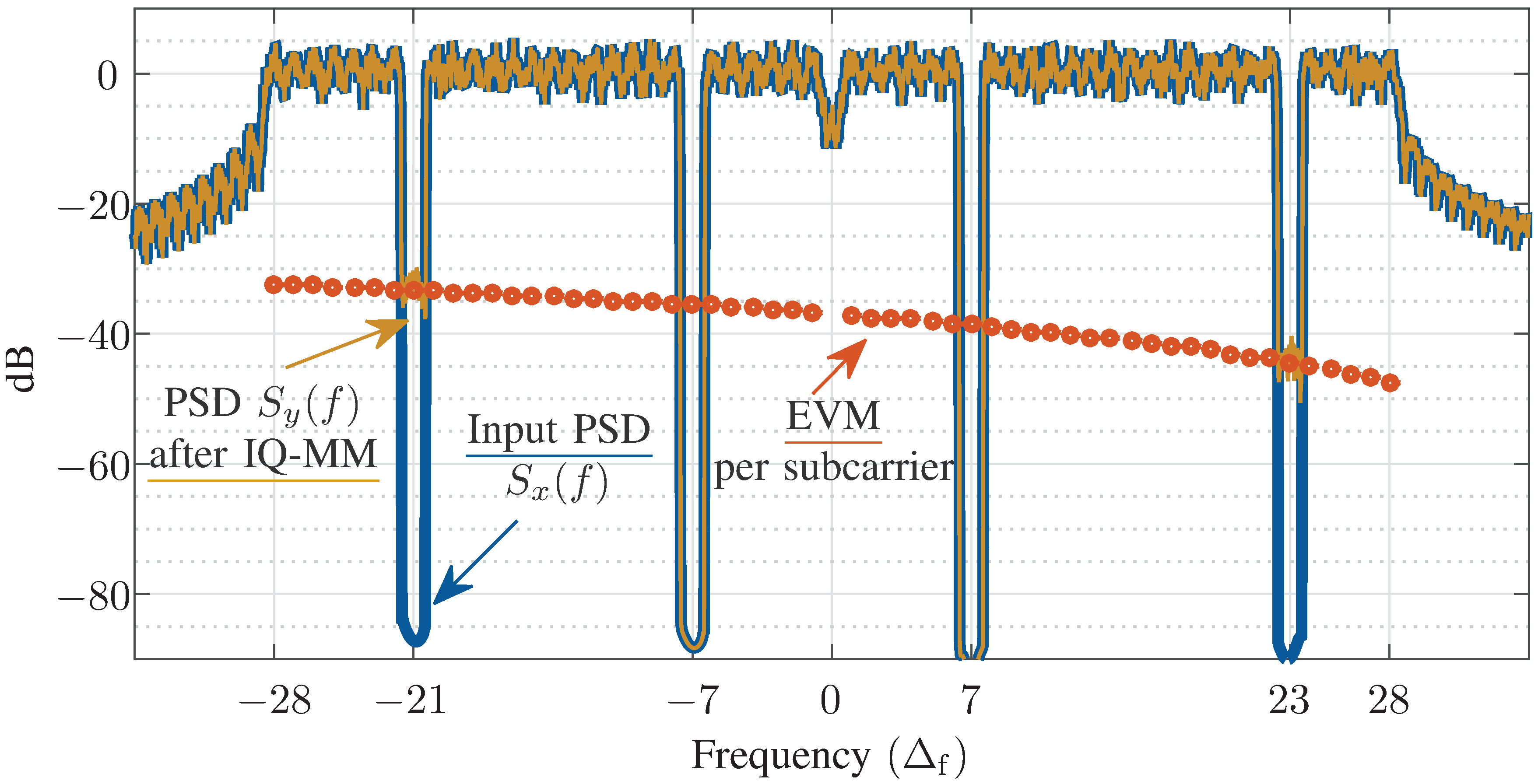

Radar sensors are increasingly utilized in today’s cars. This inevitably leads to increased mutual sensor interference and thus a performance decrease, potentially resulting in major safety risks. Understanding signal impairments caused by interference accurately helps to devise signal processing schemes to combat said performance degradation. For the FMCW radars prevalent in automotive applications, it has been shown that so-called non-coherent interference occurs frequently and results in an increase of the noise floor. In this work we investigate the impact of interference analytically by focusing on its detailed description. We show, among others, that the spectrum of the typical interference signal has a linear phase and a magnitude that is strongly fluctuating with the phase parameters of the time domain interference signal. Analytical results are verified by simulation, highlighting the dependence on the specific phase terms that cause strong deviations from spectral whiteness. Figure: The upper plot depicts an example of...

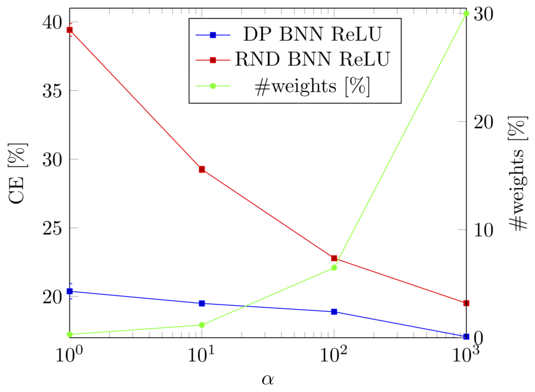

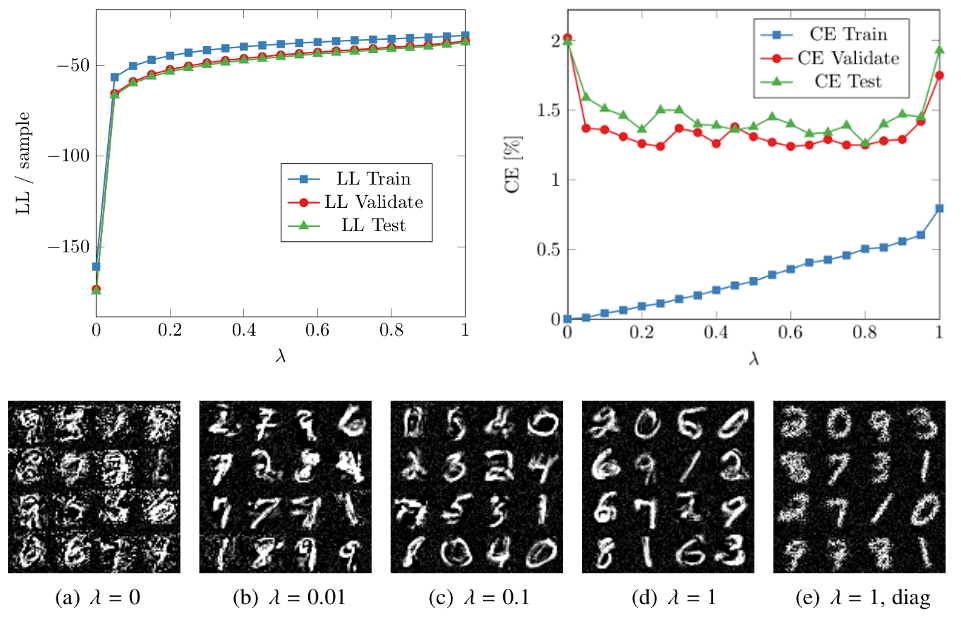

We extend feed-forward neural networks with a Dirichlet process prior over the weight distribution. This enforces a sharing on the network weights, which can reduce the overall number of parameters drastically. We alternately sample from the posterior of the weights and the posterior of assignments of network connections to the weights. This results in a weight sharing that is adopted to the given data. In order to make the procedure feasible, we present several techniques to reduce the computational burden. Experiments show that our approach mostly outperforms models with random weight sharing. Our model is capable of reducing the memory footprint substantially while maintaining a good performance compared to neural networks without weight sharing. Figure: The concentration parameter alpha of the Dirichlet processes can be used to trade-off between the classification error (CE) and the memory requirements of the model, i.e., how many weights are shared. Especially if only a...

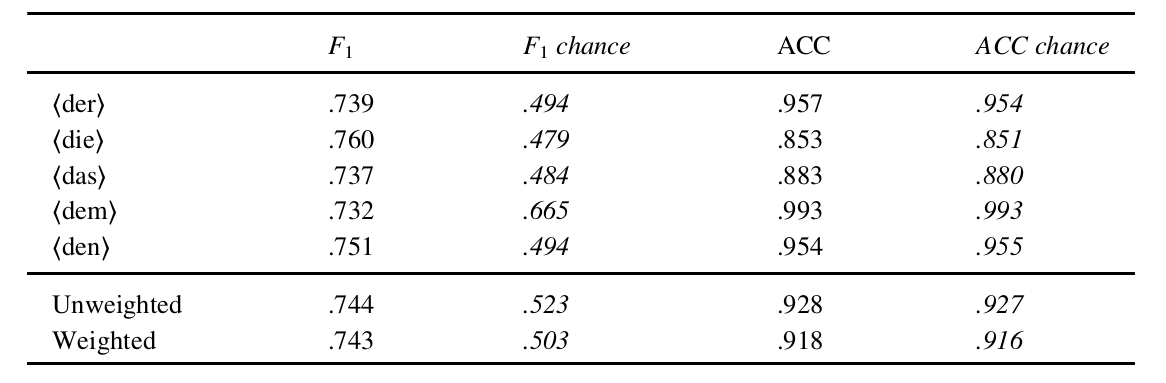

Homophones pose serious issues for automatic speech recognition (ASR) as they have the same pronunciation but different meanings or spellings. Homophone disambiguation is usually done within a stochastic language model or by an analysis of the homophonous word’s context. Whereas this method reaches good results in read speech, it fails in conversational, spontaneous speech, where utterances are often short, contain disfluencies and/or are realized syntactically incomplete. Phonetic studies have shown that words that are homophonous in read speech often differ in their phonetic detail in spontaneous speech. Whereas humans use phonetic detail to disambiguate homophones, this linguistic information is usually not explicitly incorporated into ASR systems. In this paper, we show that phonetic detail can be used to automatically disambiguate homophones using the example of German pronouns. In these example sentences, “der” fuctions as article (A) and as relative pronoun (B): (A) Hans, der Floh, hatte ein gutes Leben. John,...

Highly accurate indoor positioning is still a hard problem due to interference caused by multipath propagation and the resulting high complexity of the infrastructure. We focus on the possibility of exploiting information contained in specular multipath components (SMCs) to increase the positioning accuracy of the system and to reduce the required infrastructure, using a-priori information in form of a floor plan. The system utilizes a single anchor equipped with array antennas and wideband signals to allow separating the SMCs. We derive a closed form of the Cramér-Rao lower bound for array-based multipath-assisted positioning and examine the beneficial effect of spatial aliasing of antenna arrays on the achievable angular resolution and as a direct consequence onto the positioning accuracy. It is shown that ambiguities that arise due to the aliasing can be resolved by exploiting the information contained in SMCs. The theoretic results are validated by simulations. The figure illustrates the...

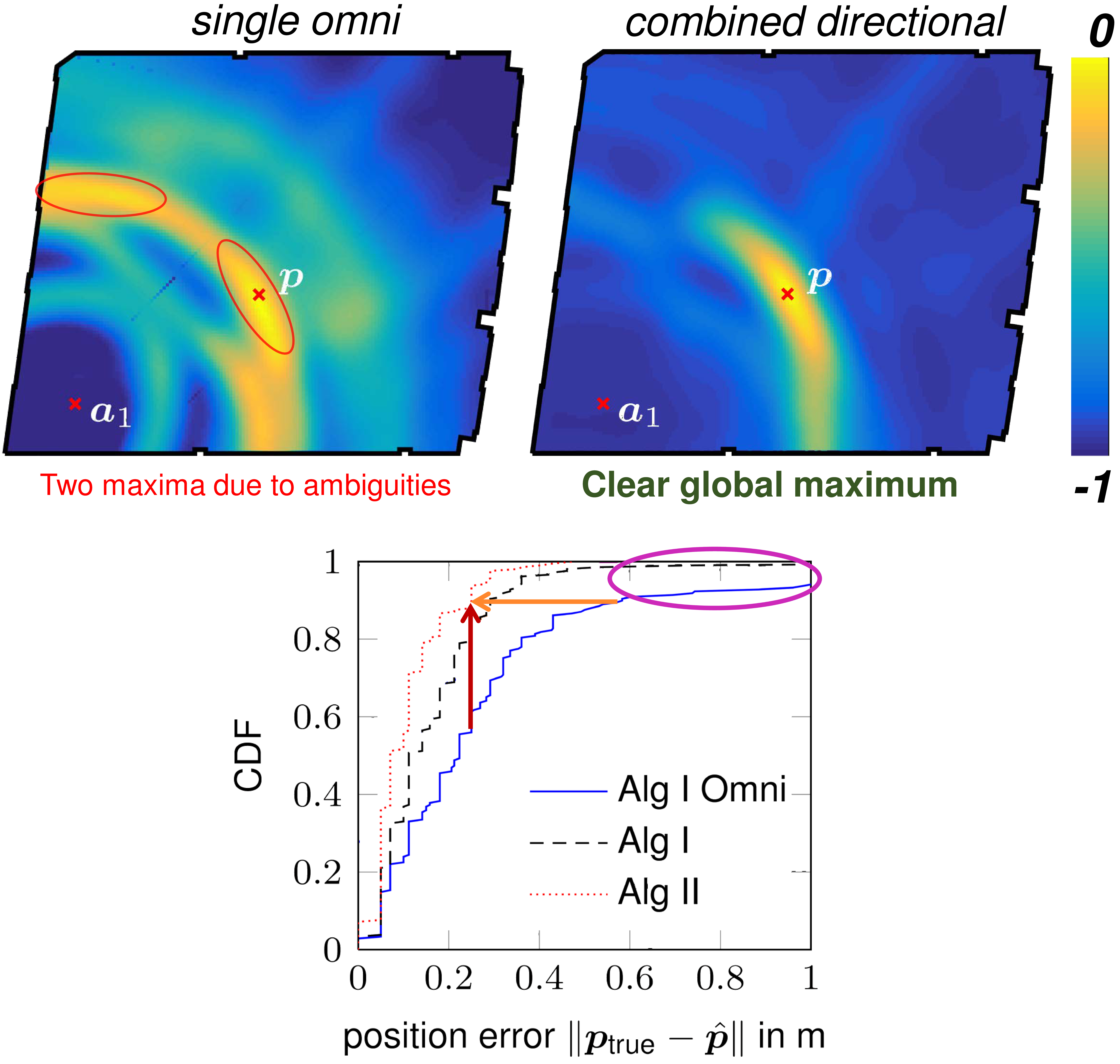

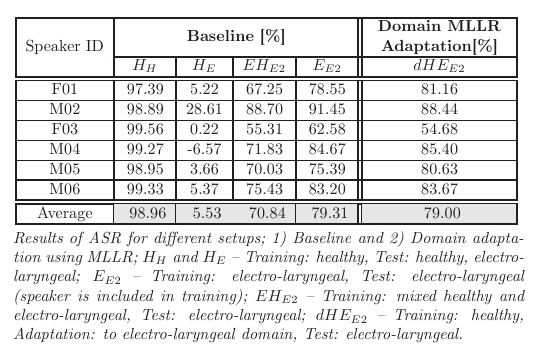

Setting up indoor localization systems is often excessively time-consuming and labor-intensive, because of the high amount of anchors to be carefully deployed or the burdensome collection of fingerprints. In this work, we present SALMA, a novel low-cost ultra-wideband-based indoor localization system that makes use of only one anchor with minimized calibration and training efforts. The system leverages the gained insights of our previous works, exploiting multipath reflections of radio signals to enhance positioning performance. To this end, only a crude floor plan is needed which enables SALMA to accurately determine the position of a mobile tag using a single anchor, hence minimizing the infrastructure costs, as well as the setup time. We implement SALMA on off-the-shelf UWB devices based on the Decawave DW1000 transceiver and show that, by making use of multiple directional antennas, SALMA can also resolve ambiguities due to overlapping multipath components. An experimental evaluation in an office...