On Representation Learning for Artificial Bandwidth Extension

- Published: Thu, Oct 01, 2015
- Tags: rotm

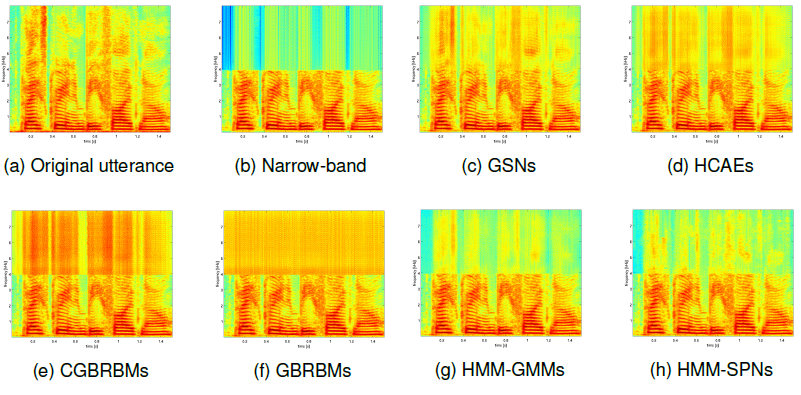

Recently, sum-product networks (SPNs) have shown convincing results on the ill-posed task of artificial bandwidth extension (ABE). However, SPNs are just one of many architectures that can be grouped under the term representation models. In this paper, using ABE as a benchmark task, we perform a comparative study of Gaussian-Bernoulli restricted Boltzmann machines (GBRBMs), conditional GBRBMs (CGBRBMs), higher-order contractive autoencoders (HCAEs), SPNs and generative stochastic networks (GSNs). GSNs in particular are promising architectures because of their reconstruction capabilities. Our experiments show impressive results for GSNs, which achieve average segmental SNR improvements over SPNs of 3.90 dB in the speaker-dependent (SD) scenario and 4.08 dB in the speaker-independent (SI) scenario.
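The results above are reported in segmental SNR. As a rough illustration of that metric, here is a minimal sketch of one common definition: the mean, over frames, of the per-frame SNR in dB between the full-bandwidth reference and the reconstructed signal. The frame length, the use of non-overlapping frames, and the clamping of each frame to [-10, 35] dB are our assumptions, not details taken from the paper, and `segmental_snr` is a hypothetical helper name.

```python
import numpy as np


def segmental_snr(reference, estimate, frame_len=256, eps=1e-10,
                  floor_db=-10.0, ceil_db=35.0):
    """Mean per-frame SNR (dB) of `estimate` against `reference`.

    Assumptions (not from the paper): non-overlapping frames of
    `frame_len` samples, the trailing partial frame is dropped, and
    each frame's SNR is clamped to [floor_db, ceil_db].
    """
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    if reference.shape != estimate.shape:
        raise ValueError("signals must have the same length")
    n_frames = len(reference) // frame_len
    if n_frames == 0:
        raise ValueError("signal is shorter than one frame")

    # Split both signals into (n_frames, frame_len), dropping leftover samples.
    ref = reference[: n_frames * frame_len].reshape(n_frames, frame_len)
    est = estimate[: n_frames * frame_len].reshape(n_frames, frame_len)

    # Per-frame signal energy and reconstruction-error energy.
    signal_energy = np.sum(ref ** 2, axis=1)
    error_energy = np.sum((ref - est) ** 2, axis=1)

    # Per-frame SNR in dB; eps avoids division by zero and log of zero.
    snr_db = 10.0 * np.log10((signal_energy + eps) / (error_energy + eps))
    snr_db = np.clip(snr_db, floor_db, ceil_db)
    return float(np.mean(snr_db))
```

Under this sketch, the improvement of one model over another is simply the difference between their segmental SNRs measured against the same full-bandwidth reference. Clamping keeps silent or perfectly reconstructed frames from dominating the average.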

The figure shows log-spectrograms of the utterance "Place green in b 5 now", spoken by speaker s20: (a) the original full-bandwidth signal; (b) the narrow-bandwidth signal; the signals recovered by frame-wise SD deep representation models, namely (c) GSNs, (d) HCAEs, (e) CGBRBMs and (f) GBRBMs; and the signals recovered by hybrid HMM models, namely (g) HMM-GMMs and (h) HMM-SPNs.
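Each panel of the figure is a log-spectrogram. A minimal NumPy sketch of how such a representation can be computed is shown here, assuming Hann-windowed frames of 512 samples with a 256-sample hop. These parameters and the helper name `log_spectrogram` are illustrative assumptions, not the settings used to produce the figure.

```python
import numpy as np


def log_spectrogram(x, frame_len=512, hop=256, eps=1e-10):
    """Log-magnitude spectrogram in dB with shape (n_freq_bins, n_frames).

    Assumed (illustrative) settings: Hann window of `frame_len`
    samples and a hop of `hop` samples between frame starts.
    """
    x = np.asarray(x, dtype=float)
    if len(x) < frame_len:
        raise ValueError("signal is shorter than one frame")
    n_frames = 1 + (len(x) - frame_len) // hop
    window = np.hanning(frame_len)

    # Stack windowed frames into an array of shape (n_frames, frame_len).
    frames = np.stack([x[i * hop: i * hop + frame_len] * window
                       for i in range(n_frames)])

    # One-sided spectrum per frame: frame_len // 2 + 1 frequency bins.
    spectrum = np.fft.rfft(frames, axis=1)

    # Magnitude in dB; transpose so frequency runs along the first axis.
    return (20.0 * np.log10(np.abs(spectrum) + eps)).T
```

Plotting the returned array with time on the horizontal axis and frequency on the vertical axis gives a display of the same kind as the panels. A narrow-bandwidth input would show little energy in the upper frequency bins, which is the region the ABE models have to fill in.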
