On phase estimation in single-channel speech enhancement and separation

- Published: Fri, Mar 01, 2013

- Tags: rotm

In many speech processing applications, the spectral amplitude is the dominant source of information, while the phase spectrum is not so widely used. In [6] we present an overview of why the speech phase spectrum has been neglected in the conventional techniques used in different applications, including speech enhancement and source separation. Methods for recovering a target speech signal from a single-channel recording fall into two groups: 1) single-channel speech separation, and 2) single-channel speech enhancement. While both groups have had some success, they frequently ignore phase estimation in their parameter estimation and signal reconstruction. Instead, they directly reuse the noisy signal phase when reconstructing the output signal, which leads to perceptual artifacts in the form of musical noise in speech enhancement and cross-talk in speech separation.
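To make the role of the reused noisy phase concrete, the following minimal sketch (Python with NumPy, not the code used in the cited papers) reconstructs a signal from the oracle clean amplitude combined with either the noisy phase or the clean phase. The toy two-tone signal, the white-noise level, the frame length, and the helper names `stft` and `istft` are all illustrative assumptions.

```python
import numpy as np

FRAME, HOP = 256, 128  # assumed frame length and 50% overlap

# Square root of a periodic Hann window: applied at analysis and synthesis,
# the overlapped squared windows sum to one.
WINDOW = np.sin(np.pi * np.arange(FRAME) / FRAME)

def stft(x):
    """Windowed short-time Fourier transform, one row per frame."""
    n_frames = 1 + (len(x) - FRAME) // HOP
    frames = np.stack([x[i * HOP:i * HOP + FRAME] for i in range(n_frames)])
    return np.fft.rfft(frames * WINDOW, axis=1)

def istft(spec):
    """Inverse STFT by windowed overlap-add."""
    frames = np.fft.irfft(spec, n=FRAME, axis=1) * WINDOW
    out = np.zeros(HOP * (len(frames) - 1) + FRAME)
    for i, frame in enumerate(frames):
        out[i * HOP:i * HOP + FRAME] += frame
    return out

rng = np.random.default_rng(0)
t = np.arange(8192) / 8000.0
# Toy stand-in for speech (assumption): two sinusoids in white noise.
clean = np.sin(2 * np.pi * 220 * t) + 0.5 * np.sin(2 * np.pi * 440 * t)
noisy = clean + 0.5 * rng.standard_normal(len(t))

X, Y = stft(clean), stft(noisy)
oracle_mag = np.abs(X)  # best-case amplitude estimate

# Conventional reconstruction: estimated amplitude with the noisy phase.
with_noisy_phase = istft(oracle_mag * np.exp(1j * np.angle(Y)))
# Reference: the same amplitude with the clean phase.
with_clean_phase = istft(oracle_mag * np.exp(1j * np.angle(X)))

# Skip the first and last hop, where only one window covers each sample.
core = slice(HOP, len(clean) - HOP)
err_noisy_phase = np.mean((with_noisy_phase[core] - clean[core]) ** 2)
err_clean_phase = np.mean((with_clean_phase[core] - clean[core]) ** 2)
print(err_noisy_phase, err_clean_phase)
```

Even with a perfect amplitude estimate, `err_noisy_phase` stays well above `err_clean_phase`, which is essentially zero; the gap is the part of the error that only a phase estimate can remove.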

To address the impact of phase on single-channel speech enhancement and separation algorithms, we recently studied the importance of phase information in two steps:

1) Amplitude estimation: Recent findings in [2] show that the phase plays a role in the amplitude estimation stage of single-channel speech enhancement and separation. In particular, prior information about the phase can be employed to derive a new phase-aware amplitude estimator in the parameter estimation stage of single-channel speech enhancement [2]. In [1,2], we showed that including the phase information improves single-channel source separation performance compared with previous phase-independent methods.

2) Signal reconstruction: We recently showed that replacing the noisy signal phase with an estimated one can lead to a considerable improvement in perceived signal quality in single-channel speech separation and speech enhancement (see [1,3,4]). The geometry-based phase estimation using a group delay deviation constraint proposed in [1] was extended to time-frequency constraints in [8]. More recently, a least-squares phase estimator was proposed in [7].
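The geometry behind both steps can be illustrated with a small sketch. It assumes the standard two-source model in a single time-frequency bin, y = a1·exp(jφ1) + a2·exp(jφ2), and uses the law of cosines to list the phase pairs that are consistent with the observed mixture and a given pair of amplitudes. This is an illustration under that assumption, not the estimator of [1]; the function name `candidate_phases` is hypothetical, and the constraint-based selection between the candidates is not implemented.

```python
import numpy as np

def candidate_phases(y, a1, a2):
    """Return the two (phi1, phi2) pairs with y = a1*e^{j phi1} + a2*e^{j phi2}.

    Assumes y, a1 and a2 are nonzero. The clip keeps arccos defined when the
    amplitudes slightly violate the triangle inequality (e.g. estimation error).
    """
    y_mag, y_phase = np.abs(y), np.angle(y)
    cos1 = np.clip((y_mag**2 + a1**2 - a2**2) / (2 * y_mag * a1), -1.0, 1.0)
    cos2 = np.clip((y_mag**2 + a2**2 - a1**2) / (2 * y_mag * a2), -1.0, 1.0)
    d1, d2 = np.arccos(cos1), np.arccos(cos2)
    # The two sources lie on opposite sides of the mixture phasor.
    return [(y_phase + d1, y_phase - d2), (y_phase - d1, y_phase + d2)]

# Toy bin (assumed values): two sources with known amplitudes and phases.
a1, phi1 = 1.0, 0.3
a2, phi2 = 0.7, -1.1
y = a1 * np.exp(1j * phi1) + a2 * np.exp(1j * phi2)

for p1, p2 in candidate_phases(y, a1, a2):
    rebuilt = a1 * np.exp(1j * p1) + a2 * np.exp(1j * p2)
    print(p1, p2, np.isclose(rebuilt, y))
```

Both pairs reproduce `y`, so the amplitudes alone cannot resolve the ambiguity; an additional constraint, such as the group delay deviation constraint mentioned for [1], is needed to choose between them.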

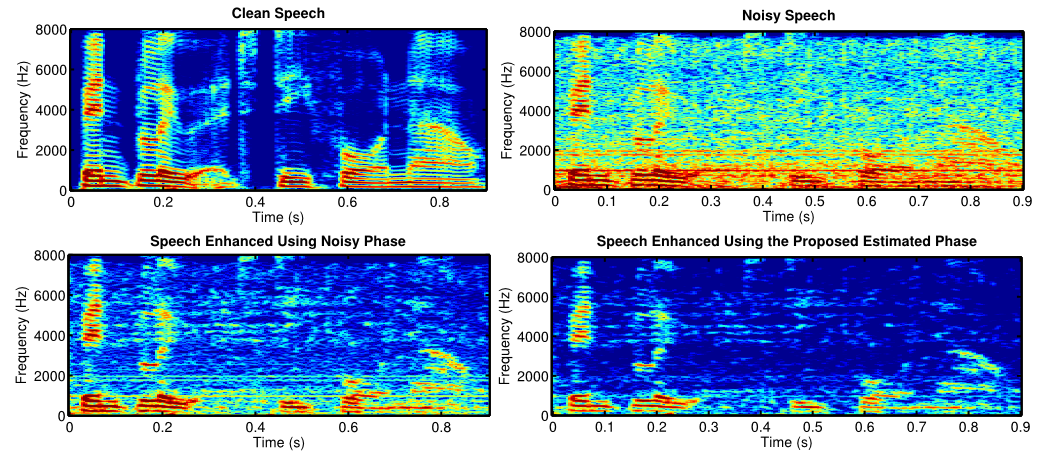

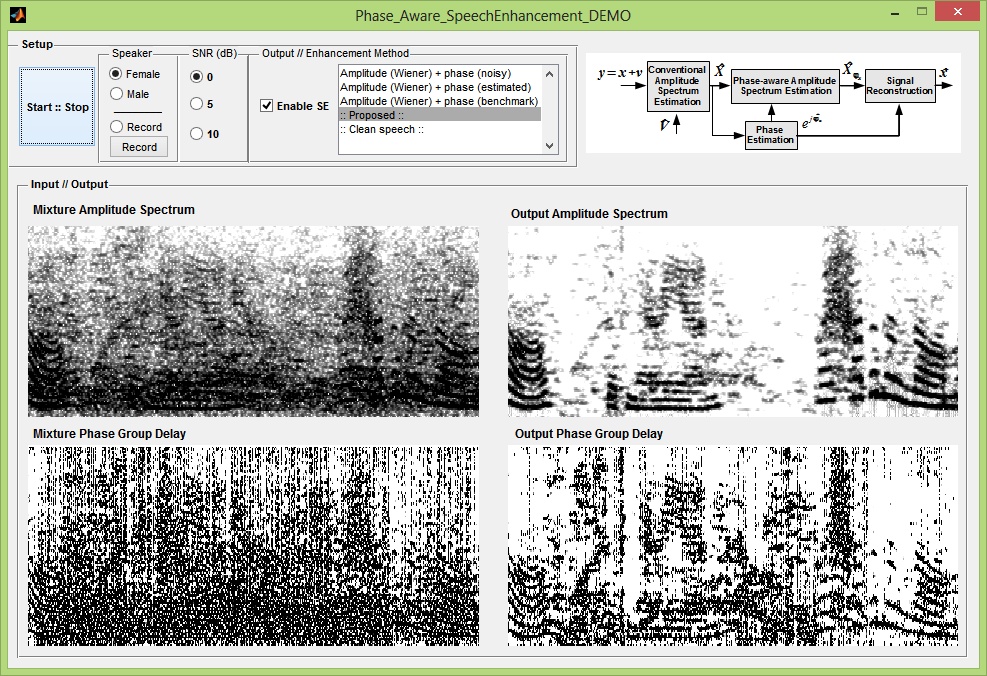

The combination of the two aforementioned steps was demonstrated in an open-loop configuration in a Show and Tell contribution at INTERSPEECH 2013 in Lyon, France, which won a Google award [4]. The demonstration aimed to show how the proposed fully phase-aware speech enhancement algorithm works in real time for different noise scenarios, with a direct comparison to state-of-the-art phase-insensitive methods. A closed-loop configuration containing the two steps is presented in [5]. The figure above shows an example comparing the spectrogram of the enhanced speech signal reconstructed with the noisy signal phase against that of the enhanced signal reconstructed with the proposed estimated phase. An improvement of 0.3 PESQ is obtained. Some audio demos are available here:

- Phase-aware amplitude estimation [Audio]

- Show and Tell demo [Video]

References

[1] P. Mowlaee, R. Saeidi, R. Martin, “Phase Estimation for Signal Reconstruction in Single-Channel Source Separation”, in Proc. 13th Annual Conference of the International Speech Communication Association, Interspeech 2012 [Audio | pdf].

[2] P. Mowlaee, R. Martin, “On Phase Importance in Parameter Estimation for Single-channel Source Separation”, Int. Workshop Acoust. Signal Enhancement (IWAENC), Sep. 2012 [pdf].

[3] M. K. Watanabe, P. Mowlaee, “Iterative Sinusoidal-based Partial Phase Reconstruction in Single-Channel Source Separation”, in Proc. 14th Annual Conference of the International Speech Communication Association, Interspeech 2013, pp. 832-836 [Audio | Link].

[4] P. Mowlaee, M. K. Watanabe, and R. Saeidi, “Show and Tell: Phase-Aware Single-Channel Speech Enhancement”, 14th Annual Conference of the International Speech Communication Association, Lyon, France, pp. 1872-1874, 2013 [Video | Link].

[5] P. Mowlaee, R. Saeidi, “Iterative Closed-Loop Phase-Aware Single-Channel Speech Enhancement”, IEEE Signal Processing Letters, Vol. 20, No. 12, pp. 1235-1239, Dec. 2013 (DOI 10.1109/LSP.2013.2286748) [Audio | Link | pdf].

[6] P. Mowlaee, R. Saeidi, Y. Stylianou, “INTERSPEECH 2014 Special Session on Phase Importance in Speech Processing”, 15th Annual Conference of the International Speech Communication Association, Singapore [Link | Paper].

[7] C. Chacon, P. Mowlaee, “Least Squares Phase Estimation of Mixed Signals”, 15th Annual Conference of the International Speech Communication Association, Singapore [paper].

[8] P. Mowlaee, R. Saeidi, “Time-Frequency Constraints for Phase Estimation in Single-Channel Speech Enhancement”, The International Workshop on Acoustic Signal Enhancement (IWAENC 2014) [paper].
