Virtual Adversarial Training Applied to Neural Higher-Order Factors for Phone Classification

- Published: Thu, Sep 01, 2016
- Tags: rotm

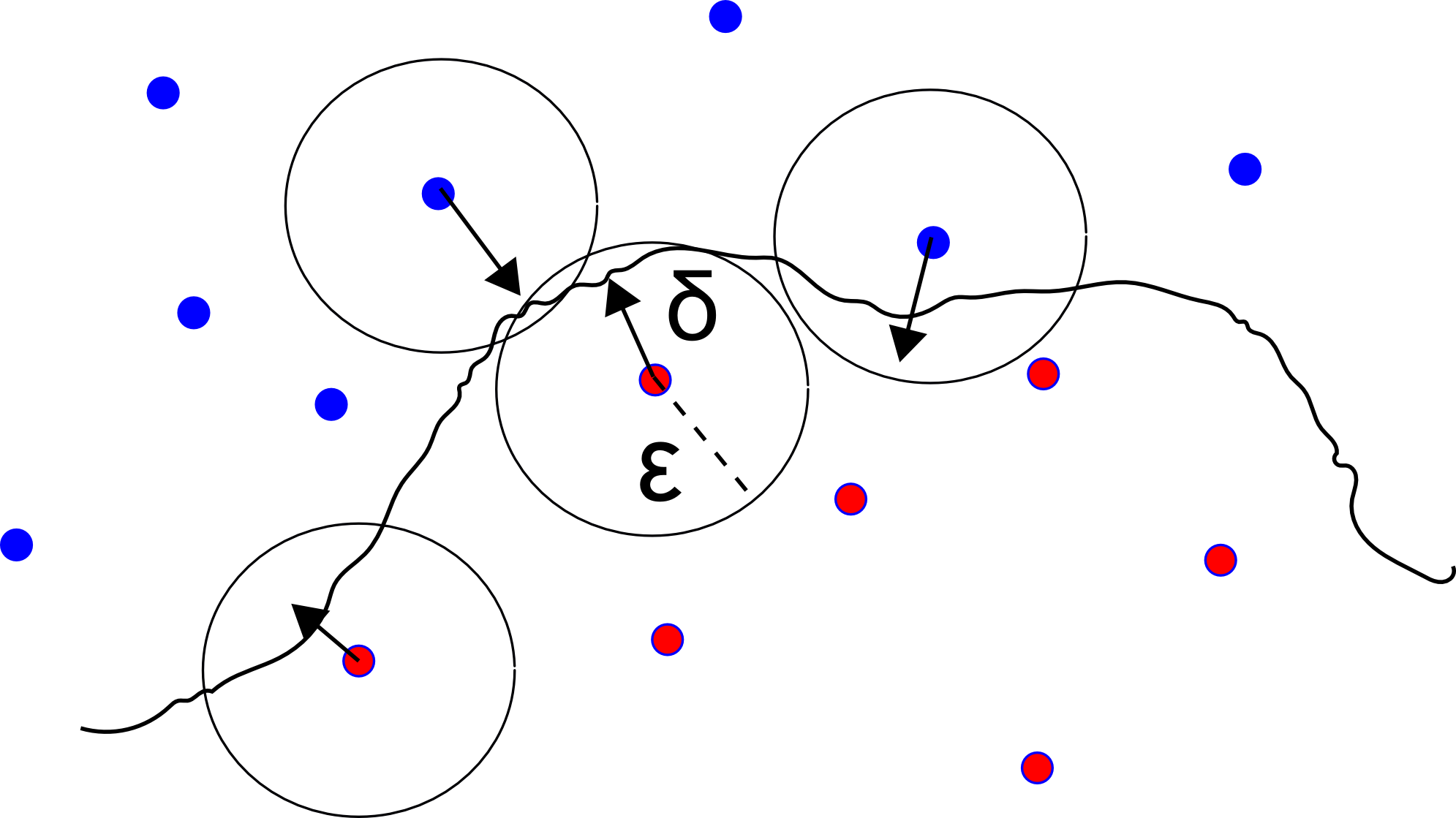

We explore virtual adversarial training (VAT) applied to neural higher-order conditional random fields for sequence labeling. VAT is a recently introduced regularization method that promotes local distributional smoothness: it counteracts the problem that the predictions of many state-of-the-art classifiers are unstable under adversarial perturbations. Unlike random noise, adversarial perturbations are minimal, bounded perturbations that flip the predicted label. We use VAT to regularize neural higher-order factors in conditional random fields. Such factors are important, for example, in phone classification, where the representation of a phone depends strongly on its context phones. Without VAT regularization, however, the use of these factors was limited because they were prone to overfitting. In extensive experiments, we successfully apply VAT to improve performance on the TIMIT phone classification task. In particular, we achieve a phone error rate of 13.0%, outperforming the previous state of the art by a wide margin.
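To make the VAT idea more concrete, here is a minimal sketch of the generic VAT smoothness penalty. It assumes a hypothetical toy linear softmax classifier, not the neural higher-order CRF factors used in the paper. The names `predict` and `vat_penalty` and the settings `eps` (perturbation norm), `xi` (probe step) and `n_power` (power-iteration steps) are illustrative assumptions. The recipe treats the current prediction p(y|x) as a fixed "virtual" label, estimates the norm-bounded direction r that most increases KL(p(y|x) || p(y|x+r)) with power iteration, and returns that KL as the penalty.

```python
import math
import random


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def predict(W, b, x):
    # Hypothetical toy model: p(y | x) = softmax(W x + b), one row of W per class.
    z = [sum(w * xj for w, xj in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(z)


def kl(p, q):
    # KL(p || q) for discrete distributions; classes with p_k == 0 contribute 0.
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q) if pk > 0)


def unit(v):
    # Scale v to unit L2 norm (a zero vector is returned unchanged).
    n = math.sqrt(sum(t * t for t in v))
    return [t / n for t in v] if n > 0 else list(v)


def grad_kl_wrt_input(W, p, q):
    # For q = softmax(W (x + r) + b) and a fixed target p,
    # the gradient of KL(p || q) with respect to r is W^T (q - p).
    diff = [qk - pk for qk, pk in zip(q, p)]
    return [sum(W[k][i] * diff[k] for k in range(len(W))) for i in range(len(W[0]))]


def vat_penalty(W, b, x, eps=0.5, xi=1e-6, n_power=1, seed=0):
    """Return (r_vadv, KL(p(.|x) || p(.|x + r_vadv))).

    p(.|x) is held fixed as the "virtual" label, so no true label y is needed.
    """
    rng = random.Random(seed)
    p = predict(W, b, x)
    d = unit([rng.gauss(0.0, 1.0) for _ in x])  # random unit start direction
    for _ in range(n_power):
        # Power iteration: probe at x + xi * d and follow the KL gradient.
        q = predict(W, b, [a + xi * c for a, c in zip(x, d)])
        d = unit(grad_kl_wrt_input(W, p, q))
    r_vadv = [eps * c for c in d]  # L2 norm eps, unless the gradient vanished
    q_adv = predict(W, b, [a + c for a, c in zip(x, r_vadv)])
    return r_vadv, kl(p, q_adv)


# Usage: the two classes differ only along feature 0, so feature 1 cannot
# change the prediction and the perturbation should lie along feature 0.
W = [[1.0, 0.0], [-1.0, 0.0]]
b = [0.0, 0.0]
x = [0.2, 0.3]
r, penalty = vat_penalty(W, b, x)
print(r, penalty)
```

In training, a penalty like this would be averaged over examples (labeled or unlabeled) and added to the usual loss with a weighting factor, treating p(y|x) and r_vadv as constants when differentiating with respect to the model parameters. The analytic gradient W^T(q - p) is specific to this linear toy model; a neural model would obtain it by backpropagation.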

We will give an oral presentation at Interspeech 2016.

More information can be found in our paper.
