Recurrent Dilated DenseNets for a Time-Series Segmentation Task

- Published: Wed, Jan 01, 2020

- Tags: rotm

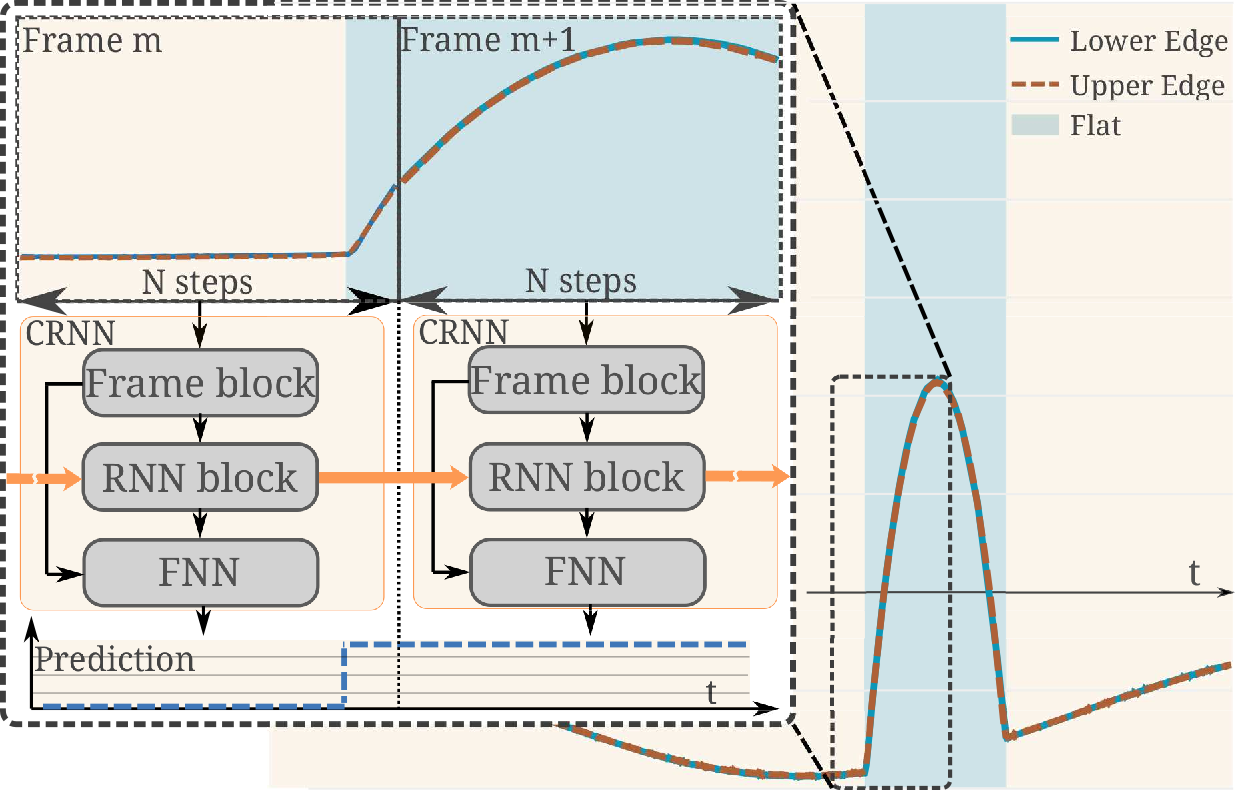

Efficient real-time segmentation and classification of time-series data is key in many applications, including sound and measurement analysis. We propose an efficient convolutional recurrent neural network (CRNN) architecture that delivers better segmentation performance at lower computational cost than plain RNN methods. We develop a CNN architecture that uses dilated DenseNet-like kernels and integrate it into the proposed CRNN architecture. For the task of online wafer-edge measurement analysis, we compare our proposed methods with standard RNN methods such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs). We focus on small models with low computational complexity so that they can run on an embedded device. We show that frame-based methods generally outperform RNNs in our segmentation task and that the proposed recurrent dilated DenseNet improves the F1-score by over 1.1 % compared to other frame-based methods.
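To illustrate what "dilated DenseNet-like kernels" can mean for a 1D signal, here is a minimal NumPy sketch of a causal dilated dense block: every layer receives the concatenation of the block input and all earlier layer outputs, and the dilation doubles from layer to layer. This is not the authors' implementation. The kernel size of 3, the growth rate of 4 channels per layer, the ReLU activation, the dilation schedule 1, 2, 4 and the function names `dilated_conv1d`, `dilated_dense_block` and `init_block` are all illustrative assumptions, and the weights are random rather than trained.

```python
import numpy as np


def dilated_conv1d(x, w, b, dilation):
    """Causal 1D dilated convolution (hypothetical helper).

    x: (c_in, time), w: (c_out, c_in, kernel), b: (c_out,) -> (c_out, time).
    Left zero-padding ensures output n only depends on inputs at times <= n.
    """
    _, _, k = w.shape
    pad = (k - 1) * dilation
    xp = np.pad(x, ((0, 0), (pad, 0)))
    t = x.shape[1]
    y = np.zeros((w.shape[0], t))
    for j in range(k):
        # Tap j looks back (k - 1 - j) * dilation samples.
        start = j * dilation
        y += w[:, :, j] @ xp[:, start:start + t]
    return y + b[:, None]


def dilated_dense_block(x, params):
    """Dense connectivity: each layer sees the input plus all earlier outputs."""
    features = [x]
    for w, b, dilation in params:
        inp = np.concatenate(features, axis=0)
        out = np.maximum(dilated_conv1d(inp, w, b, dilation), 0.0)  # ReLU
        features.append(out)
    return np.concatenate(features, axis=0)


def init_block(c_in, growth, n_layers, kernel=3, seed=0):
    """Random (untrained) parameters; dilation doubles per layer: 1, 2, 4, ..."""
    rng = np.random.default_rng(seed)
    params, c = [], c_in
    for i in range(n_layers):
        w = rng.normal(0.0, 1.0 / np.sqrt(c * kernel), size=(growth, c, kernel))
        params.append((w, np.zeros(growth), 2 ** i))
        c += growth  # the next layer also receives this layer's output
    return params


x = np.random.default_rng(1).normal(size=(2, 64))  # 2 channels, 64 samples
params = init_block(c_in=2, growth=4, n_layers=3)
y = dilated_dense_block(x, params)
print(y.shape)  # (14, 64): 2 input channels + 3 layers x 4 channels
```

With kernel size 3 and dilations 1, 2, 4, the deepest path in this sketch covers 1 + 2·(1 + 2 + 4) = 15 samples, while the dense connections keep the shorter-range features of earlier layers available to the output, which is one way a small block can mix several time scales cheaply.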

Figure: Principle of the recurrent dilated DenseNet architecture for wafer-edge segmentation. The network has three main components: the frame block containing the dilated dense block, the RNN block, and the feedforward (FNN) layers that produce the final prediction. By combining information from previous frames with that of the current frame, the network efficiently predicts every time step within the frame using information from several time scales.
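The data flow in the figure can be sketched in NumPy as follows, under several assumptions that are not taken from the work itself: a single linear projection with `tanh` stands in for the dilated dense frame block, the RNN block is a GRU cell fed with the mean-pooled frame features, and the FNN head receives each time step's features concatenated with the hidden state carried over from previous frames. The class name `TinyRecurrentSegmenter`, all layer sizes and the frame length of 64 are hypothetical, and the weights are random, so the predicted labels are meaningless; only the shapes and the frame-to-frame state handling are illustrated.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


class TinyRecurrentSegmenter:
    """Hypothetical frame block -> GRU -> per-time-step FNN pipeline (untrained)."""

    def __init__(self, c_in, n_feat, n_hidden, n_fnn, n_classes, seed=0):
        rng = np.random.default_rng(seed)

        def g(*shape):
            return rng.normal(0.0, 0.3, size=shape)

        # Placeholder frame encoder (stands in for the dilated dense block).
        self.W_enc = g(n_feat, c_in)
        # GRU weights for update (z), reset (r) and candidate (n) gates.
        self.W = {k: g(n_hidden, n_feat) for k in "zrn"}
        self.U = {k: g(n_hidden, n_hidden) for k in "zrn"}
        self.b = {k: np.zeros(n_hidden) for k in "zrn"}
        # FNN head applied independently at every time step.
        self.W1 = g(n_fnn, n_feat + n_hidden)
        self.W2 = g(n_classes, n_fnn)
        self.h = np.zeros(n_hidden)  # summary of all previous frames

    def gru_step(self, x, h):
        z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])
        r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])
        n = np.tanh(self.W["n"] @ x + self.U["n"] @ (r * h) + self.b["n"])
        return (1.0 - z) * n + z * h

    def process_frame(self, frame):
        """frame: (c_in, L) -> predicted class index for each of the L time steps."""
        feats = np.tanh(self.W_enc @ frame)                  # (n_feat, L)
        length = frame.shape[1]
        context = np.repeat(self.h[:, None], length, axis=1)  # previous frames
        hidden = np.maximum(self.W1 @ np.concatenate([feats, context]), 0.0)
        logits = self.W2 @ hidden                            # (n_classes, L)
        self.h = self.gru_step(feats.mean(axis=1), self.h)   # carry frame forward
        return logits.argmax(axis=0)


model = TinyRecurrentSegmenter(c_in=2, n_feat=8, n_hidden=6, n_fnn=16, n_classes=3)
stream = np.random.default_rng(1).normal(size=(2, 256))
frame_len = 64
labels = np.concatenate([model.process_frame(stream[:, i:i + frame_len])
                         for i in range(0, stream.shape[1], frame_len)])
print(labels.shape)  # (256,)
```

In this sketch the recurrent update runs once per frame rather than once per sample, so the per-sample work is limited to the frame encoder and the small FNN head; this is one plausible reading of how a frame-based CRNN can stay cheaper than a sample-by-sample RNN.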
