Variational Inference in Neural Networks using an Approximate Closed-Form Objective

- Published: Wed, Feb 01, 2017
- Tags: rotm

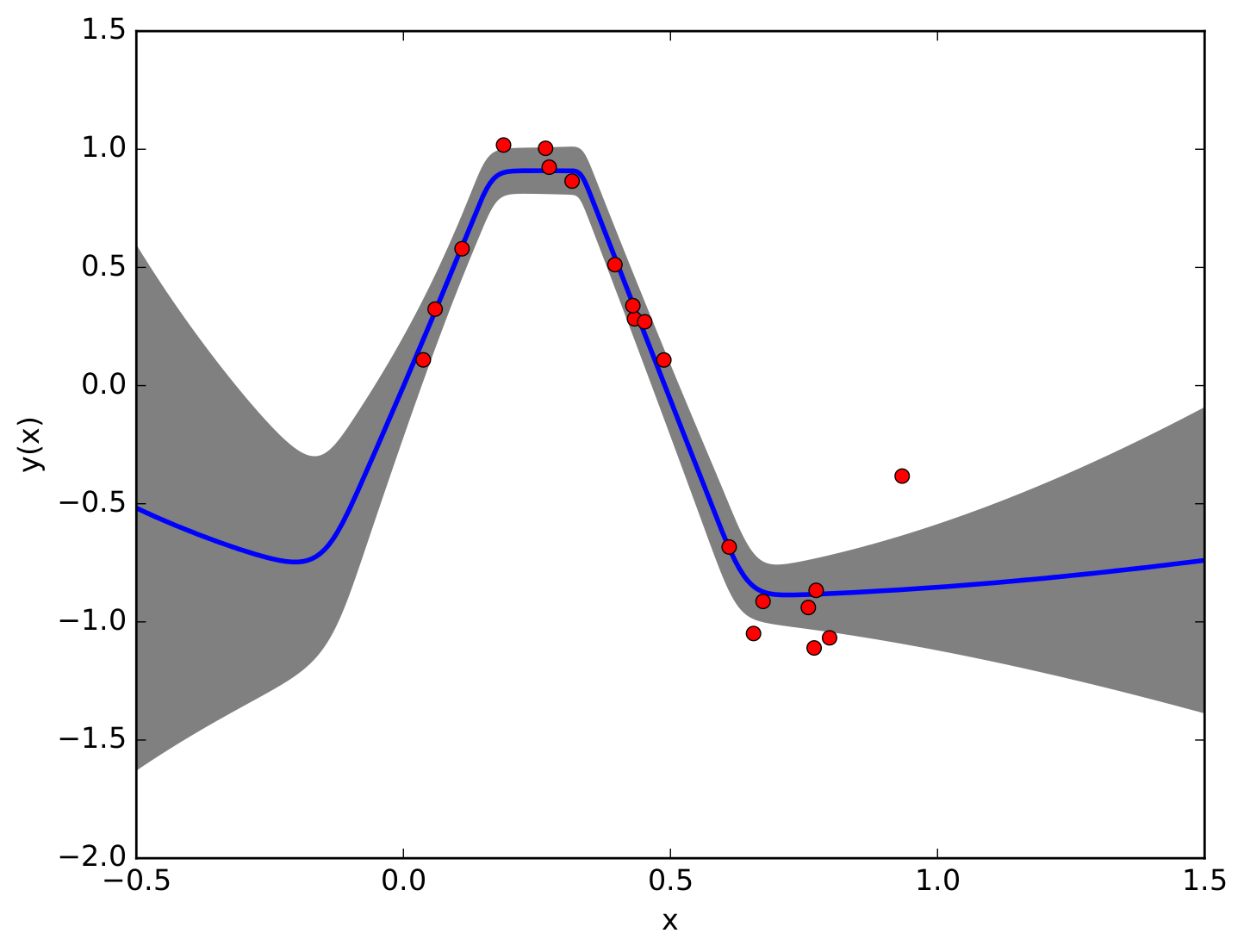

We propose a closed-form approximation of the intractable KL divergence objective for variational inference in neural networks. The approximation is based on a probabilistic forward pass in which we successively propagate probability distributions through the network. Unlike existing variational inference schemes, which typically rely on stochastic gradients that often suffer from high variance, our method has a closed-form gradient. Furthermore, the probabilistic forward pass inherently computes expected predictions together with uncertainty estimates at the outputs. In experiments, we show that our model improves the performance of plain feed-forward neural networks. Moreover, we show that our closed-form approximation compares well to model averaging and that our model produces reasonable uncertainties in regions where no data is observed.

Figure: Standard neural networks compute only a point estimate y(x) for a given input x (blue line). Our model additionally produces uncertainties that show how confident the model is in its prediction (shaded region). The uncertainties are larger in regions where no data is observed. For more information, see our recent NIPS (workshop) paper.
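To make the idea of a probabilistic forward pass concrete, here is a minimal sketch of one common way to propagate moments through a network. It assumes, for illustration only, that every weight is an independent Gaussian, that each activation is summarized by its mean and variance, and that hidden units use ReLU; the exact parameterization used in the paper may differ. Under these assumptions a linear layer's output moments follow from sums of independent products, and a ReLU applied to a Gaussian input has a closed-form mean and variance. All function names and the toy weights are hypothetical, and `monte_carlo_moments` stands in for model averaging by sampling weights and averaging ordinary forward passes.

```python
import math
import random

# Hypothetical toy network (1 input -> 2 ReLU units -> 1 output).
# Each weight is an independent Gaussian: mean in *_MEAN, variance in *_VAR.
W1_MEAN, W1_VAR, B1 = [[1.0], [-0.5]], [[0.1], [0.2]], [0.0, 0.3]
W2_MEAN, W2_VAR, B2 = [[0.8, -1.2]], [[0.05, 0.05]], [0.1]


def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear_moments(mu_in, var_in, w_mean, w_var, b):
    """Mean/variance of z_i = sum_j w_ij * a_j + b_i, assuming all w_ij and a_j
    are mutually independent and the bias b_i is deterministic."""
    mu_out, var_out = [], []
    for m_row, s_row, b_i in zip(w_mean, w_var, b):
        mu, var = b_i, 0.0
        for m, s, mu_a, v_a in zip(m_row, s_row, mu_in, var_in):
            mu += m * mu_a
            # Var(w * a) for independent w, a: m^2 v_a + s mu_a^2 + s v_a
            var += m * m * v_a + s * mu_a * mu_a + s * v_a
        mu_out.append(mu)
        var_out.append(var)
    return mu_out, var_out


def relu_moments(mu_in, var_in):
    """Mean/variance of max(0, z) for z ~ N(mu, var) (rectified Gaussian)."""
    mu_out, var_out = [], []
    for mu, var in zip(mu_in, var_in):
        if var <= 0.0:  # deterministic input: plain ReLU
            mu_out.append(max(mu, 0.0))
            var_out.append(0.0)
            continue
        sigma = math.sqrt(var)
        cdf, pdf = norm_cdf(mu / sigma), norm_pdf(mu / sigma)
        mean = mu * cdf + sigma * pdf
        second = (mu * mu + var) * cdf + mu * sigma * pdf
        mu_out.append(mean)
        var_out.append(max(second - mean * mean, 0.0))
    return mu_out, var_out


def predict_moments(x):
    """Probabilistic forward pass: propagate (mean, variance) layer by layer."""
    mu, var = linear_moments([x], [0.0], W1_MEAN, W1_VAR, B1)
    mu, var = relu_moments(mu, var)
    mu, var = linear_moments(mu, var, W2_MEAN, W2_VAR, B2)
    return mu[0], var[0]


def monte_carlo_moments(x, n_samples=100_000, seed=0):
    """Model averaging baseline: sample weights, run ordinary forward passes."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_samples):
        hidden = []
        for (m,), (s,), b in zip(W1_MEAN, W1_VAR, B1):
            hidden.append(max(rng.gauss(m, math.sqrt(s)) * x + b, 0.0))
        y = B2[0] + sum(rng.gauss(m, math.sqrt(s)) * h
                        for m, s, h in zip(W2_MEAN[0], W2_VAR[0], hidden))
        outputs.append(y)
    mean = sum(outputs) / n_samples
    var = sum((y - mean) ** 2 for y in outputs) / (n_samples - 1)
    return mean, var


if __name__ == "__main__":
    print("closed form :", predict_moments(1.0))
    print("Monte Carlo :", monte_carlo_moments(1.0))
```

For this toy network, with a single hidden layer and a deterministic input, the propagated mean and variance happen to be exact, so the two printed pairs should agree up to sampling noise. In deeper networks, hidden units share inputs and become correlated; a factorized pass like this one ignores those correlations and treats each pre-activation as Gaussian, which is where approximation error enters. The closed-form pass needs a single sweep, while the sampling baseline needs many forward passes to reach comparable accuracy.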
