On Efficient Uncertainty Estimation for Resource-Constrained Mobile Applications

- Published: Tue, Feb 01, 2022
- Tags: rotm


Uncertainty estimation and out-of-distribution robustness are vital aspects of modern deep learning. Predictive uncertainty supplements model predictions and improves downstream tasks, including those in resource-constrained embedded and mobile applications. Popular examples are virtual reality (VR), augmented reality (AR), sensor fusion, perception, and health applications such as fitness indicators, arrhythmia detection, and skin lesion detection. Robust and reliable predictions with uncertainty estimates are increasingly important when operating on noisy in-the-wild data from sensory inputs. A large variety of deep learning architectures have been applied to various tasks with great success in terms of prediction quality. However, producing reliable and robust uncertainty estimates without additional computational and memory overhead remains a challenge. This issue is further aggravated by the limited computational and memory budget available in typical battery-powered mobile devices.

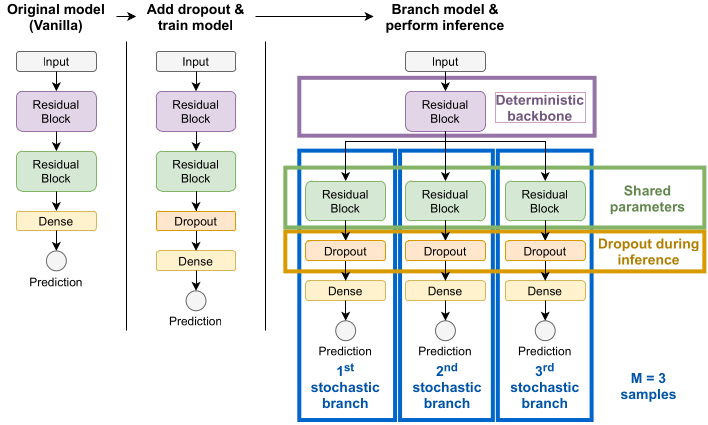

In this paper, we investigate more resource-efficient methods for uncertainty estimation that also provide good performance and robustness under dataset shifts. We extend Monte Carlo Dropout (MCDO) and propose the following adaptations: (i) the use of spatial dropout instead of randomly dropping individual activations during inference, (ii) contrastive dropout rates during training and inference, and (iii) branched MCDO. The first two modifications, spatial dropout and contrastive dropout rates, aim to diversify MCDO samples and consequently improve robustness to out-of-distribution data. Branched MCDO, in turn, reduces latency by sharing a common backbone for the deterministic portion of the model and running parallel branches, one for each replica of the stochastic portion of the model. We evaluate our method on two datasets and show that we can reach or even outperform the baseline in terms of predictive performance with almost half its latency. This enables time-sensitive applications on mobile platforms to leverage uncertainty estimation.
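The difference between dropping individual activations and spatial dropout can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the function names, the `(batch, channels, height, width)` layout, the inverted-dropout scaling, and the rate of 0.5 are all assumptions chosen for illustration.

```python
import numpy as np

def standard_dropout(x, rate, rng):
    """Drop individual activations independently (inverted-dropout scaling)."""
    keep = 1.0 - rate
    mask = rng.random(x.shape) < keep
    return x * mask / keep

def spatial_dropout(x, rate, rng):
    """Drop whole feature maps: one Bernoulli draw per (sample, channel).

    x is assumed to have shape (batch, channels, height, width).
    """
    keep = 1.0 - rate
    # The mask broadcasts over height and width, so a channel is kept or
    # dropped as a whole.
    mask = rng.random((x.shape[0], x.shape[1], 1, 1)) < keep
    return x * mask / keep

rng = np.random.default_rng(0)
x = np.ones((2, 8, 4, 4))  # hypothetical activation tensor
y_standard = standard_dropout(x, rate=0.5, rng=rng)
y_spatial = spatial_dropout(x, rate=0.5, rng=rng)

# Flatten the spatial dimensions to inspect each channel separately.
per_channel = y_spatial.reshape(2, 8, -1)
```

With spatial dropout every channel in `per_channel` is either entirely zero or entirely rescaled, whereas `y_standard` mixes kept and dropped values within a channel. A contrastive-rate setup would simply pass a different `rate` at inference than the one used in training; the specific values are not given in this summary.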

Figure: A high-level diagram of the branched MCDO principle with three branches and shared parameters. During inference, the common, deterministic backbone is computed once and cached. Its output is fed to each branch, where a branch is one replica of the stochastic portion of the network, and the branches are processed in parallel.
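The branched principle described in the caption can be sketched as follows. This is a toy example under stated assumptions, not the architecture from the paper: the single-layer backbone, the linear head, the layer sizes, the dropout rate, and the use of predictive entropy as the uncertainty measure are all hypothetical. Parallel branches are emulated with one batched matrix multiplication.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def backbone(x, w_backbone):
    """Deterministic shared part: evaluated once per input."""
    return np.maximum(x @ w_backbone, 0.0)

def branched_mcdo(x, w_backbone, w_head, n_branches, rate, rng):
    """Return mean class probabilities and predictive entropy per input."""
    features = backbone(x, w_backbone)          # (batch, hidden), computed once
    keep = 1.0 - rate
    # One independent dropout mask per branch; head weights are shared.
    masks = rng.random((n_branches,) + features.shape) < keep
    dropped = features[None] * masks / keep     # (branches, batch, hidden)
    probs = softmax(dropped @ w_head)           # (branches, batch, classes)
    mean = probs.mean(axis=0)                   # average over branches
    entropy = -(mean * np.log(np.clip(mean, 1e-12, 1.0))).sum(axis=-1)
    return mean, entropy

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 16))                    # hypothetical input batch
w_backbone = 0.1 * rng.normal(size=(16, 32))    # hypothetical weights
w_head = 0.1 * rng.normal(size=(32, 3))
mean, entropy = branched_mcdo(x, w_backbone, w_head, n_branches=3, rate=0.5, rng=rng)
```

In plain MCDO the whole network would be run once per sample. Here only the head is replicated, so the backbone cost is paid once regardless of `n_branches`.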

The full version of this paper can be found at https://arxiv.org/abs/2111.09838.
