Resource-Efficient Deep Neural Networks for Automotive Radar Interference Mitigation

- Published: Sat, May 01, 2021

- Tags: rotm

Radar sensors are crucial for the environment perception of driver assistance systems and autonomous vehicles. Their key advantages are robustness to adverse weather and the ability to measure velocity directly. With a rising number of radar sensors and the so-far unregulated automotive radar frequency band, mutual interference is inevitable and must be dealt with. Algorithms and models that operate on radar data in early processing stages must run directly on specialized hardware, i.e., the radar sensor itself. Such hardware typically has strict resource constraints, namely low memory capacity and low computational power.

Convolutional neural network (CNN)-based approaches to denoising and interference mitigation achieve promising results in radar processing. However, these models typically contain millions of parameters, stored in hundreds of megabytes of memory, and require additional memory for intermediate activations during execution.

In this paper, we investigate quantization techniques for CNN-based denoising and interference mitigation of radar signals. We analyze the quantization potential of CNN-based models of different architectures and sizes by considering (i) quantized weights and (ii) piecewise constant activation functions, which reduce the memory required for model storage and during inference, respectively.
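To make (i) and (ii) concrete, here is a minimal sketch of one common way to realize them, assuming symmetric uniform weight quantization with a single per-tensor scale and a clipped ReLU rounded to a fixed number of levels. The function names (`quantize_weights`, `dequantize`, `quantized_relu`) and the clipping bound `x_max` are hypothetical illustrations, not the paper's exact scheme.

```python
def quantize_weights(weights, num_bits):
    """Symmetric uniform quantization with one scale per tensor (an assumption)."""
    q_max = 2 ** (num_bits - 1) - 1                 # e.g. 127 for signed 8-bit codes
    max_abs = max(abs(w) for w in weights) or 1.0   # avoid division by zero
    scale = max_abs / q_max
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale                             # small integer codes plus one float

def dequantize(codes, scale):
    """Recover approximate real-valued weights from the integer codes."""
    return [c * scale for c in codes]

def quantized_relu(x, num_bits, x_max):
    """Piecewise constant activation: clip to [0, x_max], then snap to one of
    2**num_bits equally spaced levels, so each output fits in num_bits bits."""
    step = x_max / (2 ** num_bits - 1)
    clipped = min(max(x, 0.0), x_max)
    return round(clipped / step) * step

# Usage: 8-bit weights, 2-bit activations (values chosen for illustration only).
weights = [0.42, -0.07, 0.91, -0.88]
codes, scale = quantize_weights(weights, num_bits=8)
restored = dequantize(codes, scale)
activation_levels = {quantized_relu(v / 100, 2, 1.0) for v in range(-50, 151)}
```

Storing `codes` instead of 32-bit floats shrinks the model file, while the activation function's small, fixed set of output values is what lets intermediate feature maps be held in fewer bits at inference time.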

We compare two quantization techniques for CNN-based models that reduce the total memory requirements on radar sensors. The first, quantization-aware training, relies on the straight-through gradient estimator (STE). The second is based on training distributions over discrete weights.
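The core idea of STE-based quantization-aware training can be sketched on a toy linear regression: the forward pass uses quantized weights, and the backward pass treats rounding as the identity so that gradients update full-precision "shadow" weights. This is a generic illustration under stated assumptions (4-bit signed weights, a clipped STE that zeroes gradients outside `[-W_MAX, W_MAX]`, squared-error loss, synthetic data); all names are hypothetical and it is not the paper's training setup.

```python
import random

NUM_BITS = 4
W_MAX = 1.0
Q_MAX = 2 ** (NUM_BITS - 1) - 1   # 7 positive levels for signed 4-bit codes
STEP = W_MAX / Q_MAX

def quantize(w):
    """Forward pass: round a real-valued weight to the nearest 4-bit level."""
    code = max(-Q_MAX, min(Q_MAX, round(w / STEP)))
    return code * STEP

def ste_grad(w_real, grad_wrt_quantized):
    """Backward pass (straight-through estimator): treat rounding as identity,
    but zero the gradient outside the clipping range [-W_MAX, W_MAX]."""
    return grad_wrt_quantized if abs(w_real) <= W_MAX else 0.0

def mse(weights_q, data):
    return sum((sum(w * xi for w, xi in zip(weights_q, x)) - y) ** 2
               for x, y in data) / len(data)

def train_qat(data, num_weights, lr=0.05, epochs=20):
    w_real = [0.0] * num_weights                   # full-precision shadow weights
    for _ in range(epochs):
        for x, y in data:
            w_q = [quantize(w) for w in w_real]    # forward uses quantized weights
            err = sum(w * xi for w, xi in zip(w_q, x)) - y
            for i in range(num_weights):
                g = 2.0 * err * x[i]               # dLoss/dw_q for squared error
                w_real[i] -= lr * ste_grad(w_real[i], g)
    return w_real

# Synthetic target whose weights lie on the quantization grid.
random.seed(0)
true_w = [3 * STEP, -2 * STEP, 5 * STEP]
data = []
for _ in range(200):
    x = [random.uniform(-1.0, 1.0) for _ in true_w]
    data.append((x, sum(w * xi for w, xi in zip(true_w, x))))

initial_loss = mse([0.0] * 3, data)
w_real = train_qat(data, num_weights=3)
final_loss = mse([quantize(w) for w in w_real], data)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

After training, only the quantized weights `quantize(w)` would be deployed; the shadow weights exist solely to accumulate small gradient steps that rounding would otherwise erase.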

Furthermore, the paper discusses how distributions over discrete weights can be used to create uncertainty estimates in range-Doppler (RD) maps. It also offers insights into what the network has learned, in the form of filter and activation visualizations.
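One way such uncertainty estimates can arise is by sampling many discrete networks from the weight distributions and measuring how much their outputs disagree per cell. The sketch below assumes ternary weights `{-1, 0, +1}`, a single hypothetical 1-D filter, and hand-set probabilities in place of trained ones; a real RD map would be 2-D and processed by a full CNN.

```python
import random
import statistics

VALUES = (-1.0, 0.0, 1.0)   # assumed ternary weight support

def sample_kernel(probs, rng):
    """Draw one discrete kernel: each tap picks a value from its own categorical distribution."""
    return [rng.choices(VALUES, weights=p)[0] for p in probs]

def conv1d_same(signal, kernel):
    """'Same'-size 1-D correlation with zero padding (odd kernel length)."""
    half = len(kernel) // 2
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = n + k - half
            if 0 <= idx < len(signal):
                acc += w * signal[idx]
        out.append(acc)
    return out

def predictive_mean_std(signal, probs, num_samples=200, seed=0):
    """Monte Carlo estimate of per-cell output mean and spread over sampled networks."""
    rng = random.Random(seed)
    outputs = [conv1d_same(signal, sample_kernel(probs, rng)) for _ in range(num_samples)]
    per_cell = list(zip(*outputs))   # group samples by output cell
    means = [statistics.mean(c) for c in per_cell]
    stds = [statistics.pstdev(c) for c in per_cell]
    return means, stds

# Hypothetical filter: confident center tap, uncertain side taps.
probs = [[0.3, 0.4, 0.3], [0.0, 0.02, 0.98], [0.3, 0.4, 0.3]]
signal = [0.0, 0.0, 0.0, 0.0, 5.0, 0.0, 0.0, 0.0, 0.0]   # single strong cell
means, stds = predictive_mean_std(signal, probs)
```

Cells whose output depends on uncertain weights (here, the neighbors of the strong cell) get a large spread, while cells that see only zeros get none, which is the kind of per-cell uncertainty map the paragraph refers to.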

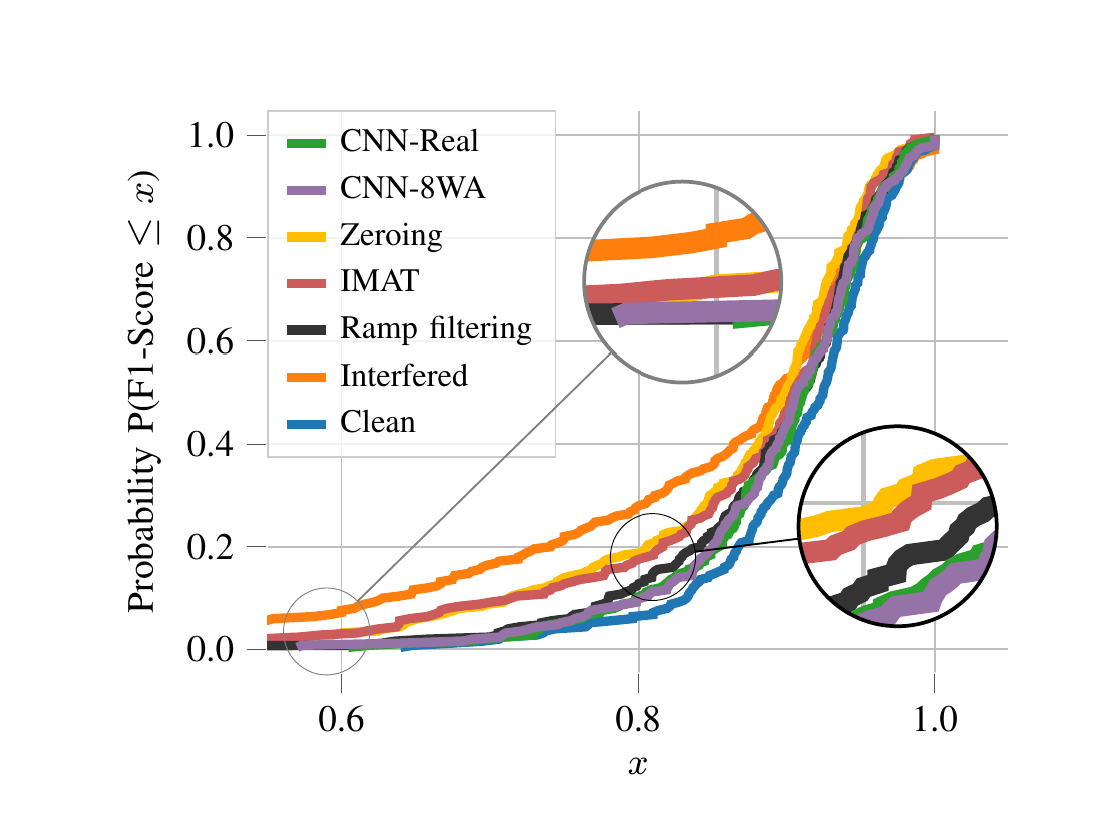

Figure: CDF comparison of the sample-wise F1 score between the real-valued CNN model (CNN-Real), the 8-bit quantized CNN model (CNN-8WA), and three classical methods: zeroing, IMAT, and Ramp filtering. 8-bit quantization reduces the memory required during inference by around 75 % without substantially affecting performance, and the quantized model still outperforms all classical interference mitigation methods.
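The roughly 75 % figure is consistent with simple arithmetic, assuming the real-valued model holds its activations as 32-bit floats (an assumption; the baseline precision is not stated here). For $N$ activation values kept in memory during inference:

```latex
M_{\text{real}} = 32N \ \text{bits}, \qquad
M_{\text{8-bit}} = 8N \ \text{bits}, \qquad
\frac{M_{\text{real}} - M_{\text{8-bit}}}{M_{\text{real}}} = 1 - \frac{8}{32} = 0.75
```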

The full version of this paper is available at https://ieeexplore.ieee.org/document/9364355.
