Hybrid Generative-Discriminative Training of Gaussian Mixture Models

- Status: Finished

- Type: Master Thesis

- Announcement date: 11 Oct 2013

- Student: Wolfgang Roth

- Mentors: Franz Pernkopf, Sebastian Tschiatschek, Robert Peharz

- Research Areas

Short Description

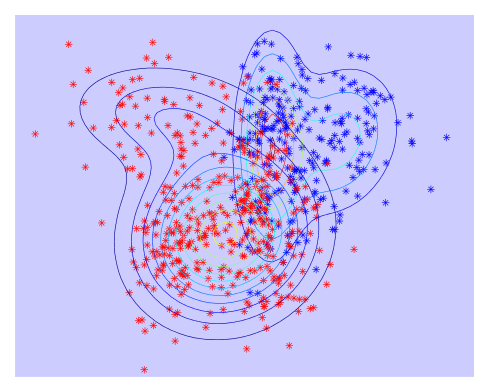

Gaussian Mixture Models (GMMs) are a popular choice for modeling probability density functions, with a vast number of applications in speech and audio technologies and in machine learning in general. For classification, one can use class-dependent GMMs and easily build a probabilistic classifier using Bayes' rule. The problem is that the classical way to train GMMs, i.e., maximizing the likelihood with the expectation-maximization algorithm, is not aware of the classification task. This generative training typically yields sub-optimal classifiers. On the other hand, when the classifier is trained in a purely discriminative way, the model loses most of its probabilistic semantics.
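To make the classifier construction concrete, the following is a minimal sketch of a Bayes classifier built from class-dependent GMMs. It assumes one-dimensional features for brevity, and the mixture parameters and class priors (`CLASS_GMMS`, `PRIORS`) are hand-picked, hypothetical values rather than parameters learned with expectation-maximization. All function names are illustrative and not taken from the thesis.

```python
import math

def gauss_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def gmm_pdf(x, components):
    """Density of a 1-D GMM; components is a list of (weight, mean, variance)."""
    return sum(w * gauss_pdf(x, m, v) for w, m, v in components)

def class_posteriors(x, class_gmms, priors):
    """Bayes' rule: p(c | x) is proportional to p(c) * p(x | c)."""
    joint = {c: priors[c] * gmm_pdf(x, class_gmms[c]) for c in class_gmms}
    evidence = sum(joint.values())  # p(x), the normalizing constant
    return {c: p / evidence for c, p in joint.items()}

def classify(x, class_gmms, priors):
    """Return the class with the highest posterior probability."""
    post = class_posteriors(x, class_gmms, priors)
    return max(post, key=post.get)

# Hypothetical, hand-picked parameters (not learned from data).
CLASS_GMMS = {
    "a": [(0.5, -2.0, 1.0), (0.5, 0.0, 1.0)],
    "b": [(0.7, 3.0, 1.0), (0.3, 5.0, 2.0)],
}
PRIORS = {"a": 0.5, "b": 0.5}

if __name__ == "__main__":
    for x in (-1.0, 4.0):
        print(x, classify(x, CLASS_GMMS, PRIORS), class_posteriors(x, CLASS_GMMS, PRIORS))
```

In generative training, each class-dependent GMM would be fitted separately to the samples of its class, so nothing in the fitting step looks at the decision boundary that `classify` ends up using.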

In this master/diploma thesis, the task is to apply a recently proposed hybrid generative-discriminative learning criterion to GMM training. The goal of this approach is to

- yield good classifiers,

- while still allowing a generative treatment of the model: marginalization of missing features and semi-supervised learning.
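As an illustration of why a generative treatment is useful, the sketch below marginalizes missing features in a GMM. It assumes diagonal covariance matrices, in which case integrating out a missing feature simply drops its factor from each component. With full covariances, one would instead select the sub-vector of means and the sub-matrix of the covariance belonging to the observed features. The function names and the example parameters are hypothetical.

```python
import math

def gauss_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def gmm_pdf_observed(x, components):
    """Marginal density of the observed features under a diagonal-covariance GMM.

    x          : list of feature values, with None marking a missing feature
    components : list of (weight, means, variances), one mean/variance per feature
    """
    total = 0.0
    for weight, means, variances in components:
        p = weight
        for xi, m, v in zip(x, means, variances):
            if xi is None:
                continue  # the missing feature's Gaussian factor integrates to 1
            p *= gauss_pdf(xi, m, v)
        total += p
    return total

# Hypothetical two-component GMM over two features (assumed values).
COMPONENTS = [
    (0.4, [0.0, 1.0], [1.0, 0.5]),
    (0.6, [3.0, -1.0], [2.0, 1.0]),
]

if __name__ == "__main__":
    print(gmm_pdf_observed([1.0, 0.5], COMPONENTS))   # both features observed
    print(gmm_pdf_observed([1.0, None], COMPONENTS))  # second feature missing
```

A classifier built from such class-dependent densities can still compute class posteriors when some features are missing, by using these marginal densities in Bayes' rule.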

Requirements

You should have

- Good programming skills (Matlab is fine, but C++, Java, or Python can also be used)

- Strong interest in Machine Learning (having completed at least CI/EW or similar lectures)

- Basic knowledge of probability theory, ideally with experience in probabilistic graphical models

References

[1] C. M. Bishop, Pattern Recognition and Machine Learning, Springer, 2006.

[2] R. Peharz, S. Tschiatschek, F. Pernkopf, “The Most Generative Maximum Margin Bayesian Networks”, ICML, 2013.

[3] F. Pernkopf and M. Wohlmayr, “Large Margin Learning of Bayesian Classifiers based on Gaussian Mixture Models”, ECML, 2010.