Bayesian Neural Networks for Missing Data Scenarios

- Status: Finished

- Type: Master Thesis

- Announcement date: 12 Oct 2019

- Mentors

- Research Areas

Short Description:

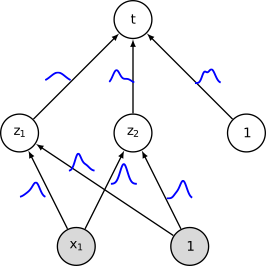

Variational inference is a popular method for training Bayesian neural networks. Instead of learning a single set of network weights, Bayesian inference models a distribution over the weights. This distribution is typically used to compute predictive uncertainties, i.e., to estimate how certain the model is about its own predictions.
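To illustrate how a distribution over weights turns into a prediction uncertainty, the following is a minimal NumPy sketch. It assumes a mean-field Gaussian posterior over the weights of a tiny one-hidden-layer regression network; the names `mu_w1`, `sigma_w1`, `mu_w2`, `sigma_w2` and `predict_with_uncertainty` as well as all numerical values are hypothetical and chosen only for illustration. In practice the posterior parameters would be learned with variational inference rather than drawn at random.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed mean-field Gaussian posterior q(w) = N(mu, sigma^2) over the weights
# of a tiny one-hidden-layer network. The values are made up, not learned.
n_in, n_hidden = 3, 8
mu_w1 = rng.normal(0.0, 0.5, size=(n_in, n_hidden))
sigma_w1 = np.full((n_in, n_hidden), 0.1)
mu_w2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
sigma_w2 = np.full((n_hidden, 1), 0.1)


def predict_with_uncertainty(x, n_samples=200):
    """Monte Carlo estimate of the predictive mean and standard deviation."""
    preds = []
    for _ in range(n_samples):
        # Draw one set of weights: w = mu + sigma * eps with eps ~ N(0, I).
        w1 = mu_w1 + sigma_w1 * rng.standard_normal(mu_w1.shape)
        w2 = mu_w2 + sigma_w2 * rng.standard_normal(mu_w2.shape)
        preds.append(np.tanh(x @ w1) @ w2)
    preds = np.stack(preds)  # shape: (n_samples, n_points, 1)
    return preds.mean(axis=0), preds.std(axis=0)


x = rng.normal(size=(5, n_in))
mean, std = predict_with_uncertainty(x)
```

The spread `std` across weight samples serves as a simple per-input uncertainty estimate: inputs on which the sampled networks disagree receive a larger value.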

In this thesis, the task is to apply Bayesian neural networks to scenarios in which parts of the data are missing, i.e., missing input features and/or missing labels (semi-supervised learning).
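As a rough sketch of what such data can look like in code, the example below encodes missing input features and missing labels as NaN and derives boolean masks from them. This is only one simple, assumed representation (zero-filling plus a mask, and a loss restricted to labelled examples), not the method developed in the thesis; the toy values and the helper `masked_squared_error` are hypothetical.

```python
import numpy as np

# Toy data set; NaN marks a missing input feature or a missing label.
X = np.array([[0.5, np.nan, 1.2],
              [np.nan, 0.3, 0.7],
              [1.0, 0.8, np.nan],
              [0.2, 0.1, 0.4]])
y = np.array([1.0, np.nan, 0.0, np.nan])

feature_observed = ~np.isnan(X)  # True where an input feature is present
label_observed = ~np.isnan(y)    # True where a label is present

# Zero-fill missing inputs and append the mask, so that a network could tell
# an observed zero apart from a missing value (a common heuristic encoding).
X_in = np.concatenate(
    [np.where(feature_observed, X, 0.0), feature_observed.astype(float)],
    axis=1,
)


def masked_squared_error(pred, target, observed):
    """Mean squared error computed over labelled examples only."""
    safe_target = np.where(observed, target, 0.0)  # keep NaN out of the sum
    err = np.where(observed, pred - safe_target, 0.0)
    return (err ** 2).sum() / max(observed.sum(), 1)


pred = np.full_like(y, 0.5)  # placeholder predictions
loss = masked_squared_error(pred, y, label_observed)
```

Unlabelled examples contribute nothing to this supervised loss; a semi-supervised approach would add a further term that also makes use of them.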

Requirements:

- Good programming skills (MATLAB or Python; preferably experience with NumPy and Theano/TensorFlow or similar frameworks)

- Strong interest in machine learning (at least having completed CI/EW or similar lectures, ideally with experience in neural networks)

- Basic knowledge of probability theory