Single-Channel Source Separation in Multisource Environment

- Status

- Open

- Type

- Master Project

- Announcement date

- 06 Mar 2013

- Mentors

- Research Areas

Short Description

Single-channel source separation (SCSS) has already been shown to be an attractive candidate for removing a competing speaker, treated as an interfering signal, in a single-channel scenario [1-4]. However, previous SCSS methods are restricted to the co-channel speech separation task (a speech mixture of two speakers) without background noise or reverberation. The new challenges in [5] introduce the task of separating a target speaker from a multisource reverberant environment, which is a more realistic separation scenario.

The goal of this thesis is to investigate new approaches to the source separation problem in realistic noise scenarios such as the one in [5]. We systematically treat source separation as a parameter estimation problem, in which we estimate the parameters of the underlying sources, namely their sinusoidal components (see, e.g., [6]). We recently addressed the target speaker separation problem in the multisource reverberant scenario of the CHiME 2 challenge. The results of the different teams are available from the organizers. Our paper was presented at the CHiME 2 satellite workshop of ICASSP 2013 [7]; demo wave files are available on the project webpage and demo page.
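
As a minimal sketch of what estimating sinusoidal parameters can mean in practice, the Python function below analyzes a single frame. It applies a Hann window, takes a zero-padded FFT, picks the strongest spectral peaks, and refines each one by quadratic interpolation of the log-magnitude spectrum to obtain frequency, amplitude and phase. This is a generic textbook estimator chosen for illustration, not the method of [6] or [7]. The function name `estimate_sinusoids` and its parameters `num_peaks` and `nfft` are hypothetical. Python/NumPy is used here even though the project itself expects Matlab.

```python
import numpy as np


def estimate_sinusoids(frame, fs, num_peaks, nfft=4096):
    """Estimate (frequency_hz, amplitude, phase) of the strongest sinusoids in one frame.

    Hypothetical helper: Hann window + zero-padded FFT peak picking, refined by
    quadratic interpolation of the log-magnitude spectrum.
    """
    n = len(frame)
    window = np.hanning(n)  # symmetric Hann window
    spectrum = np.fft.rfft(frame * window, nfft)  # zero-padded to nfft points
    mag = np.abs(spectrum)
    log_mag = np.log(mag + 1e-12)

    # Interior local maxima of the magnitude spectrum (DC and Nyquist bins excluded).
    peaks = np.where((mag[1:-1] > mag[:-2]) & (mag[1:-1] >= mag[2:]))[0] + 1
    # Keep only the num_peaks largest maxima.
    peaks = peaks[np.argsort(mag[peaks])[::-1][:num_peaks]]

    params = []
    for k in peaks:
        a, b, c = log_mag[k - 1], log_mag[k], log_mag[k + 1]
        # Fractional offset (in bins) of the vertex of the parabola through a, b, c.
        delta = 0.5 * (a - c) / (a - 2.0 * b + c)
        freq = (k + delta) * fs / nfft
        # A cosine of amplitude A produces a spectral peak of about A * sum(window) / 2.
        peak_mag = np.exp(b - 0.25 * (a - c) * delta)
        amp = 2.0 * peak_mag / window.sum()
        # For a symmetric window, the phase at bin k differs from the sinusoid's
        # phase at sample 0 by pi * delta * (n - 1) / nfft; remove it and wrap.
        phase = np.angle(spectrum[k]) - np.pi * delta * (n - 1) / nfft
        phase = np.angle(np.exp(1j * phase))
        params.append((float(freq), float(amp), float(phase)))
    return sorted(params)  # sorted by frequency
```

For example, applying `estimate_sinusoids(frame, 8000, num_peaks=2)` to a 1024-sample frame that contains two well-separated tones should return two `(frequency, amplitude, phase)` tuples close to the true values. A separation system would then still have to assign such per-frame estimates to the individual sources, which this sketch does not attempt.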

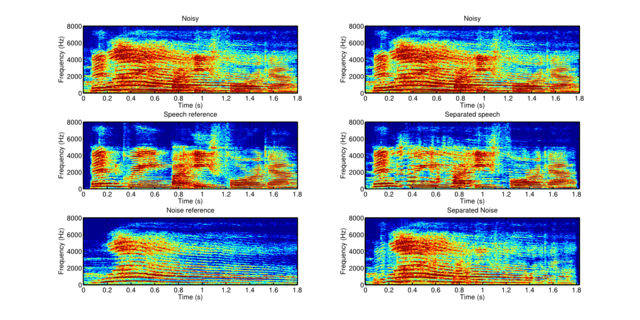

Separation example for a mixture of a female speaker and a child, mixed at -6 dB.
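
The -6 dB in the caption gives the level of one source relative to the other in the mixture. This sketch assumes the ratio is defined as target power over interferer power, averaged over the whole signal; the page does not state the exact definition. Under that assumption, a mixture at a given ratio can be built by scaling the interferer before adding it. `mix_at_ratio` is a hypothetical helper. At -6 dB the interferer carries roughly four times (10^0.6 ≈ 3.98) the average power of the target.

```python
import numpy as np


def mix_at_ratio(target, interferer, ratio_db):
    """Hypothetical helper: scale `interferer` so that
    10 * log10(P_target / P_interferer) == ratio_db, then add it to `target`.

    Returns the mixture and the scaled interferer.
    """
    p_target = np.mean(target ** 2)  # average power of the target
    p_interferer = np.mean(interferer ** 2)  # average power before scaling
    # Gain that brings the interferer power to p_target / 10**(ratio_db / 10).
    gain = np.sqrt(p_target / (p_interferer * 10.0 ** (ratio_db / 10.0)))
    scaled = gain * interferer
    return target + scaled, scaled
```

With `ratio_db=-6.0`, the returned interferer has about 3.98 times the average power of the target. Scaling only the interferer keeps the target at its original level, which is convenient when the separated target is later compared with the clean reference.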

Your Profile

The candidate should be interested in speech signal processing and Matlab programming.

References

[1] M. Cooke, J. R. Hershey, and S. J. Rennie, “Monaural speech separation and recognition challenge,” Elsevier Comput. Speech Lang., vol. 24, no. 1, pp. 1-15, 2010.

[2] P. Mowlaee, R. Saeidi, Z.-H. Tan, M. G. Christensen, T. Kinnunen, P. Fränti, and S. H. Jensen, “A Joint Approach for Single-Channel Speaker Identification and Speech Separation,” IEEE Trans. on Audio, Speech, and Language Process., vol. 20, no. 9, pp. 2586-2601, 2012.

[3] P. Mowlaee, M. G. Christensen, and S. H. Jensen, “New Results on Single-Channel Speech Separation Using Sinusoidal Modeling,” IEEE Trans. on Audio, Speech, and Language Process., vol. 19, no. 5, pp. 1265-1277, 2011.

[4] P. Mowlaee, “New strategies for single-channel speech separation,” PhD thesis, Department of Electronic Systems, Aalborg University, 2010.

[5] CHiME challenge website

[6] M. G. Christensen and P. Mowlaee, “A new metric for VQ-based speech enhancement and separation,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4764-4767, 2011.

[7] P. Mowlaee, J. A. Morales-Cordovilla, F. Pernkopf, H. Pessentheiner, M. Hagmüller, and G. Kubin, “The 2nd CHiME Speech Separation and Recognition Challenge: Approaches on Single-Channel Speech Separation and Model-driven Speech Enhancement,” in Proceedings of the 2nd CHiME Speech Separation and Recognition Challenge, IEEE Int. Conference on Acoustics, Speech and Signal Processing, Vancouver, Canada, May 2013.