Learning Mixtures of Submodular Functions for Image Collection Summarization

- Published: Thu, Jan 01, 2015
- Tags: rotm

We address the problem of image collection summarization by learning mixtures of submodular functions. Submodularity is useful for this problem since it naturally represents characteristics such as fidelity and diversity, desirable for any summary. Several previously proposed image summarization scoring methodologies, in fact, instinctively arrived at submodularity. We provide classes of submodular component functions (including some which are instantiated via a deep neural network) over which mixtures may be learnt.
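To make the idea of a mixture concrete, the following is a minimal sketch and not the paper's implementation. It assumes two textbook submodular components, a facility-location term standing in for fidelity and a square-root-of-cluster-counts term standing in for diversity, combined with non-negative weights and maximized greedily under a size budget. All function names, the toy similarity matrix, the cluster assignment, and the weights are hypothetical.

```python
from typing import Callable, FrozenSet, List, Sequence

SetFn = Callable[[FrozenSet[int]], float]


def facility_location(sim: Sequence[Sequence[float]]) -> SetFn:
    """Fidelity-style term: each image is credited with its best match in S."""
    n = len(sim)

    def f(S: FrozenSet[int]) -> float:
        if not S:
            return 0.0
        return sum(max(sim[i][j] for j in S) for i in range(n))

    return f


def cluster_diversity(clusters: Sequence[FrozenSet[int]]) -> SetFn:
    """Diversity-style term: concave (sqrt) reward per cluster, so repeated
    picks from the same cluster yield diminishing returns."""

    def f(S: FrozenSet[int]) -> float:
        return sum(len(S & c) ** 0.5 for c in clusters)

    return f


def mixture(weights: Sequence[float], components: Sequence[SetFn]) -> SetFn:
    """Non-negative weighted sum of submodular functions (again submodular)."""
    if any(w < 0 for w in weights):
        raise ValueError("mixture weights must be non-negative")

    def f(S: FrozenSet[int]) -> float:
        return sum(w * g(S) for w, g in zip(weights, components))

    return f


def greedy_summary(f: SetFn, ground_set: FrozenSet[int], budget: int) -> FrozenSet[int]:
    """Greedy maximization: repeatedly add the image with the largest gain."""
    S: FrozenSet[int] = frozenset()
    for _ in range(budget):
        best, best_gain = None, 0.0
        for v in sorted(ground_set - S):
            gain = f(S | {v}) - f(S)
            if gain > best_gain:
                best, best_gain = v, gain
        if best is None:  # no remaining image improves the score
            break
        S = S | {best}
    return S


# Hypothetical toy collection of four images (symmetric similarities).
toy_sim: List[List[float]] = [
    [1.0, 0.75, 0.5, 0.0],
    [0.75, 1.0, 0.0, 0.0],
    [0.5, 0.0, 1.0, 0.25],
    [0.0, 0.0, 0.25, 1.0],
]
toy_clusters = [frozenset({0, 1}), frozenset({2, 3})]
toy_score = mixture(
    [1.0, 0.5],  # assumed weights; the paper learns these from human summaries
    [facility_location(toy_sim), cluster_diversity(toy_clusters)],
)
toy_summary = greedy_summary(toy_score, frozenset(range(4)), budget=2)
print(sorted(toy_summary))  # prints [0, 3]
```

In this toy run the greedy procedure first picks image 0, which covers the most of the collection, and then image 3, which is the least well represented by image 0 and comes from the other cluster.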

We formulate the learning of such mixtures as a supervised problem via large-margin structured prediction. As a loss function, and for automatic summary scoring, we introduce a novel summary evaluation method called V-ROUGE, and test both submodular and non-submodular optimization (using the submodular-supermodular procedure) to learn a mixture of submodular functions. Interestingly, using non-submodular optimization to learn submodular functions provides the best results. We also provide a new data set consisting of 14 real-world image collections along with many human-generated ground truth summaries collected using Amazon Mechanical Turk. We compare our method with previous work on this problem and show that our learning approach outperforms all competitors on this new data set. This paper provides, to our knowledge, the first systematic approach for quantifying the problem of image collection summarization, along with a new data set of image collections and human summaries.
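The large-margin formulation can be sketched in a few lines. The code below is an illustration under stated assumptions rather than the authors' training code: it uses projected subgradient descent on a structured hinge loss, a simple recall-style placeholder loss in place of V-ROUGE, and plain greedy selection for loss-augmented inference (the submodular-supermodular procedure is not implemented here). The names `greedy_argmax`, `learn_mixture_weights`, and `placeholder_loss`, and all hyperparameters, are hypothetical.

```python
from typing import Callable, FrozenSet, List, Sequence

SetFn = Callable[[FrozenSet[int]], float]


def greedy_argmax(score: SetFn, ground_set: FrozenSet[int], budget: int) -> FrozenSet[int]:
    """Approximate argmax over summaries of size `budget` by greedy selection."""
    S: FrozenSet[int] = frozenset()
    for _ in range(min(budget, len(ground_set))):
        best = max(sorted(ground_set - S), key=lambda v: score(S | {v}))
        S = S | {best}
    return S


def learn_mixture_weights(
    components: Sequence[SetFn],
    ground_set: FrozenSet[int],
    human_summary: FrozenSet[int],
    loss: SetFn,
    budget: int,
    steps: int = 50,
    lr: float = 0.1,
    reg: float = 0.01,
) -> List[float]:
    """Projected subgradient descent on
    max_S [w . f(S) + loss(S)] - w . f(human_summary) + (reg / 2) * ||w||^2."""
    w = [1.0] * len(components)
    for _ in range(steps):
        # Loss-augmented inference: find a high-scoring but high-loss summary.
        def augmented(S: FrozenSet[int]) -> float:
            return sum(wi * f(S) for wi, f in zip(w, components)) + loss(S)

        S_hat = greedy_argmax(augmented, ground_set, budget)
        # Subgradient of the hinge term plus the L2 regularizer.
        grad = [f(S_hat) - f(human_summary) + reg * wi for f, wi in zip(components, w)]
        # Projecting onto w >= 0 keeps the learned mixture submodular.
        w = [max(0.0, wi - lr * g) for wi, g in zip(w, grad)]
    return w


# Hypothetical toy problem: two count-based components over four images.
group_a, group_b = frozenset({0, 1}), frozenset({2, 3})
toy_components: List[SetFn] = [
    lambda S: float(len(S & group_a)),
    lambda S: float(len(S & group_b)),
]
toy_human = frozenset({0, 1})


def placeholder_loss(S: FrozenSet[int]) -> float:
    """Stand-in for 1 - V-ROUGE: fraction of the human summary that S misses."""
    return 1.0 - len(S & toy_human) / len(toy_human)


toy_weights = learn_mixture_weights(
    toy_components, frozenset(range(4)), toy_human, placeholder_loss, budget=2
)
```

On this toy problem the learned weights favor the first component, so greedy selection with the learned mixture recovers the human summary. With several collections and several human summaries per collection, the subgradients would be accumulated over all training pairs before each update.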

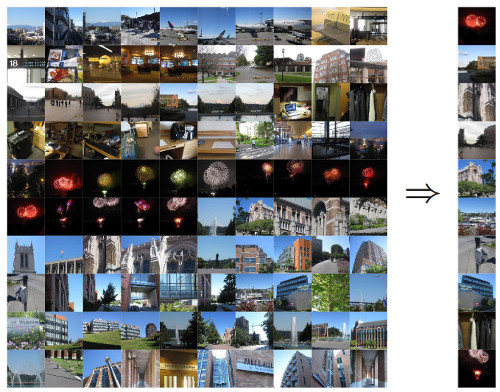

The figure shows one of the image collections considered, together with a candidate summary.

The full paper is available here.
